This article briefly introduces how the "video translation dubbing software" works, what it can do, and how to use it. It covers:

- What is this thing, and what is it used for?

- How to download, install, and update

- Where to download models

- How to choose a translation channel

- What is a proxy, and is it necessary?

- How to use it specifically

- How to use CUDA acceleration

- How to use the original video's voice for dubbing

- How to use GPT-SoVITS dubbing

- What to do when encountering problems

- Is there a charge, and are there any restrictions?

- Will the project die?

- Can I modify the source code?

What is this thing, and what is it used for?

This is an open-source video translation and dubbing tool (licensed under GPL-v3). It translates a video with audio in one language into a video with audio in another language and embeds subtitles in that language. For example, if you have an English movie with English audio and no English or Chinese subtitles, you can use this tool to turn it into a movie with Chinese subtitles and Chinese dubbing.

Open-source address: https://github.com/jianchang512/pyvideotrans

In addition to this core function, it also comes with some other tools:

- Speech to Text: Converts the audio in a video or audio file into text and exports it as a subtitle file.

- Audio and Video Separation: Separates a video into a silent video file and an audio file.

- Text Subtitle Translation: Translates text or SRT subtitle files into text or subtitles in other languages.

- Video Subtitle Merging: Embeds a subtitle file into a video.

- Audio, Video, and Subtitle Merging: Combines a video file, an audio file, and a subtitle file into one file.

- Text to Speech: Synthesizes any text or SRT file into an audio file.

- Vocal and Background Separation: Separates human voices from other sounds in a video into two audio files.

- Download YouTube Videos: Downloads YouTube videos online.

What is the principle behind this tool?

The original video is first split into an audio file and a silent MP4 using ffmpeg. Then the speech in the audio is recognized using an openai-whisper or faster-whisper model and saved as an SRT subtitle file. Next, the SRT subtitle file is translated into the target language and saved as a new SRT subtitle file. Finally, the translated text is synthesized into a dubbed audio file.

Then, the dubbed audio file, the subtitle SRT file, and the original silent MP4 are merged into a video file to complete the translation.

Of course, the intermediate steps are more complex, such as extracting background music and human voices, aligning subtitles with sound and image, voice cloning, and CUDA acceleration.
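The split and merge steps described above can be sketched as ffmpeg command lines built in Python. This is only an illustration under assumptions: the function names and the file names (novoice.mp4, audio.wav) are hypothetical, and the flags are ordinary ffmpeg options rather than the exact commands the software runs. Speech recognition, translation, and speech synthesis are left out.

```python
from pathlib import Path


def split_commands(video: str, workdir: str) -> list[list[str]]:
    """Build ffmpeg commands that split a video into a silent MP4 and a WAV file."""
    work = Path(workdir)
    silent = (work / "novoice.mp4").as_posix()  # hypothetical file name
    audio = (work / "audio.wav").as_posix()     # hypothetical file name
    return [
        # -an drops the audio stream; the video stream is copied unchanged.
        ["ffmpeg", "-y", "-i", video, "-an", "-c:v", "copy", silent],
        # -vn drops the video stream; mono 16 kHz WAV is a common input
        # format for Whisper-style recognizers (an assumption here).
        ["ffmpeg", "-y", "-i", video, "-vn", "-ac", "1", "-ar", "16000", audio],
    ]


def merge_command(silent: str, dubbed: str, srt: str, output: str) -> list[str]:
    """Build an ffmpeg command that merges silent video, dubbed audio, and subtitles."""
    return [
        "ffmpeg", "-y",
        "-i", silent,
        "-i", dubbed,
        # Take the video from the first input and the audio from the second.
        "-map", "0:v:0", "-map", "1:a:0",
        # Burning subtitles into the picture requires re-encoding the video.
        # Paths with special characters would need escaping for this filter.
        "-vf", f"subtitles={srt}",
        "-c:v", "libx264", "-c:a", "aac",
        output,
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` on a machine where ffmpeg is installed.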

Can I deploy from source code?

Yes. In fact, no pre-packaged versions are provided for macOS and Linux, so on those systems the software can only be deployed from source code. Please see the repository page for details: https://github.com/jianchang512/pyvideotrans

How to download, install, and update

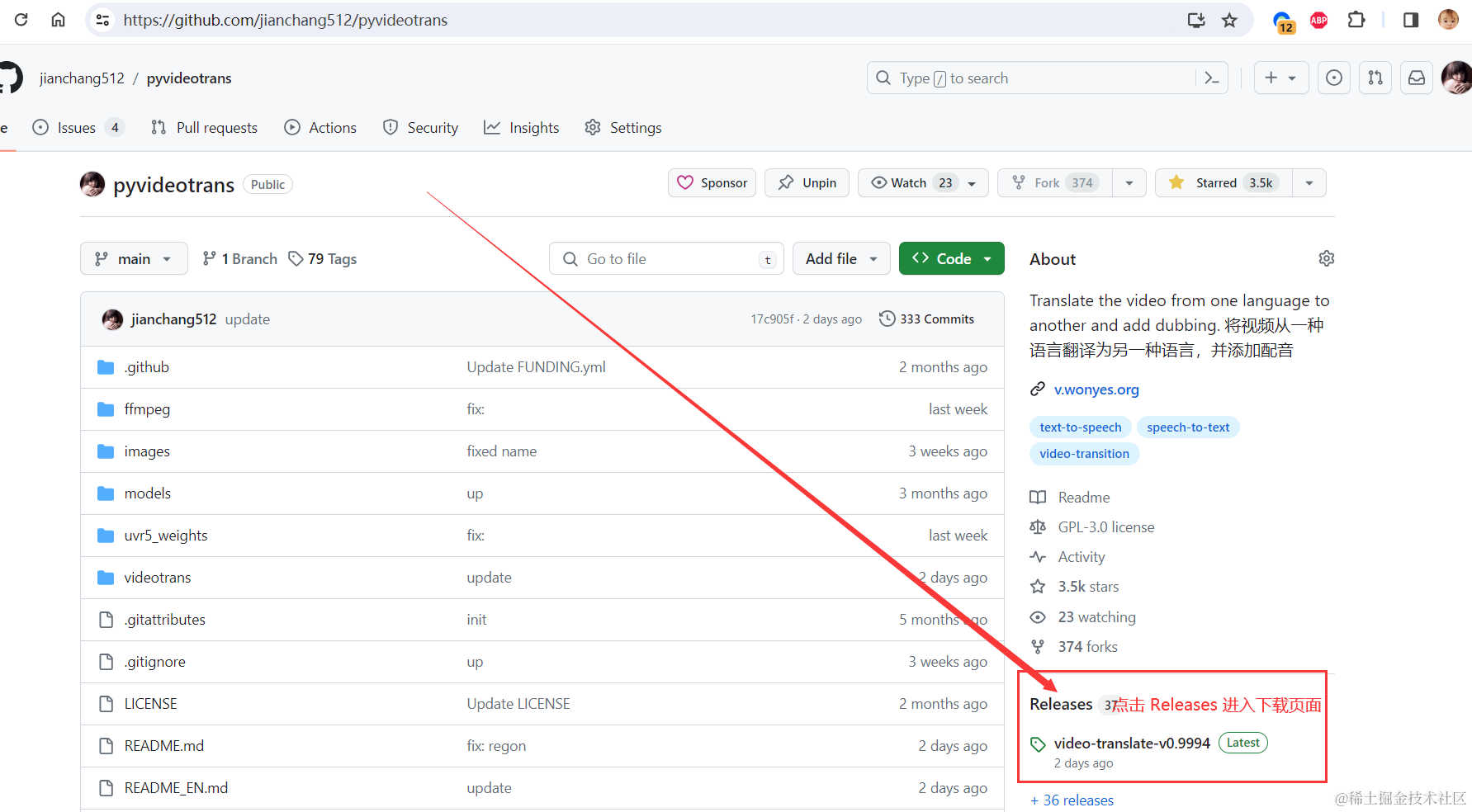

Download from GitHub

This is an open-source project hosted on GitHub, so the preferred download address is naturally GitHub: https://github.com/jianchang512/pyvideotrans/releases. After opening the page, select the topmost download.

If you start from the project homepage, https://github.com/jianchang512/pyvideotrans, click the "Releases" link on the right side of the page, about halfway down, to reach the download page above.

Updating is very simple. Go to the download page and check whether the latest version is newer than the one you are currently using. If it is, download it again, then unzip it over the existing installation.
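The "is the latest version newer than mine" check can also be done in a few lines of Python. The sketch below assumes that release tags are dotted numbers such as v1.2.3, which may not match the project's actual tag naming. It reads the tag from GitHub's public "latest release" REST endpoint, and the helper names are hypothetical.

```python
import json
import re
import urllib.request

# GitHub REST endpoint that describes the latest release of a repository.
LATEST_RELEASE_API = (
    "https://api.github.com/repos/jianchang512/pyvideotrans/releases/latest"
)


def parse_version(tag: str) -> tuple[int, ...]:
    """Turn a tag such as 'v1.2.3' into (1, 2, 3); non-numeric parts are ignored."""
    return tuple(int(n) for n in re.findall(r"\d+", tag))


def is_newer(latest_tag: str, current_tag: str) -> bool:
    """Return True when latest_tag is a higher version than current_tag."""
    return parse_version(latest_tag) > parse_version(current_tag)


def fetch_latest_tag(timeout: float = 10.0) -> str:
    """Ask GitHub for the tag name of the latest release (needs network access)."""
    with urllib.request.urlopen(LATEST_RELEASE_API, timeout=timeout) as resp:
        return json.load(resp)["tag_name"]
```

For example, `is_newer(fetch_latest_tag(), "v1.0")` tells you whether a download is worthwhile, provided the assumed tag format holds.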

Download and Install from the Documentation Site

Of course, an easier way is to go to the documentation site and click download directly: https://pyvideotrans.com

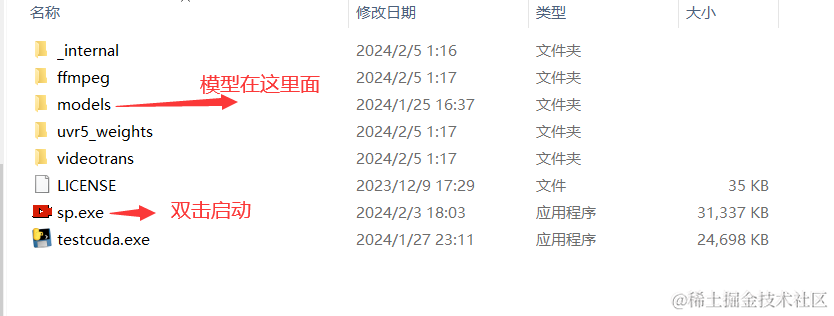

After unzipping, double-click sp.exe to open and use the software.

Unzip to a directory whose path contains only English letters and digits. It is best to avoid Chinese characters and spaces, otherwise some strange problems may occur.
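If you are unsure whether a directory is suitable, a small check like the following can help. It is only a sketch of the rule above: the function name is hypothetical, and the set of allowed characters (letters, digits, and common path punctuation) is an assumption, not something the software itself enforces.

```python
import re

# Letters, digits, and ordinary path punctuation; no spaces, no non-ASCII text.
SAFE_PATH = re.compile(r"[A-Za-z0-9_.:\\/-]+")


def is_safe_install_path(path: str) -> bool:
    """Return True if the whole path uses only the characters allowed above."""
    return SAFE_PATH.fullmatch(path) is not None
```

With this rule, a path such as `D:\tools\pyvideotrans` passes, while `D:\My Tools\pyvideotrans` is rejected because of the space.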

The list of files after unzipping is as follows:

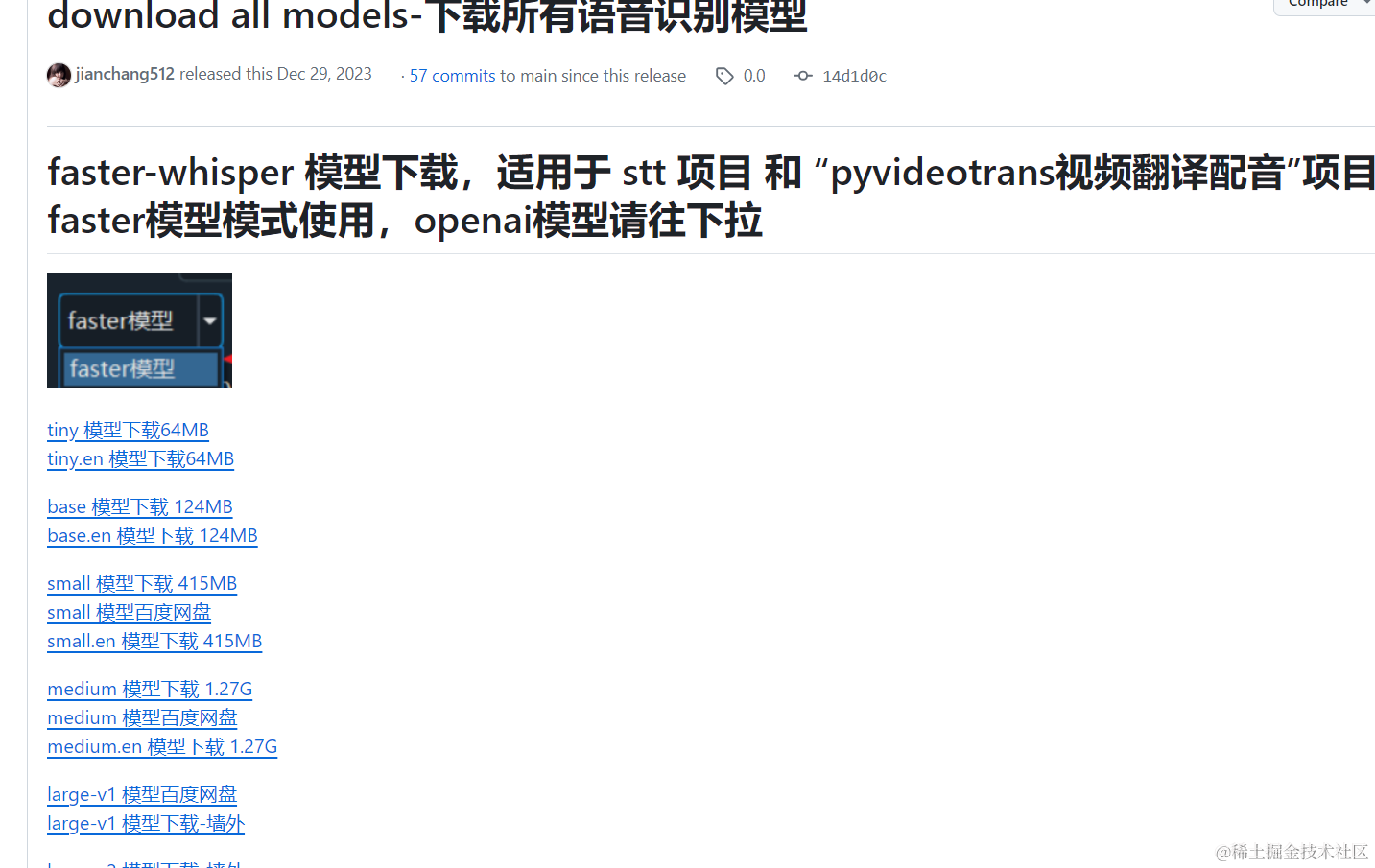

Where to download models

The tiny model is built-in by default. This is the smallest and fastest model, but it is also the least accurate. If you need other models, please go to this page to download them: https://github.com/jianchang512/stt/releases/tag/0.0

How to choose a translation channel

After the subtitles have been recognized, you need a translation channel if you want to convert them into another language, for example when the original is an English video and you want to embed Chinese subtitles after processing.
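Whichever channel is used, translating an SRT file means replacing the text of each cue while keeping its index and timestamps. The sketch below shows that idea with a pluggable `translate` callable standing in for a real channel. Both the function name and the callable are hypothetical, and this is not the software's own implementation.

```python
import re
from typing import Callable


def translate_srt(srt_text: str, translate: Callable[[str], str]) -> str:
    """Translate the text of every SRT cue, keeping indexes and timestamps."""
    blocks = re.split(r"\n\s*\n", srt_text.strip())
    out = []
    for block in blocks:
        lines = block.splitlines()
        # A well-formed cue has an index line, a timing line, and some text.
        if len(lines) < 3 or "-->" not in lines[1]:
            out.append(block)  # leave malformed blocks untouched
            continue
        index, timing = lines[0], lines[1]
        text = "\n".join(lines[2:])
        out.append(f"{index}\n{timing}\n{translate(text)}")
    return "\n\n".join(out) + "\n"
```

Passing `str.upper` as `translate` is a quick way to try it without any network access; a real channel would call its translation API inside that callable.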

Currently supported: Microsoft Translate, Google Translate, Baidu Translate, Tencent Translate, DeepL Translate, ChatGPT Translate, AzureGPT Translate, Gemini Pro Translate, DeepLx Translate, OTT Offline Translate, FreeGoogle Translate, FreeChatGPT Translate

FreeChatGPT Translate: This is a free ChatGPT translation interface sponsored by apiskey.top. No SK (API key) or configuration is required; just select it to use it. It is based on the GPT-3.5 model.

FreeGoogle Translate: This channel is a reverse proxy for Google Translate. It can be used without a proxy, but there are request limits. It is recommended for novice users who do not know how to configure a proxy. Other users who want to use Google Translate should fill in the network proxy address instead.

DeepL Translate: This probably gives the best translation quality, even better than ChatGPT. Unfortunately, the paid version cannot be purchased in China, and the free version is difficult to call through an API. DeepLx is a tool for using DeepL for free, but a local deployment is basically unusable: because there are many subtitles and translation is multi-threaded, the IP is easily blocked or rate-limited. You can consider deploying it on Tencent Cloud to reduce the error rate.

https://juejin.cn/user/4441682704623992/posts

Microsoft Translate: Completely free and works without a proxy, but frequent use may still lead to IP restrictions.

Google Translate: If you have a proxy, know what a proxy is, and know how to fill in the proxy address, Google Translate is the recommended first choice. It is free and the quality is great. You only need to fill in the proxy address in the text box.

See also this method: a small tool for using Google Translate directly without a proxy.

Tencent Translate: If you know nothing about proxies, do not bother with them; apply for a free Tencent Translate API instead (see the Tencent Translate API application guide). The first 5 million characters per month are free.

Baidu Translate: You can also apply for a Baidu Translate API (see the Baidu Translate API application guide). Without authentication you get 50,000 free characters per month; with personal authentication you get 1 million free characters per month.
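To get a feel for how far these free quotas go, you can count the characters of subtitle text in an SRT file and divide the quota by that number. The sketch below uses the quota figures quoted above. How each provider actually counts characters is an assumption, and the function names are hypothetical.

```python
# Monthly free quotas as quoted in this article (characters per month).
FREE_CHARS_PER_MONTH = {
    "tencent": 5_000_000,
    "baidu_unverified": 50_000,
    "baidu_verified": 1_000_000,
}


def subtitle_char_count(srt_text: str) -> int:
    """Count characters of cue text, skipping index and timing lines.

    Simplification: a cue line made only of digits is treated as an index.
    """
    count = 0
    for line in srt_text.splitlines():
        line = line.strip()
        if not line or line.isdigit() or "-->" in line:
            continue
        count += len(line)
    return count


def files_within_quota(chars_per_file: int, channel: str) -> int:
    """How many files of this size fit into one month's free quota."""
    if chars_per_file <= 0:
        raise ValueError("chars_per_file must be positive")
    return FREE_CHARS_PER_MONTH[channel] // chars_per_file
```

For instance, a subtitle file with 25,000 characters of text fits twice into the unauthenticated Baidu quota and 200 times into the Tencent quota.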

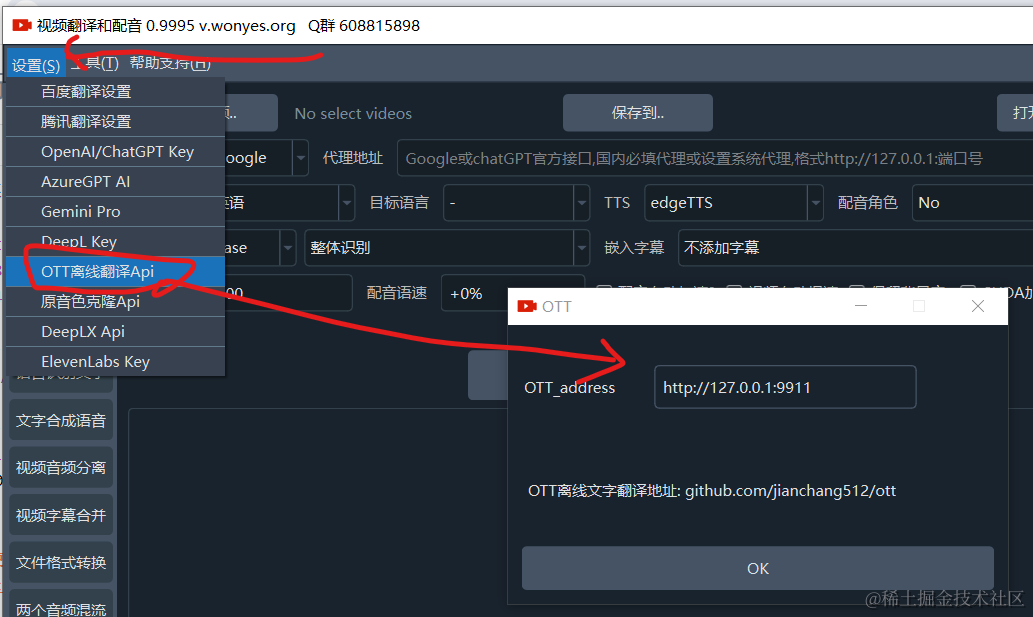

Using OTT Offline Translate: If you are willing to tinker, you can deploy the free OTT offline translation service. The download address is https://github.com/jianchang512/ott. After deployment, fill in its address under the software menu - Settings - OTT Offline Translate.

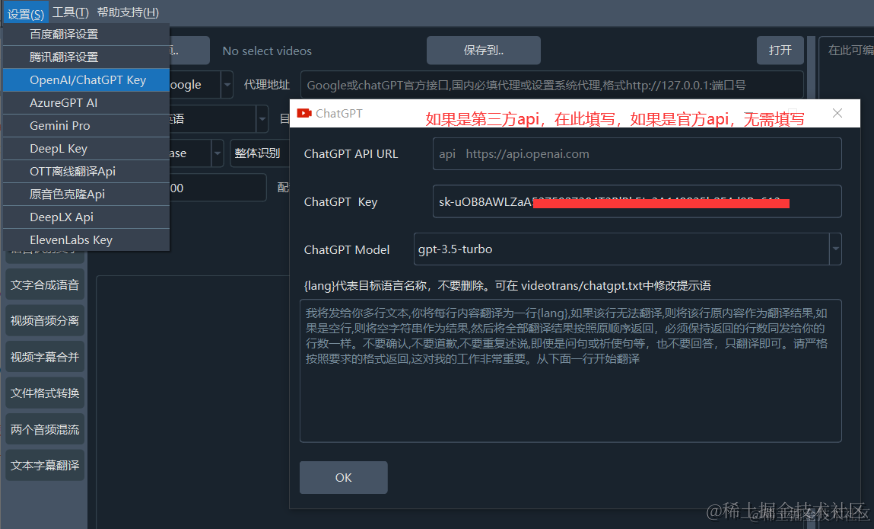

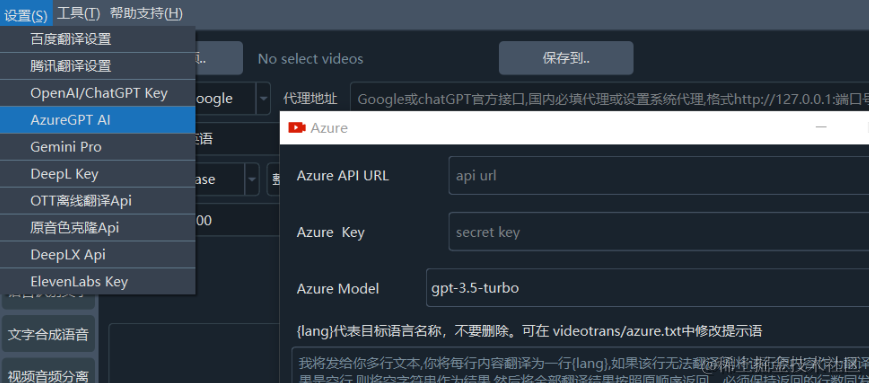

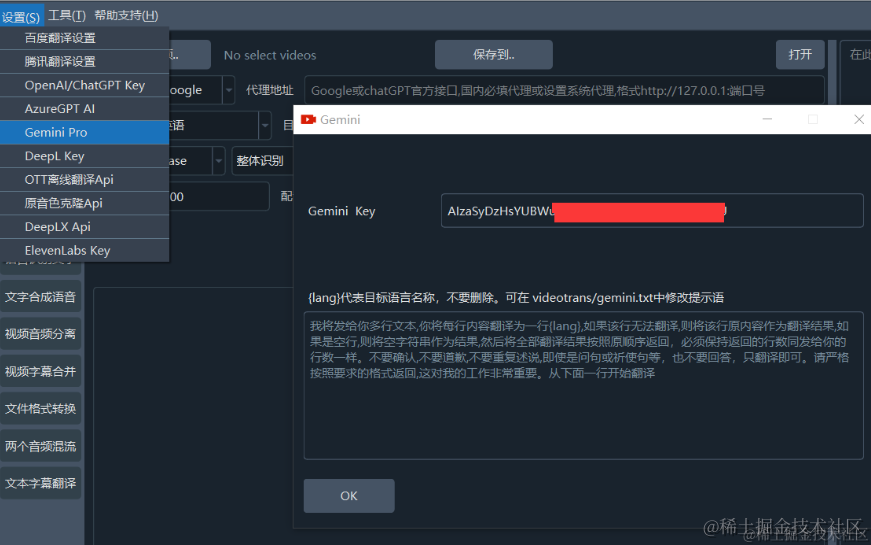

Using AI Translation ChatGPT / Azure / Gemini:

ChatGPT and AzureGPT require paid accounts; free accounts cannot be used. Once you have an account, open the menu - Settings - OpenAI/chatGPT key and fill in your ChatGPT SK value. AzureGPT and Gemini are also configured under menu - Settings.

Note that if you are using the official ChatGPT API, you do not need to fill in the "API URL". If it is a third-party API, fill in the API address provided by the third party.
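The rule for the "API URL" field amounts to "empty means the official endpoint". A minimal sketch of that rule follows. The function name is hypothetical, and using https://api.openai.com/v1 as the fallback is an assumption about what the official API base is; it is not taken from the software's code.

```python
# Base URL of OpenAI's official API, used when no third-party URL is given.
OFFICIAL_OPENAI_BASE = "https://api.openai.com/v1"


def resolve_api_base(api_url: str = "") -> str:
    """Return the third-party API URL if one was entered, else the official base."""
    url = api_url.strip().rstrip("/")
    return url or OFFICIAL_OPENAI_BASE
```

Leaving the field empty therefore yields the official base, while a third-party address is used as entered, minus any trailing slash.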

ChatGPT Access Guide: Quickly obtain and configure API keys and fill them into the software/tool for use https://juejin.cn/post/7342327642852999168

OpenAI's official ChatGPT API, Gemini, and AzureGPT all require a proxy to be filled in, otherwise they cannot be accessed.

AzureGPT is configured in the Settings menu as well.

Gemini is currently free. It becomes available once you fill in the API key and set the proxy correctly.

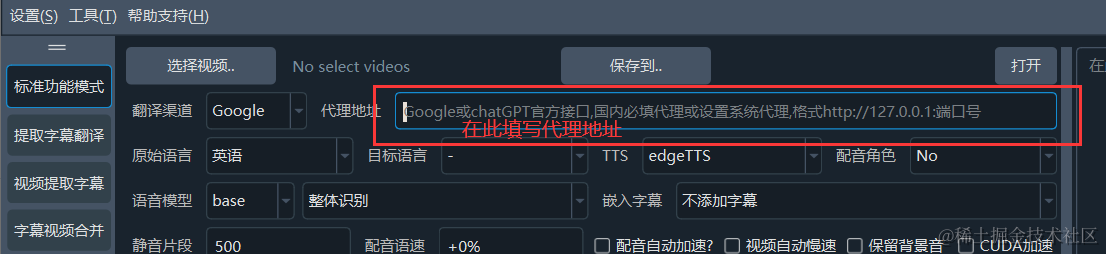

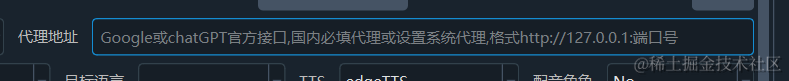

What is a proxy, and is it necessary?

If you want to use Google Translate, the official ChatGPT API, or Gemini/AzureGPT, a proxy is required. Fill in a proxy address of the form http://127.0.0.1:port number in the proxy address box. Please note that the port must be an HTTP-type port, not a SOCKS port.

For example, with some proxy clients you would fill in http://127.0.0.1:10809, and with others http://127.0.0.1:7890. If you are using a proxy but do not know what to fill in, look in the lower left or upper right corner of the proxy software, or look carefully for the word "http" followed by a 4-5 digit number, and then fill in http://127.0.0.1:port number.

If you do not understand what a proxy is at all, please look it up yourself with a search engine such as Baidu; for reasons you can guess, it is not convenient to say more here.

Please note: if you do not use a proxy, leave the proxy address empty. Do not fill it in at random, and in particular do not put an API address here.
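The format rule for the proxy address can be checked mechanically. The sketch below validates an address of the form http://host:port and shows how such an address could be handed to Python's standard urllib. The function names are hypothetical, and this is not how the software itself validates the field.

```python
import urllib.request
from urllib.parse import urlsplit


def validate_http_proxy(address: str) -> str:
    """Return a normalized http://host:port address or raise ValueError."""
    parts = urlsplit(address.strip())
    if parts.scheme != "http":
        # Rejects socks5://... and addresses written without http://.
        raise ValueError("proxy address must start with http://")
    if not parts.hostname:
        raise ValueError("proxy address is missing a host such as 127.0.0.1")
    port = parts.port  # raises ValueError if the port is not a valid number
    if port is None:
        raise ValueError("proxy address is missing a port number")
    return f"http://{parts.hostname}:{port}"


def proxy_opener(address: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener that sends HTTP and HTTPS requests via the proxy."""
    proxy = validate_http_proxy(address)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

An address such as http://127.0.0.1:10809 passes the check, while a SOCKS address or an API URL without a port is rejected.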

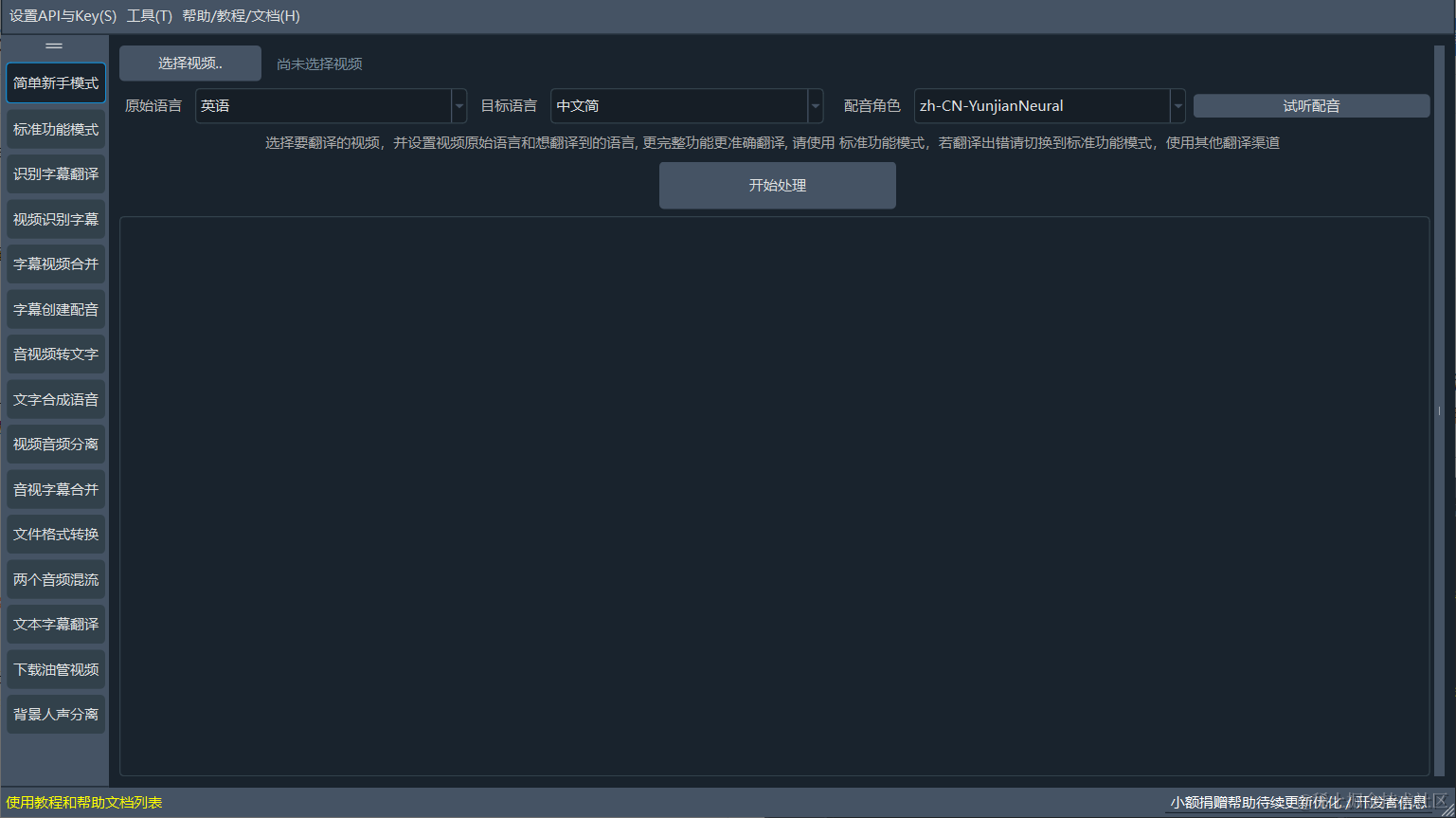

How to use it specifically

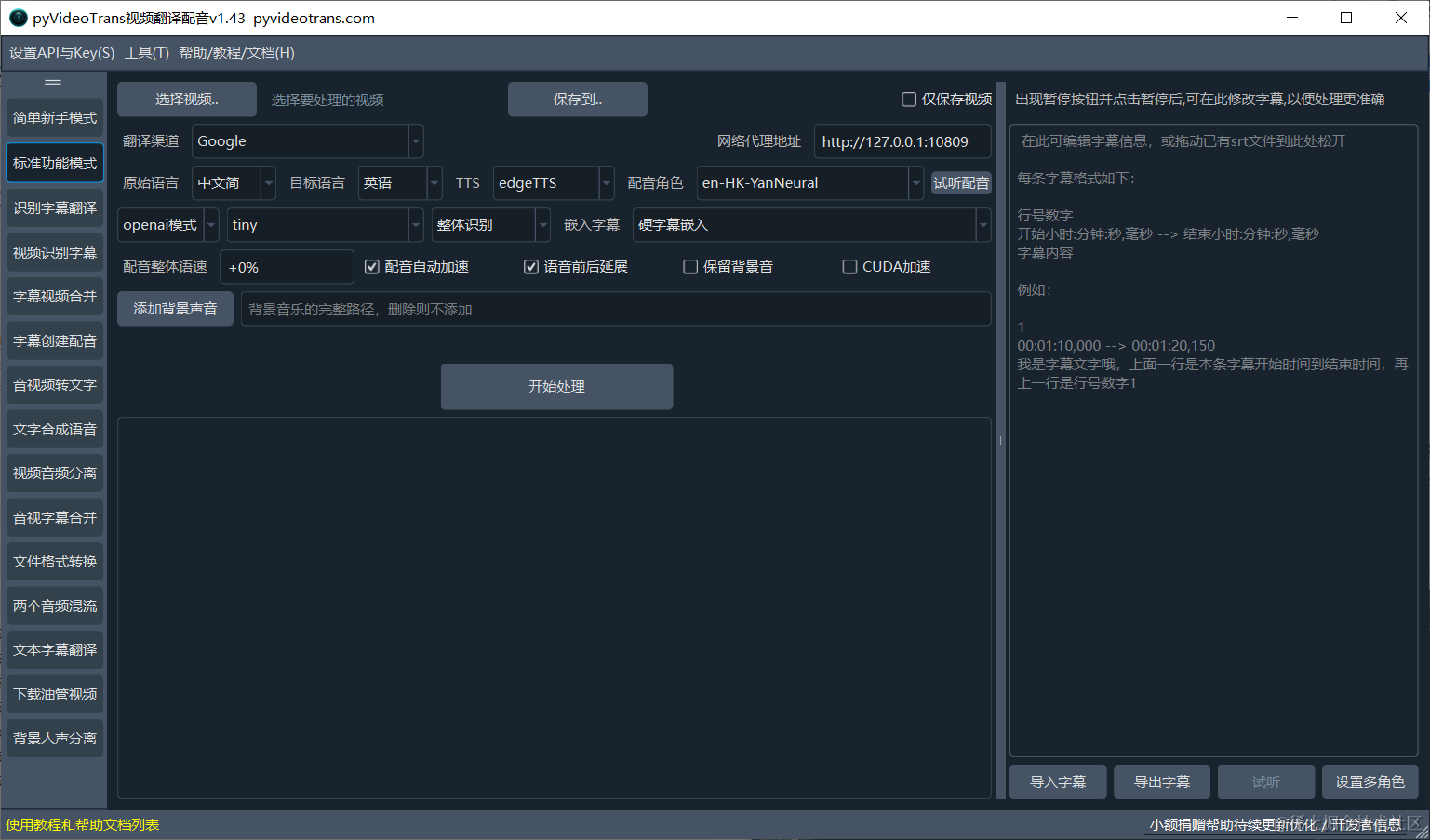

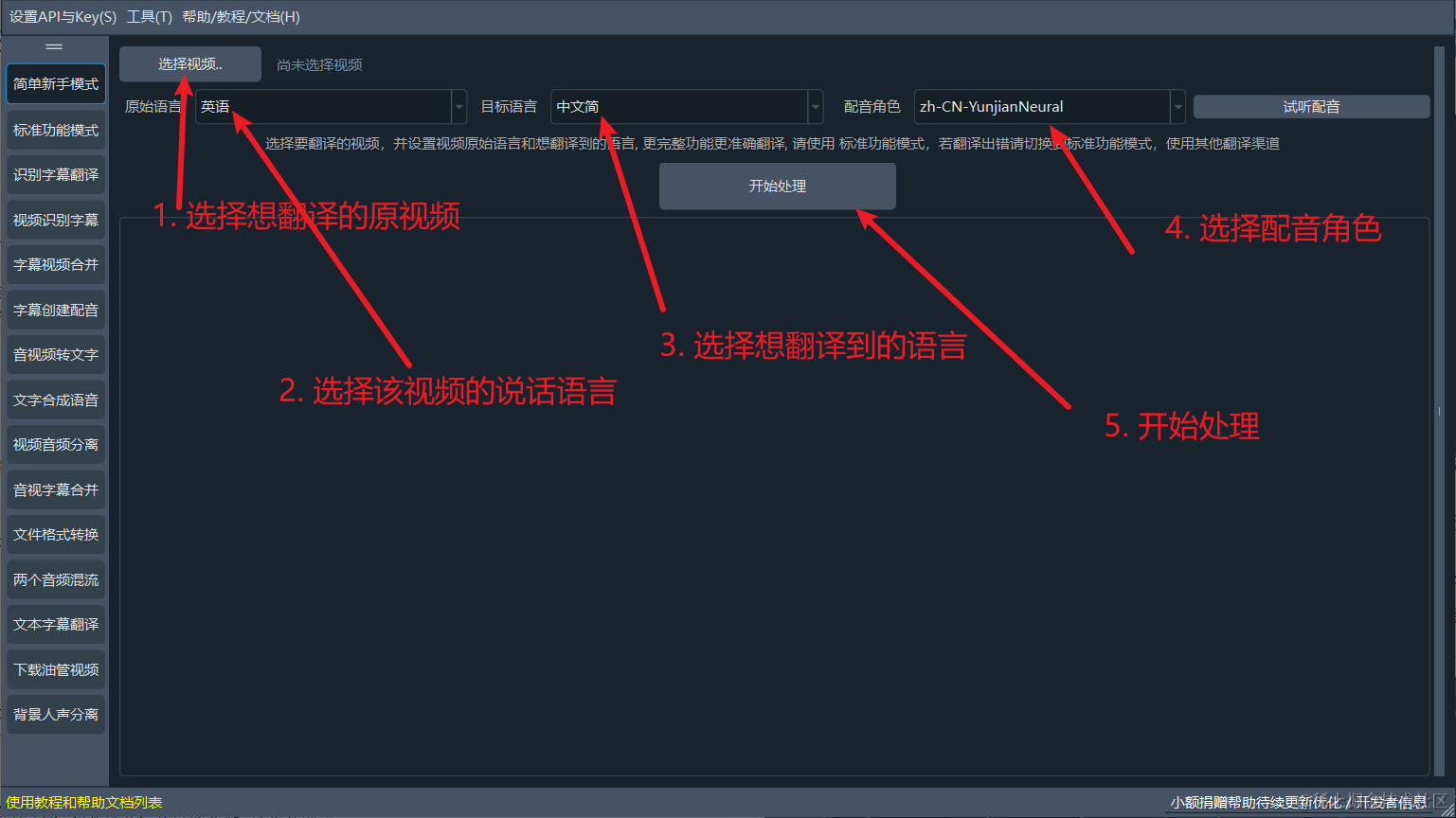

Double-click sp.exe to open the software. The default interface is as follows:

The option selected by default on the left is the simple novice mode, which lets new users try the software quickly. Most of the options are already set by default.

Of course, you can choose the standard function mode for a high degree of customization and to run the entire process of video translation + dubbing + subtitle embedding. The other buttons on the left are either split out from this function or are simple auxiliary functions. Let's demonstrate how to use the simple novice mode.

How to use CUDA acceleration

How to use the original video's voice for dubbing

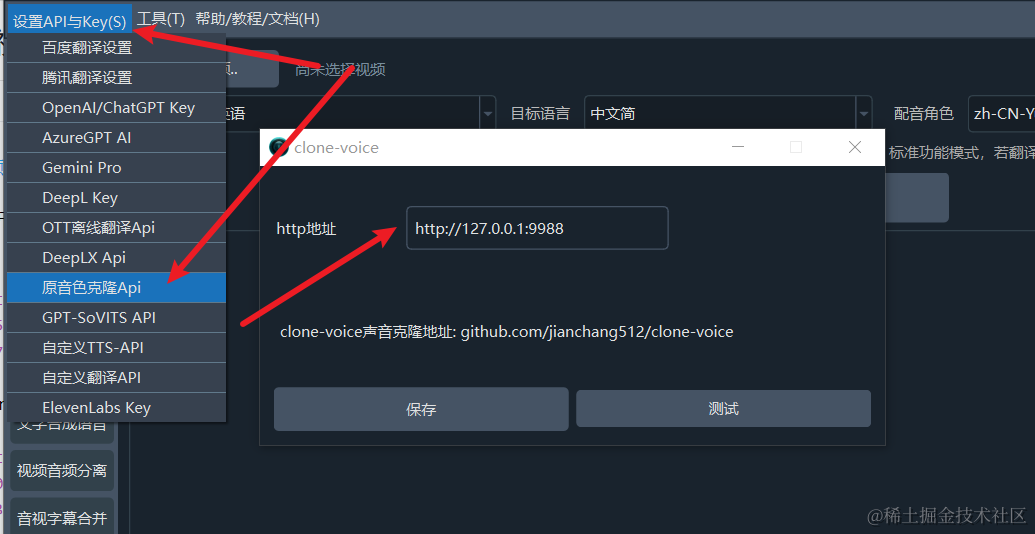

First, you need another open-source project, clone-voice: https://github.com/jianchang512/clone-voice. After installing, deploying, and configuring its model, fill in the address of the deployed project under the software menu - Settings - Original Voice Clone API.

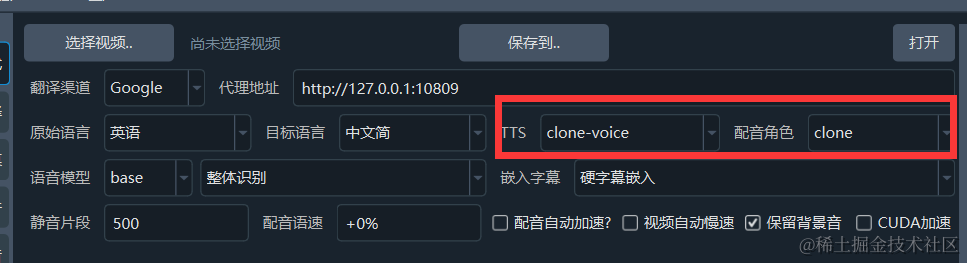

Then select "clone-voice" as the TTS type and "clone" as the dubbing role to use it.

How to use GPT-SoVITS dubbing

The software now supports using GPT-SoVITS for dubbing. After deploying GPT-SoVITS, start its API service, and then fill in the address under the video translation software's menu - Settings - GPT-SOVITS.

You can check these two articles for details:

Call GPT-SoVITS in other software to synthesize text into speech https://juejin.cn/post/7341401110631350324

API improvement and use of the GPT-SoVITS project https://juejin.cn/post/7343138052973297702

What to do when encountering problems

First, read the project main page carefully: https://github.com/jianchang512/pyvideotrans. Most problems are explained there.

Second, you can visit the documentation website: https://pyvideotrans.com

Third, if you still cannot solve the problem, submit an issue here: https://github.com/jianchang512/pyvideotrans/issues. There is also a QQ group listed on the project main page, https://github.com/jianchang512/pyvideotrans, which you can join.

It is recommended to follow my WeChat official account (pyvideotrans). Its articles are all original pieces written by me, covering usage tutorials, common problems, and related tips for the software. Because my energy is limited, tutorials for this project are only published on my Juejin blog and WeChat official account; GitHub and the documentation website will not be updated as frequently.

To find the official account, search for pyvideotrans in WeChat.

Is there a charge, and are there any restrictions?

The project is open source under the GPL-v3 license and free to use, with no built-in charges or restrictions (use must comply with Chinese law). Of course, Tencent Translate, Baidu Translate, DeepL Translate, ChatGPT, and AzureGPT may charge for their services, but that has nothing to do with me, and they do not pay me anything either.

Will the project die?

No project lives forever; there are only long-lived and short-lived projects. Projects that run purely on passion may die sooner. Of course, if you want this one to die more slowly, live longer, and receive effective ongoing maintenance and optimization along the way, you can consider donating to help it survive a few more days.

Can I modify the source code?

The source code is completely open. You can deploy it locally and modify it for your own use. Note, however, that the source code is licensed under GPL-v3: if you integrate it into your own project, your project must also be open source in order not to violate the license.