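This article briefly introduces the principles, features, uses and usage of the "video translation and dubbing software". It covers:

- What is it, and what is it for

- How to download, install, and update

- Where to download models

- How to choose a translation channel

- What is a proxy, and is it required

- How to use it

- How to use CUDA acceleration

- How to dub with the voice from the original video

- How to dub with GPT-SoVITS

- What to do if you run into problems

- Is it free, and are there any limits

- Will the project die

- Can I modify the source code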

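What is it, and what is it for

This is an open-source video translation and dubbing tool, licensed under GPL-v3. It takes a video spoken in one language and turns it into a video spoken in another language, with subtitles in that language embedded. For example, suppose you have an English movie with English audio and no English or Chinese subtitles. This tool can convert it into a movie with Chinese subtitles and Chinese dubbing.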

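Besides this core feature, it also ships with several other tools:

- Speech to text: recognizes the speech in a video or audio file as text and exports it as a subtitle file.

- Audio/video separation: splits a video into a silent video file and an audio file.

- Text/subtitle translation: translates text or SRT subtitle files into text or subtitles in another language.

- Video + subtitle merging: embeds a subtitle file into a video.

- Video + audio + subtitle merging: combines a video file, an audio file and a subtitle file into a single file.

- Text to speech: synthesizes any text or SRT file into an audio file.

- Vocal/background separation: splits the human voices in a video from all other sounds into 2 audio files.

- YouTube download: downloads YouTube videos online.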

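How does this tool work?

First, ffmpeg splits the original video into an audio file and a silent MP4. Next, the openai-whisper or faster-whisper model recognizes the speech in the audio and saves it as SRT subtitles. The SRT subtitles are then translated into the target language and saved as a new SRT file. Finally, the translated text is synthesized into a dubbed audio file.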
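The recognition step produces an SRT file. The sketch below shows one way to turn recognized segments into SRT text. It is a rough illustration and not the project's own code. It assumes each segment is a `(start_seconds, end_seconds, text)` tuple. faster-whisper's `WhisperModel.transcribe()` yields segment objects with `start`, `end` and `text` attributes, and these can be converted into that shape.

```python
from typing import Iterable, Tuple


def format_timestamp(seconds: float) -> str:
    """Convert seconds to the SRT timestamp format HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: Iterable[Tuple[float, float, str]]) -> str:
    """Build SRT text from (start, end, text) tuples, numbering cues from 1."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each SRT cue: index line, timing line, text line(s).
        blocks.append(
            f"{index}\n{format_timestamp(start)} --> {format_timestamp(end)}\n{text.strip()}\n"
        )
    # A blank line separates consecutive cues.
    return "\n".join(blocks)
```

With faster-whisper installed, you could call it like this: `segments, _ = model.transcribe("audio.wav")` followed by `segments_to_srt((s.start, s.end, s.text) for s in segments)`.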

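The dubbed audio file, the SRT subtitle file and the original silent MP4 are then merged into a single video file, which completes the translation.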
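The split and merge steps map onto ordinary ffmpeg commands. The sketch below only builds the argument lists. The exact options pyvideotrans uses may differ, and all file names are placeholders. It assumes soft subtitles in an MP4 container, which ffmpeg stores with the `mov_text` codec.

```python
import subprocess
from typing import List


def split_commands(video: str, audio_out: str, silent_out: str) -> List[List[str]]:
    """Commands that split a video into an audio file and a silent video."""
    return [
        # -vn drops the video; 16 kHz mono WAV is a common input for Whisper-style models.
        ["ffmpeg", "-y", "-i", video, "-vn", "-ac", "1", "-ar", "16000", audio_out],
        # -an drops the audio; -c:v copy keeps the video stream without re-encoding.
        ["ffmpeg", "-y", "-i", video, "-an", "-c:v", "copy", silent_out],
    ]


def merge_command(silent_video: str, dubbed_audio: str, srt: str, output: str) -> List[str]:
    """Command that muxes the silent video, dubbed audio and SRT into one MP4."""
    return [
        "ffmpeg", "-y",
        "-i", silent_video, "-i", dubbed_audio, "-i", srt,
        # Take video from input 0, audio from input 1, subtitles from input 2.
        "-map", "0:v", "-map", "1:a", "-map", "2:s",
        "-c:v", "copy", "-c:a", "aac", "-c:s", "mov_text",
        output,
    ]


def run(command: List[str]) -> None:
    """Run one ffmpeg command, raising CalledProcessError on failure."""
    subprocess.run(command, check=True)
```

Usage could look like `for cmd in split_commands("input.mp4", "audio.wav", "silent.mp4"): run(cmd)`. Burned-in (hard) subtitles work differently. They require re-encoding the video with ffmpeg's `subtitles` filter, e.g. `-vf subtitles=zh.srt`, which needs an ffmpeg build with libass.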

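The intermediate steps are of course more involved. They include separating background music from vocals, aligning subtitles, audio and picture, voice cloning, and CUDA acceleration.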
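Here is one way to picture the audio/subtitle alignment step. When a dubbed clip runs longer than its subtitle's time slot, the clip is sped up by the ratio of the two durations. This is a hypothetical sketch of that idea and not necessarily how pyvideotrans aligns audio; it may also stretch the video or pad silence. The sketch relies on ffmpeg's `atempo` filter. It splits large factors into stages of at most 2.0, because older ffmpeg builds cap a single `atempo` at that value.

```python
def speedup_factor(clip_seconds: float, slot_seconds: float) -> float:
    """How much a dubbed clip must be sped up to fit its slot (1.0 means no change)."""
    if slot_seconds <= 0:
        raise ValueError("slot duration must be positive")
    # Only speed up clips that are too long; shorter clips are left as they are.
    return max(1.0, clip_seconds / slot_seconds)


def atempo_filter(factor: float) -> str:
    """Build an ffmpeg atempo chain, keeping each stage at or below 2.0."""
    if factor < 0.5:
        raise ValueError("factor must be at least 0.5")
    stages = []
    while factor > 2.0:
        stages.append(2.0)
        factor /= 2.0
    stages.append(factor)
    return ",".join(f"atempo={stage:g}" for stage in stages)
```

For example, a 3.0 s clip in a 2.0 s slot gets factor 1.5. A 6.0 s clip in the same slot gets factor 3.0, which becomes `ffmpeg -i clip.wav -filter:a "atempo=2,atempo=1.5" fitted.wav`.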

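Can it be deployed from source?

Yes. In fact, no prebuilt packages are provided for macOS and Linux, so on those systems deploying from source is the only option. For details, see the repository page https://github.com/jianchang512/pyvideotrans

How to download, install, and update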

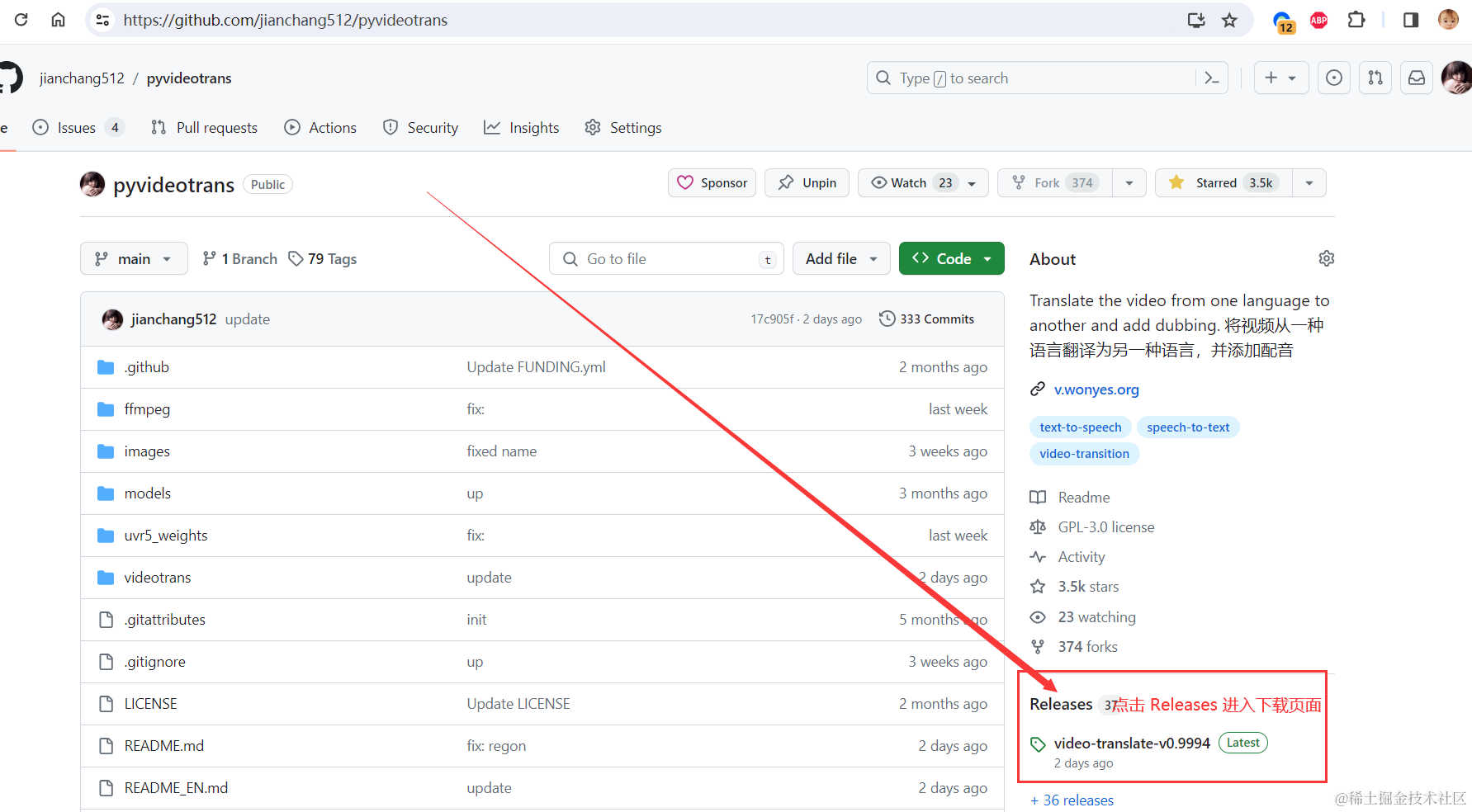

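Downloading from GitHub: this is an open-source project hosted on GitHub, so GitHub is naturally the preferred download location: https://github.com/jianchang512/pyvideotrans/releases . Open the page and download the topmost (latest) release.

If you arrive through the project homepage, e.g. https://github.com/jianchang512/pyvideotrans , click "Releases" on the right side, about halfway down the page, to reach the download page above.

Updating is easy. Go back to the download page and check whether the latest version is newer than the one you are using. If it is, download it again and extract it over your existing folder.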
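To check whether the release page has something newer, you need to compare version numbers numerically, so that 1.10 counts as newer than 1.9. The sketch assumes release tags look like `v1.2.3`. Adapt the parsing if the actual tags differ.

```python
import re
from typing import Tuple


def parse_version(tag: str) -> Tuple[int, ...]:
    """Extract the numeric parts of a tag, e.g. 'v1.2.3' -> (1, 2, 3)."""
    return tuple(int(part) for part in re.findall(r"\d+", tag))


def is_newer(latest: str, current: str) -> bool:
    """True if `latest` is a higher version than `current`."""
    a, b = parse_version(latest), parse_version(current)
    # Pad with zeros so that 1.2 and 1.2.0 compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a > b
```

Comparing integer tuples avoids the usual string-comparison trap, where "1.10" sorts before "1.9".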

Downloading from the documentation site

An even simpler option is to download directly from the documentation site https://pyvideotrans.com

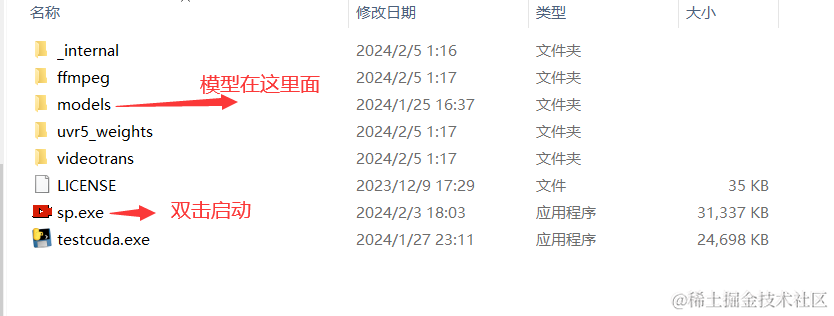

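After extracting, double-click sp.exe to open the software.

Extract it to a directory whose path contains only English letters or digits. Avoid Chinese characters and spaces in the path, otherwise odd problems may occur.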
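Here is a quick way to check a folder against this advice. The sketch accepts only paths made of ASCII characters with no whitespace. It is a stricter-than-necessary heuristic written for illustration, and not a check the software itself is known to perform.

```python
def is_safe_install_path(path: str) -> bool:
    """True if the path is pure ASCII and contains no whitespace."""
    # isascii() rejects Chinese (and any other non-ASCII) characters.
    return path.isascii() and not any(ch.isspace() for ch in path)
```

For example, `r"D:\pyvideotrans"` passes, while `r"D:\视频\pyvideotrans"` and `r"D:\My Tools\pyvideotrans"` do not.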

The extracted files look like this:

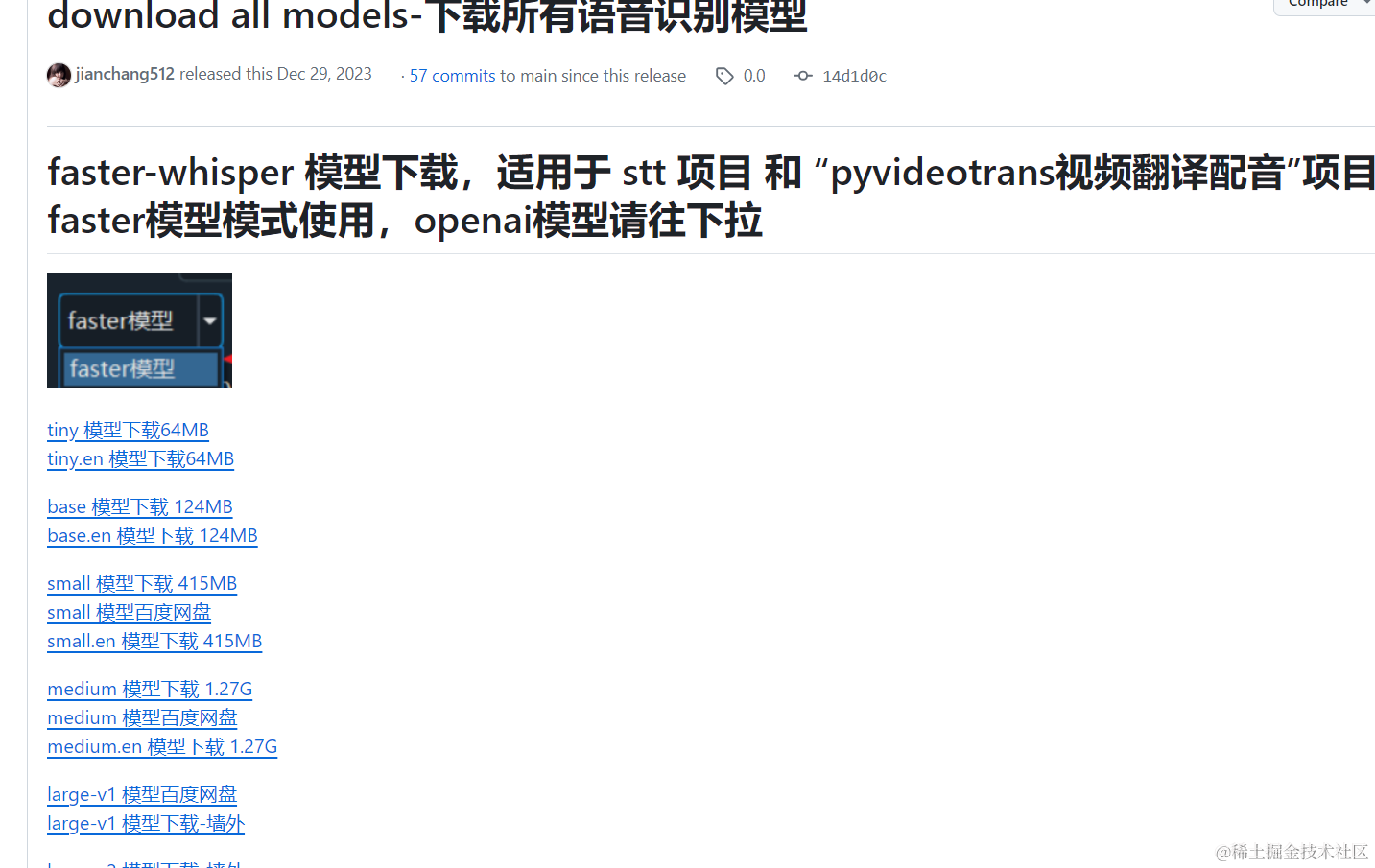

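Where to download models

The tiny model is bundled by default. It is the smallest and fastest model, and also the least accurate. If you need other models, download them from this page: https://github.com/jianchang512/stt/releases/tag/0.0

How to choose a translation channel

Once subtitles have been recognized, you may want them in another language. For example, you may want to embed Chinese subtitles in an English video. For that you need a translation channel.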
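Whichever channel you pick, only the subtitle text should be translated. The index and timing lines must stay untouched so that the subtitles still line up with the video. Below is a minimal sketch of that idea, where `translate` is a hypothetical stand-in for any channel's text translation function. For simplicity it joins multi-line subtitles into a single line before translating.

```python
import re
from typing import Callable

TIME_LINE = re.compile(r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}$")


def translate_srt(srt_text: str, translate: Callable[[str], str]) -> str:
    """Translate the text of each SRT cue, keeping index and timing lines as-is."""
    out = []
    # Cues are separated by blank lines (also matches \r\n\r\n).
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.splitlines()
        if len(lines) >= 2 and TIME_LINE.match(lines[1].strip()):
            text = " ".join(line.strip() for line in lines[2:])
            out.append("\n".join([lines[0], lines[1], translate(text)]))
        else:
            out.append(block)  # not a recognizable cue: leave it untouched
    return "\n\n".join(out) + "\n"
```

Passing `str.upper` as `translate` is a handy offline test: the timings come back unchanged and only the text is transformed.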

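Currently supported channels: Microsoft Translator, Google Translate, Baidu Translate, Tencent Translate, DeepL, ChatGPT, AzureGPT, Gemini Pro, DeepLx, OTT offline translation, FreeGoogle and FreeChatGPT.

FreeChatGPT translation

This is a free ChatGPT translation interface sponsored by apiskey.top. It is based on the 3.5 model. It needs no sk (API key) and no configuration: just select it and use it.

FreeGoogle translation: a reverse proxy for Google Translate that works without a proxy, but it limits the number of requests. It is recommended for beginners who don't know how to configure a proxy. Other users who want Google Translate should fill in a network proxy address.

DeepL translation: its quality is probably the best, even better than ChatGPT. Unfortunately, the paid version cannot be purchased in mainland China, and the free version is hard to call through the API. DeepLx is a tool for using DeepL for free, but a local deployment is practically unusable. A video has many subtitles and they are translated with multiple threads at once, so your IP is easily blocked or rate-limited. Consider deploying it on Tencent Cloud to reduce errors.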
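The IP blocking described above comes from sending many requests at once. A gentler pattern is to send subtitles in batches, one batch at a time, with a pause in between. In this sketch `translate_batch` is a hypothetical function that translates a list of lines in one request. The batch size and delay are illustrative values, not known DeepLx limits.

```python
import time
from typing import Callable, Iterator, List, Sequence


def batched(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def translate_in_batches(
    lines: Sequence[str],
    translate_batch: Callable[[List[str]], List[str]],
    batch_size: int = 20,
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Translate lines one batch at a time, pausing between requests."""
    results: List[str] = []
    for index, batch in enumerate(batched(lines, batch_size)):
        if index > 0:
            sleep(delay_seconds)  # throttle: no burst of parallel requests
        translated = translate_batch(batch)
        if len(translated) != len(batch):
            raise ValueError("translator returned a different number of lines")
        results.extend(translated)
    return results
```

This trades speed for reliability. A sequential loop is slower than multithreading, but it keeps the request rate predictable. The `sleep` parameter is injectable so the pacing can be tested without actually waiting.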

https://juejin.cn/user/4441682704623992/posts

Microsoft Translator: completely free and needs no proxy, but frequent use may still get your IP rate-limited.
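Free channels such as Microsoft Translator may throttle heavy use, so a caller can retry a failed request after a growing pause. The sketch below is a generic exponential-backoff helper, not code from pyvideotrans. Here `call` stands for any hypothetical function that performs one translation request. By default it retries only on `OSError`, which includes `urllib.error.URLError` and `ConnectionError`.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call()`, retrying on network-style errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait base_delay, then 2x, 4x, ... before the next try.
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

Backing off exponentially gives a rate limiter time to reset, instead of hammering it with immediate retries.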

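Google Translate: if you have a proxy and know what a proxy is and how to fill it in, Google Translate is the recommended first choice. It is free and the quality is excellent. Just enter the proxy address in the text box.

Tencent Translate: if you know nothing about proxies, don't bother with them. Apply for the free Tencent Translate API instead (see the Tencent Translate API application guide). The first 5 million characters each month are free.

Baidu Translate: you can also apply for the Baidu Translate API (see the Baidu Translate API application guide). Unverified accounts get 50,000 free characters per month. Accounts that have completed personal verification get 1 million free characters per month.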
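For reference, Baidu's general text translation API signs each request with an MD5 hash of `appid + q + salt + secret key`. The sketch below only builds the signed query URL. `appid` and `secret` are your own credentials, and the language codes are examples. This is not the code pyvideotrans uses, so check Baidu's current API documentation before relying on it.

```python
import hashlib
import random
from typing import Dict, Optional
from urllib.parse import urlencode

BAIDU_ENDPOINT = "https://fanyi-api.baidu.com/api/trans/vip/translate"


def baidu_params(q: str, appid: str, secret: str, src: str = "en", dst: str = "zh",
                 salt: Optional[str] = None) -> Dict[str, str]:
    """Build the query parameters, including the MD5 signature, for one request."""
    if salt is None:
        salt = str(random.randint(32768, 65536))
    # The signature is computed over the raw (not URL-encoded) text, as UTF-8.
    sign = hashlib.md5((appid + q + salt + secret).encode("utf-8")).hexdigest()
    return {"q": q, "from": src, "to": dst, "appid": appid, "salt": salt, "sign": sign}


def baidu_url(q: str, appid: str, secret: str, **kwargs: str) -> str:
    """Full GET URL for one translation request."""
    return BAIDU_ENDPOINT + "?" + urlencode(baidu_params(q, appid, secret, **kwargs))
```

A common mistake is signing the URL-encoded text rather than the raw text, which makes the server reject the signature.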

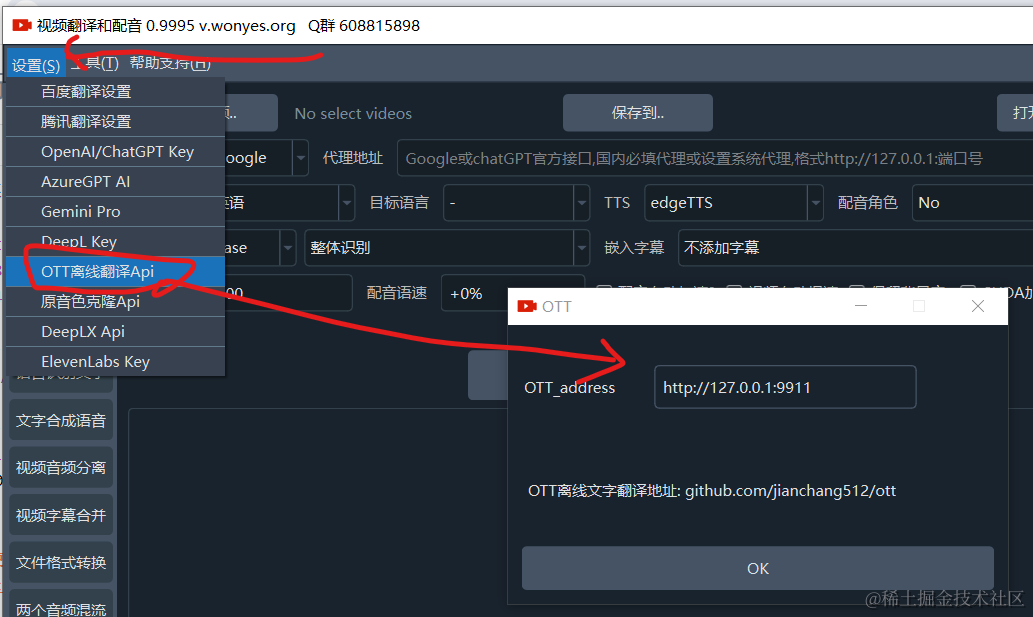

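Using OTT offline translation: if you're willing to tinker, you can deploy the free OTT offline translation service, available at https://github.com/jianchang512/ott . After deploying it, enter its address in the software under Menu > Settings > OTT offline translation.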

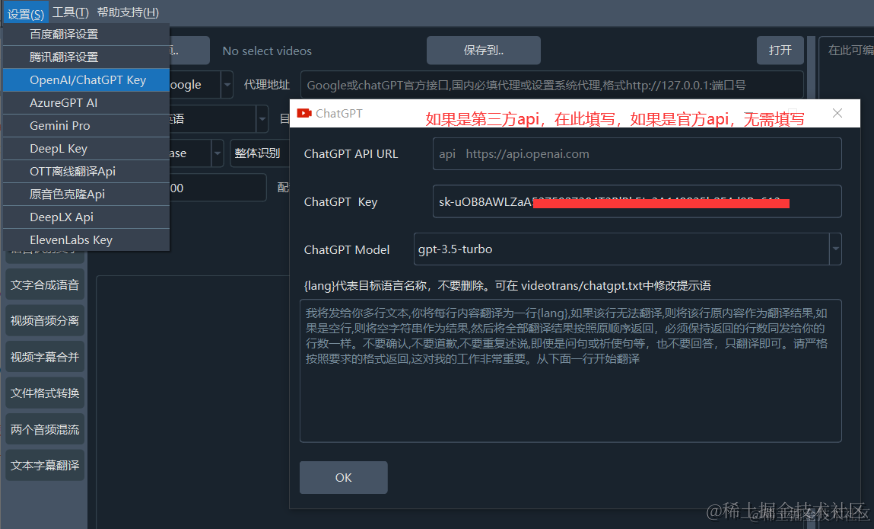

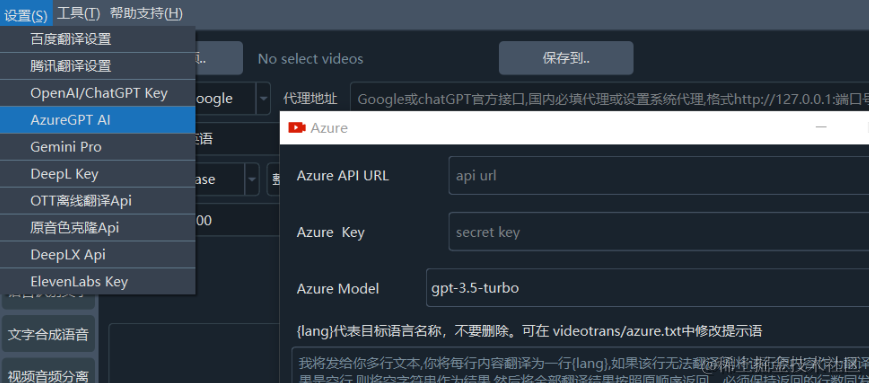

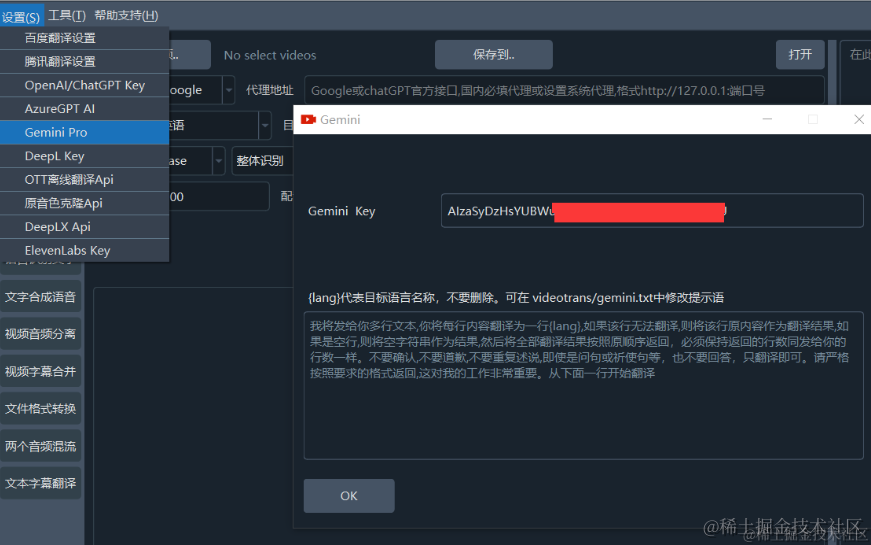

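Using AI translation: ChatGPT / Azure / Gemini

ChatGPT and AzureGPT require paid accounts; free accounts won't work. Once you have an account, open Menu > Settings > OpenAI/chatGPT key and enter your ChatGPT sk value (API key). AzureGPT and Gemini are configured under Menu > Settings in the same way.

Note: if you use the official ChatGPT API, leave "API URL" empty. If you use a third-party API, enter the API address provided by that third party.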
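The "API URL" rule boils down to how the request endpoint is built. With the field empty, requests go to OpenAI's official base URL; otherwise the third-party base URL replaces it. The sketch assumes, as is common for OpenAI-compatible services, that a third-party URL is a base ending in `/v1`, to which `/chat/completions` is appended. The model name and prompt are illustrative, not the ones pyvideotrans uses.

```python
import json
from typing import Dict, Optional, Tuple

OFFICIAL_BASE = "https://api.openai.com/v1"


def chat_endpoint(api_url: Optional[str]) -> str:
    """Empty API URL -> official endpoint; otherwise use the third-party base."""
    base = (api_url or "").strip().rstrip("/") or OFFICIAL_BASE
    return base + "/chat/completions"


def chat_request(api_url: Optional[str], api_key: str, text: str, target_language: str,
                 model: str = "gpt-3.5-turbo") -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, JSON body) for one translation request."""
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    body = {
        "model": model,  # illustrative model name
        "messages": [
            {"role": "system",
             "content": f"Translate the user's text into {target_language}. Reply with the translation only."},
            {"role": "user", "content": text},
        ],
    }
    return chat_endpoint(api_url), headers, json.dumps(body, ensure_ascii=False)
```

The three returned values can be passed to `urllib.request.Request(url, data=body.encode("utf-8"), headers=headers)` to send the request.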

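ChatGPT integration guide: how to quickly obtain and configure an API key and use it in software and tools https://juejin.cn/post/7342327642852999168

OpenAI's official ChatGPT API, Gemini and AzureGPT all require a proxy; without one they cannot be reached.

AzureGPT is also configured here.

Gemini is currently free. It works once you enter an API key and set up the proxy correctly.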

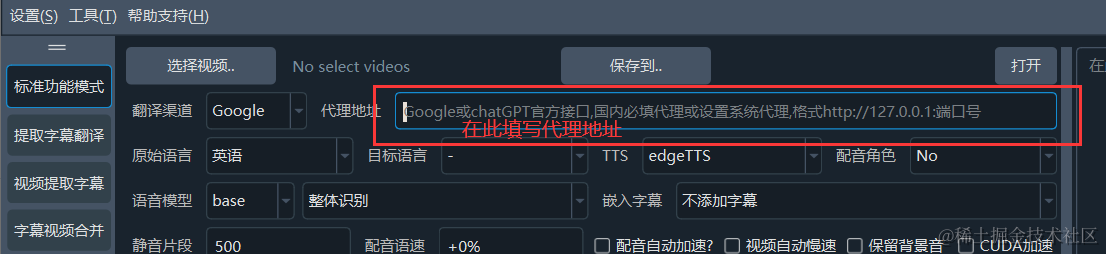

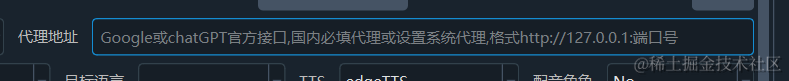

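What is a proxy, and is it required

If you want to use Google Translate, the official ChatGPT API, or Gemini/AzureGPT, a proxy is required. Enter a proxy address in the format http://127.0.0.1:port in the proxy address box. Note that the port must be an HTTP port, not a SOCKS port.

For example, one common proxy client uses http://127.0.0.1:10809, and another uses http://127.0.0.1:7890. If you use a proxy but don't know what to enter, look carefully in your proxy software (lower-left corner, upper-right area, or elsewhere) for a 4-5 digit number following the word "http". Then enter http://127.0.0.1:port.

If you don't know what a proxy is at all, I won't go into it here, for reasons you can probably guess; please look it up yourself.

Note: if you don't need a proxy, leave the address empty, but don't enter anything random. In particular, don't put an API address here.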
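The rules above can be captured in a small check. The value must be an `http://` URL with a host, a port and nothing after them. That rejects SOCKS addresses and API URLs pasted into the wrong box. This is an illustrative sketch, not validation the software is known to perform. `opener_with_proxy` shows how Python's standard `urllib.request.ProxyHandler` would route both HTTP and HTTPS traffic through such a proxy.

```python
import urllib.request
from urllib.parse import urlparse


def validate_proxy(value: str) -> str:
    """Return a normalized http://host:port proxy, or raise ValueError."""
    parsed = urlparse(value.strip())
    if parsed.scheme != "http":
        raise ValueError("use an http:// proxy address, not socks or https")
    # .port raises ValueError itself if the port is not a valid number.
    if not parsed.hostname or parsed.port is None:
        raise ValueError("expected the form http://127.0.0.1:port")
    if parsed.path not in ("", "/") or parsed.query:
        raise ValueError("this looks like an API URL, not a proxy address")
    return f"http://{parsed.hostname}:{parsed.port}"


def opener_with_proxy(value: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener that sends http and https traffic via the proxy."""
    proxy = validate_proxy(value)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

For example, `validate_proxy("http://127.0.0.1:7890")` is accepted, while `socks5://127.0.0.1:1080` and `https://api.openai.com/v1` both raise `ValueError`.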

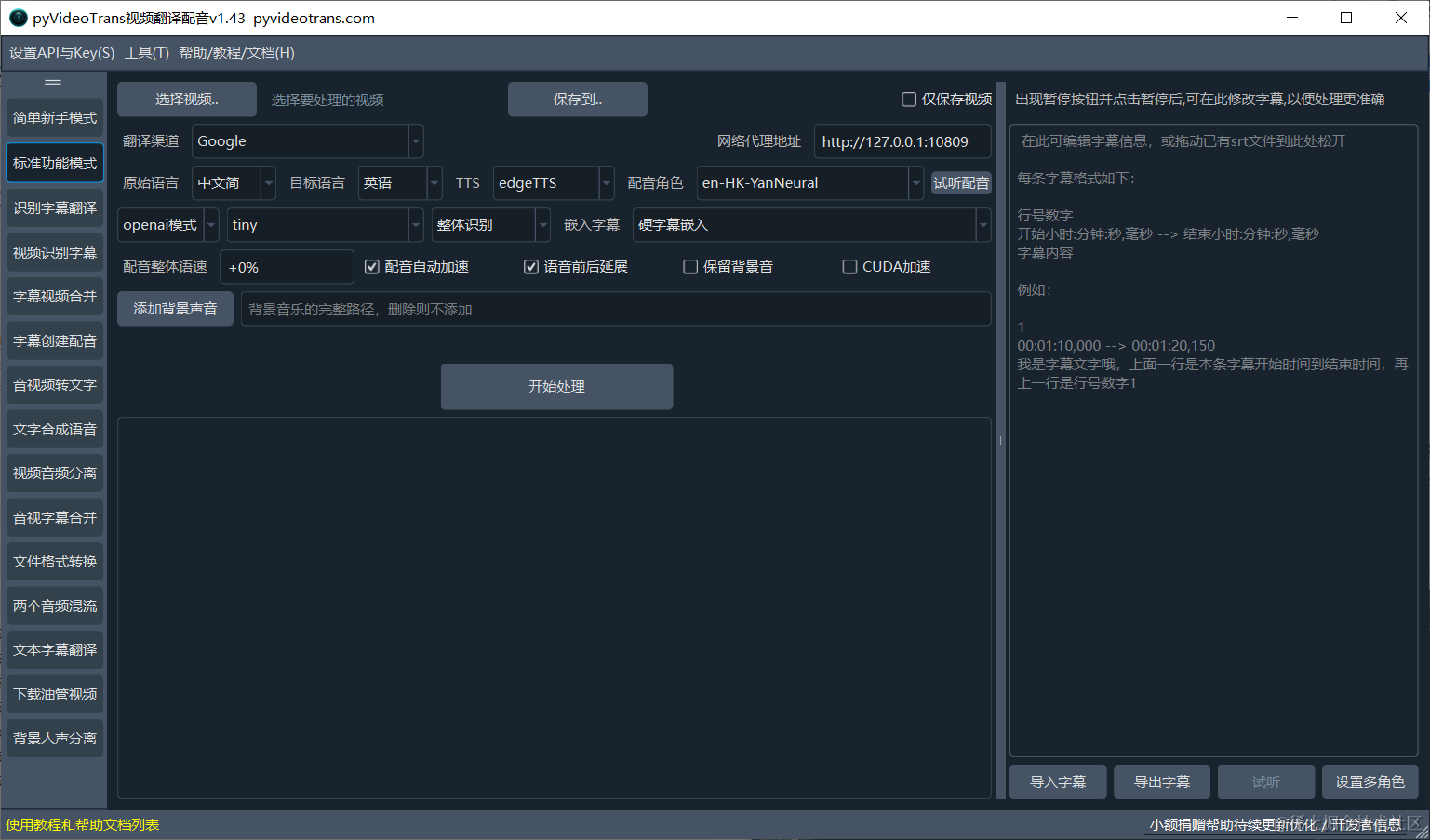

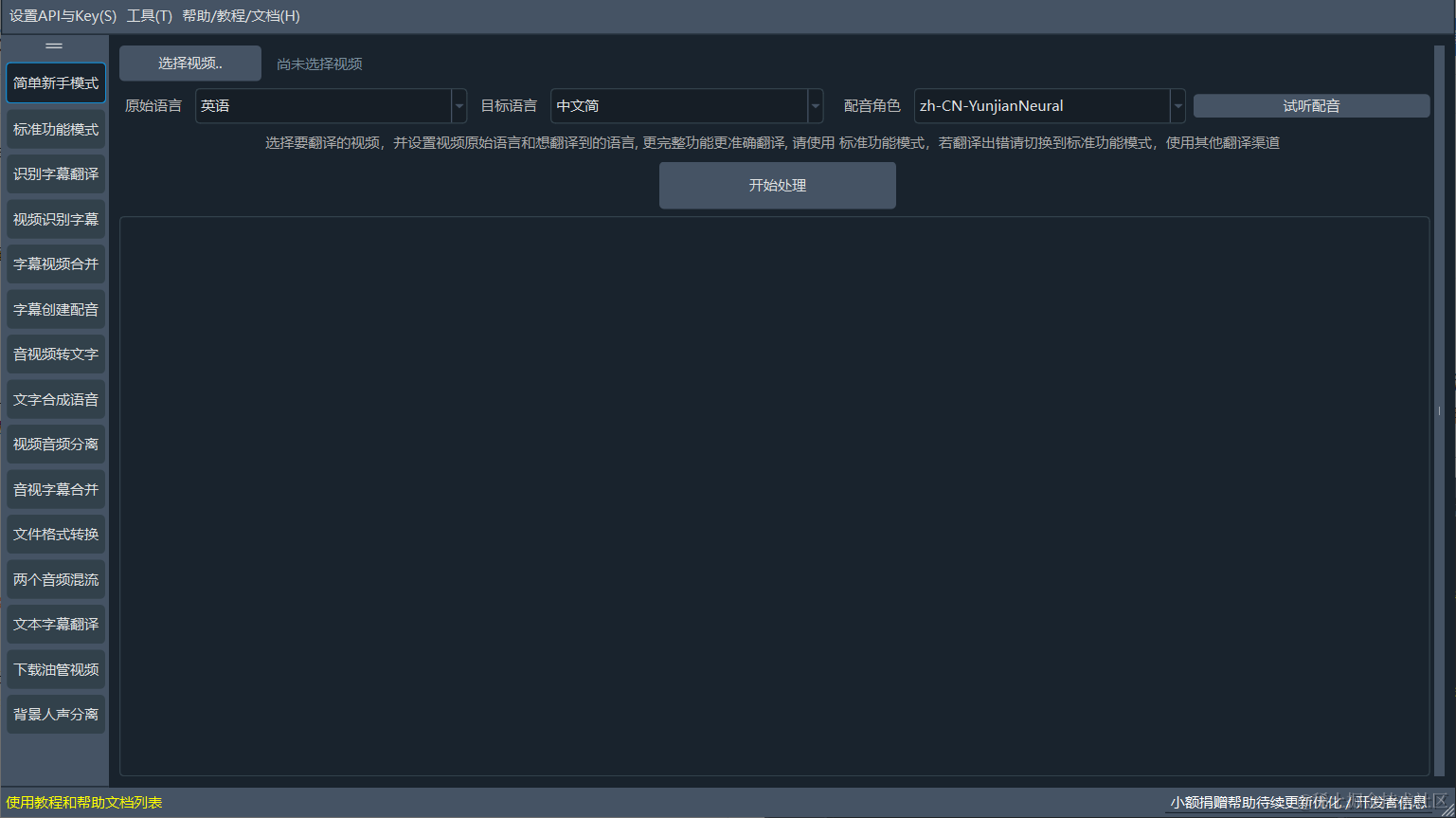

How to use it

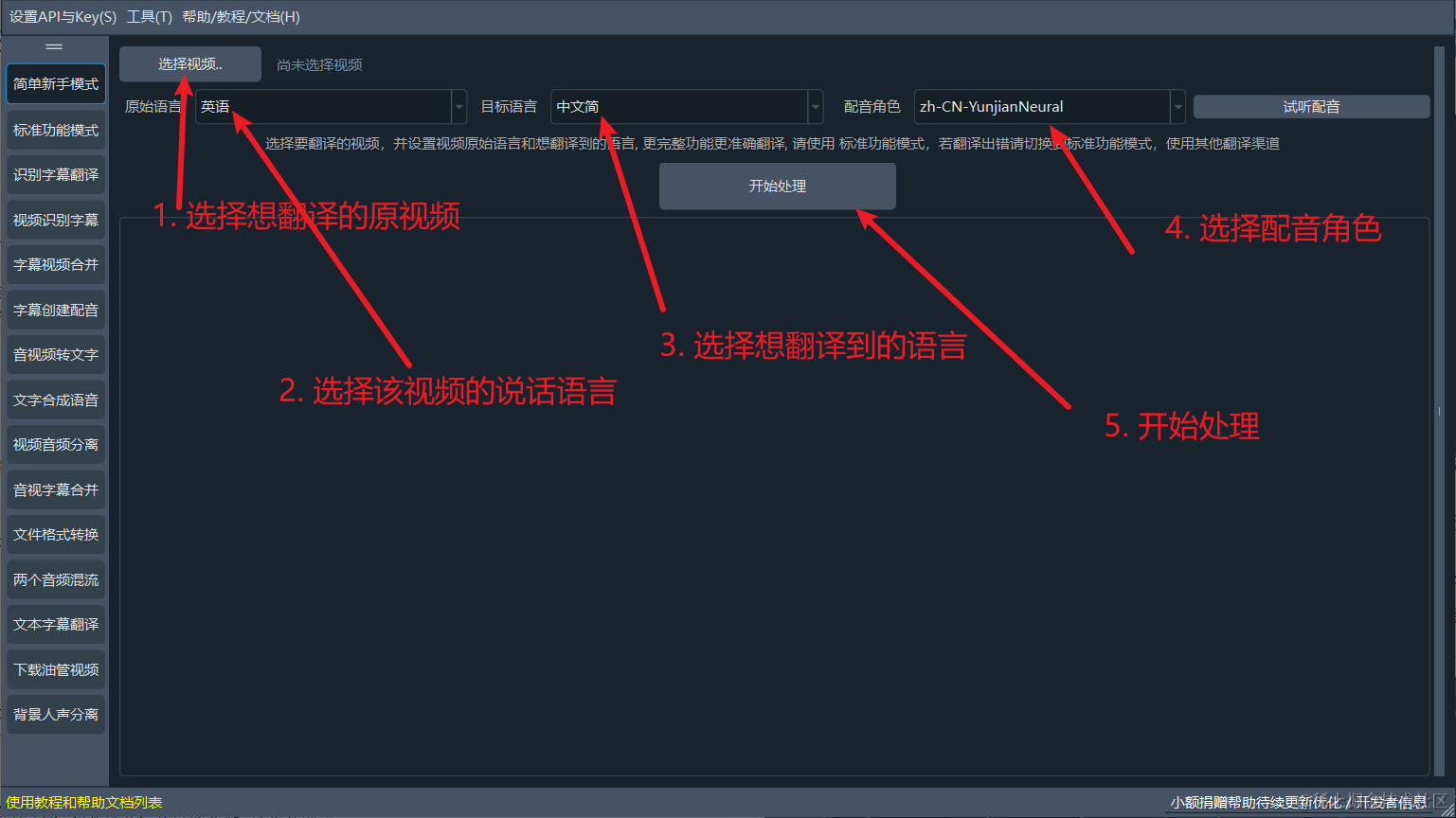

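Double-click sp.exe to open the software. The default interface is shown in the screenshot.

By default, the first option on the left, Simple Beginner Mode, is selected. Most options are preset in this mode, so beginners can try the tool quickly.

You can also choose Standard Mode, which allows full customization and runs the complete workflow of video translation, dubbing and subtitle embedding. The other buttons on the left are either parts of this workflow split out on their own, or other simple helper tools. Simple Beginner Mode is used here to demonstrate how to use it.

How to use CUDA acceleration

If you have an NVIDIA graphics card, you can set up the CUDA environment and then check "CUDA acceleration" for a large speedup. Setting it up involves quite a lot, so please refer to the dedicated tutorial.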
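Before enabling "CUDA acceleration", it helps to check whether the NVIDIA driver can see a GPU at all. The sketch below shells out to `nvidia-smi`, which is installed with the NVIDIA driver, and lists the GPU names. It is only a rough heuristic. A visible GPU does not prove that the CUDA and cuDNN libraries the software needs are installed. In a source deployment with PyTorch installed, `torch.cuda.is_available()` is the more direct check.

```python
import shutil
import subprocess
from typing import List


def nvidia_gpu_names() -> List[str]:
    """Return GPU names reported by nvidia-smi, or [] if none can be found."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []  # driver tools not on PATH: no usable NVIDIA GPU detected
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name", "--format=csv,noheader"],
            capture_output=True, text=True, timeout=10, check=True,
        )
    except (OSError, subprocess.SubprocessError):
        return []  # nvidia-smi failed or timed out
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

An empty list means CUDA acceleration is unlikely to work on this machine, so leave the option unchecked.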

How to dub with the voice from the original video

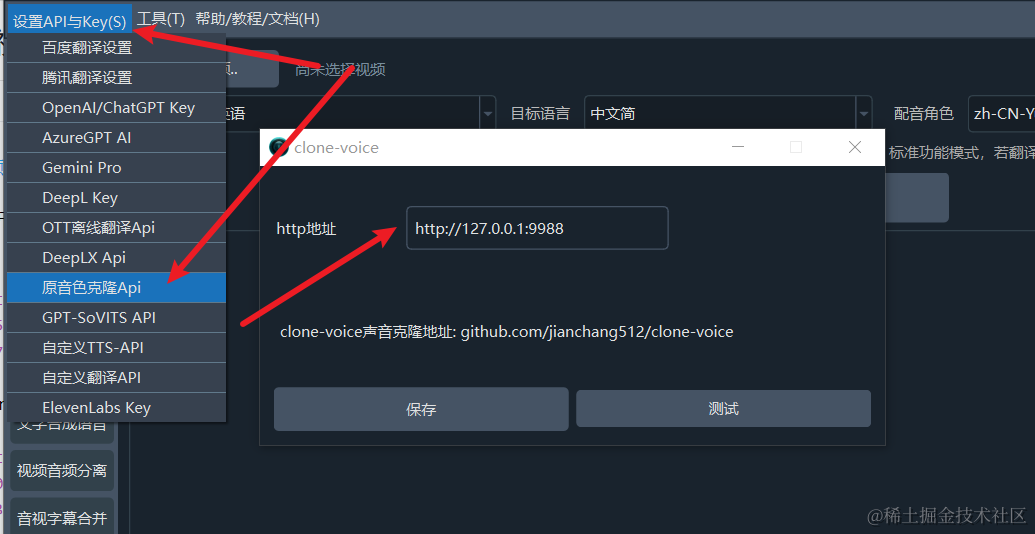

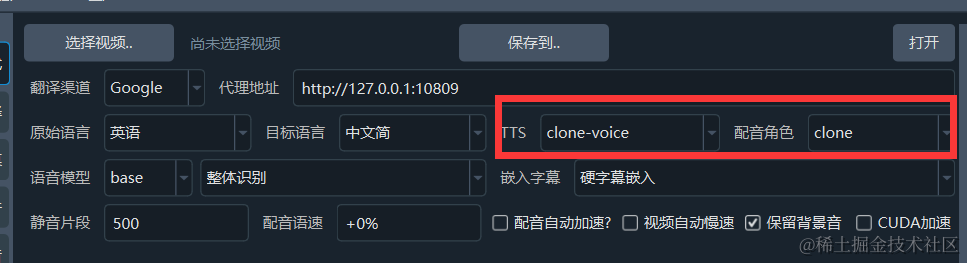

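First you need another open-source project, clone-voice: https://github.com/jianchang512/clone-voice . Install and deploy it and configure its models. Then enter that project's address in this software under Menu > Settings > Original voice clone API.

Next, set TTS to "clone-voice" and the dubbing role to "clone", and it is ready to use.

How to dub with GPT-SoVITS

The software now supports dubbing with GPT-SoVITS. After deploying GPT-SoVITS, start its API service, then enter its address in the video translation software under Settings menu > GPT-SOVITS.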
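Once the GPT-SoVITS API service is running, synthesizing speech is a single HTTP request to its address. The sketch below builds a GET URL. It uses the parameter names `text` and `text_language`, plus the optional `refer_wav_path`, `prompt_text` and `prompt_language`. These names come from the examples documented in GPT-SoVITS's `api.py` around early 2024, whose default port is 9880. Treat them as assumptions: newer GPT-SoVITS releases ship a different API (`api_v2.py`), so check the version you deploy.

```python
from typing import Optional
from urllib.parse import urlencode


def sovits_url(base: str, text: str, text_language: str = "zh",
               refer_wav_path: Optional[str] = None,
               prompt_text: Optional[str] = None,
               prompt_language: Optional[str] = None) -> str:
    """Build a GET URL for the older GPT-SoVITS api.py service.

    Parameter names are assumptions based on api.py's documented examples.
    """
    params = {"text": text, "text_language": text_language}
    # Without a reference clip, api.py is documented to use the default
    # reference audio given when the service was started.
    if refer_wav_path is not None:
        params["refer_wav_path"] = refer_wav_path
        if prompt_text is not None:
            params["prompt_text"] = prompt_text
        if prompt_language is not None:
            params["prompt_language"] = prompt_language
    return base.rstrip("/") + "/?" + urlencode(params)
```

Fetching `urllib.request.urlopen(sovits_url("http://127.0.0.1:9880", "你好")).read()` would return the synthesized audio bytes, which can then be written to a file.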

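For details, see these 2 articles:

Calling GPT-SoVITS from other software to synthesize speech from text https://juejin.cn/post/7341401110631350324

Improving and using the GPT-SoVITS project's API https://juejin.cn/post/7343138052973297702

What to do if you run into problems

First, read the project homepage https://github.com/jianchang512/pyvideotrans carefully. Most questions are answered there.

Next, visit the documentation site https://pyvideotrans.com

If that still doesn't solve it, open an issue here: https://github.com/jianchang512/pyvideotrans/issues . The project homepage https://github.com/jianchang512/pyvideotrans also lists a QQ group you can join.

I recommend following my WeChat official account (pyvideotrans). It carries my own original tutorials on using this software, answers to common questions, and related tips. Because my time is limited, tutorials for this project are only published on this Juejin blog and the WeChat official account; GitHub and the documentation site are not updated often.

Search for the official account in WeChat Search: pyvideotrans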

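Is it free, and are there any limits

The project is open source under GPL-v3 and free to use. It has no built-in paid features and no restrictions (you must comply with Chinese law), so you can use it freely. Tencent Translate, Baidu Translate, DeepL, ChatGPT and AzureGPT do charge, of course, but that's none of my business: they don't give me a cut.

Will the project die

No project lives forever; there are only long-lived and short-lived ones, and projects run purely on passion may die even sooner. If you'd like it to die more slowly and live longer, with effective ongoing maintenance and optimization along the way, consider donating to extend its life a little.

Can I modify the source code

The source code is fully public. You can deploy it locally, or modify it and use your modified version. Note, however, that the code is licensed under GPL-v3. If you integrate it into your own project, your project must also be open source to comply with the license.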