This is a free and open-source project. Because resources are limited, documentation updates may lag behind the software; where the two differ, refer to the actual software interface.

This is a powerful open-source video translation/speech transcription/speech synthesis software dedicated to seamlessly converting videos from one language to another, including dubbed audio and subtitles.

Core Features at a Glance

- Fully Automatic Video/Audio Translation: Intelligently recognizes and transcribes speech in audio/video, generates source language subtitle files, translates them into target language subtitle files, performs dubbing, and finally merges the new audio and subtitles with the original video in one go.

- Speech Transcription/Audio/Video to Subtitles: Batch transcribe human speech in video or audio files into SRT subtitle files with accurate timestamps.

- Speech Synthesis/Text-to-Speech (TTS): Utilize various advanced TTS channels to generate high-quality, natural-sounding voiceovers for your text or SRT subtitle files.

- SRT Subtitle File Translation: Supports batch translation of SRT subtitle files, preserving original timestamps and formatting, and offers multiple bilingual subtitle styles.

- Script Alignment & Timing: Convert text scripts into precisely timed SRT subtitles based on audio/video and existing transcripts.

- Real-time Speech-to-Text: Supports real-time microphone listening and converts speech to text.

How the Software Works

Before you begin, it's crucial to understand the core workflow of this software:

First, the human speech in the audio or video is transcribed into a subtitle file via a [Speech Recognition Channel]. Then, this subtitle file is translated into the specified target language subtitle via a [Translation Channel]. Next, this translated subtitle is used to generate dubbed audio using the selected [Dubbing Channel]. Finally, the subtitles, audio, and original video are embedded and aligned to complete the video translation process.

- Can Process: Any audio/video containing human speech, regardless of whether it has embedded subtitles.

- Cannot Process: Videos containing only background music and hardcoded subtitles (burned-in text), but no human speech. This software also cannot directly extract hardcoded subtitles from the video frames.
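The four-stage workflow described above can be sketched as a small pipeline. This is only an illustration of the order of operations, not the software's actual code: the `Subtitle` type, the `translate_video` function, and the four callables passed into it are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Subtitle:
    start_ms: int
    end_ms: int
    text: str


def translate_video(
    media_path: str,
    recognize: Callable[[str], List[Subtitle]],        # Speech Recognition Channel
    translate: Callable[[List[Subtitle]], List[Subtitle]],  # Translation Channel
    dub: Callable[[List[Subtitle]], str],              # Dubbing Channel
    merge: Callable[[str, List[Subtitle], str], str],  # final embedding step
) -> str:
    """Run the four stages in order and return the output file path."""
    source_subs = recognize(media_path)
    if not source_subs:
        # Matches the "Cannot Process" case: no human speech, nothing to do.
        raise ValueError("no human speech found; nothing to translate")
    target_subs = translate(source_subs)
    dubbed_audio = dub(target_subs)
    return merge(media_path, target_subs, dubbed_audio)
```

Because every later stage consumes the output of the one before it, an error in recognition propagates into the translation and the dubbing.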

Download & Installation

1.1 Windows Users (Pre-packaged Version)

We provide a ready-to-use pre-packaged version for Windows 10/11 users, requiring no complicated configuration. Download, extract, and run.

Click to download the Windows pre-packaged version, extract and run

Extraction Notes

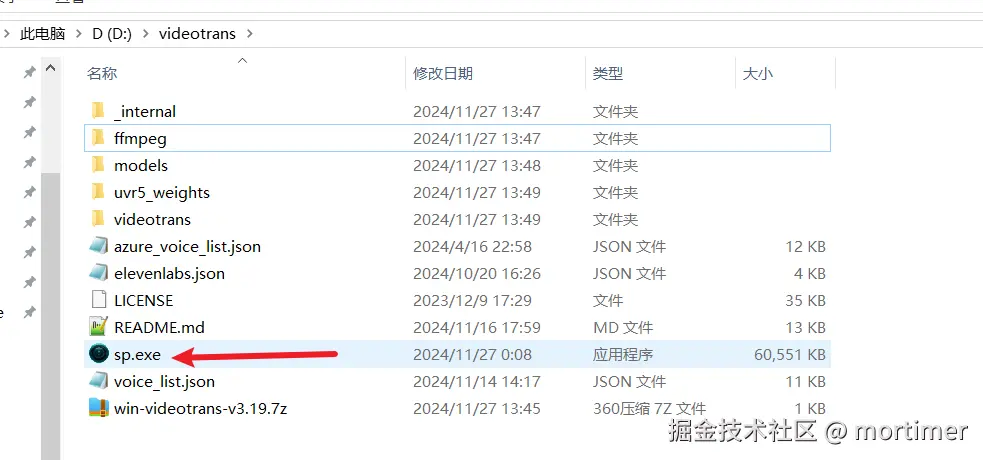

Do NOT double-click sp.exe directly inside the compressed archive to run it, as this will inevitably cause errors. Incorrect extraction is the most common reason for software startup failure. Please strictly follow these rules:

- Avoid Administrator Permission Paths: Do NOT extract to system folders requiring special permissions, such as C:/Program Files or C:/Windows.

- Highly Recommended: Create a new folder with only English letters or numbers (e.g., D:/videotrans) on a non-system drive like D: or E:, then extract the compressed archive into this folder.
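The extraction rules above can be checked mechanically before unpacking. The following is a minimal sketch, not part of the software: `check_extract_dir` is a hypothetical helper, the list of protected folders is an assumption that extends the two examples given above, and C: is assumed to be the system drive.

```python
import re
from pathlib import PureWindowsPath
from typing import List

# Assumption: folders that typically need administrator permissions.
PROTECTED_DIRS = {"program files", "program files (x86)", "windows"}
SAFE_NAME = re.compile(r"[A-Za-z0-9]+")


def check_extract_dir(path: str) -> List[str]:
    """Return a list of problems with an extraction folder (empty if none)."""
    p = PureWindowsPath(path)
    folders = p.parts[1:] if p.anchor else p.parts  # skip the drive part
    problems = []
    if p.drive.upper() == "C:":
        problems.append("on the C: drive (assumed to be the system drive)")
    for name in folders:
        if name.lower() in PROTECTED_DIRS:
            problems.append(f"inside protected folder: {name}")
        elif not SAFE_NAME.fullmatch(name):
            problems.append(f"folder name is not letters/digits only: {name}")
    return problems


print(check_extract_dir("D:/videotrans"))
print(check_extract_dir("C:/Program Files/videotrans"))
```

An empty list means the folder follows all of the recommendations; each string in a non-empty list names one rule that the path breaks.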

Launching the Software

After extraction, navigate into the folder, find the sp.exe file, and double-click to run it.

The first launch requires loading many modules and may take tens of seconds. Please be patient.

1.2 macOS / Linux Users (Source Code Deployment)

macOS and Linux users need to deploy the software from source code.

- Source Code Repository: https://github.com/jianchang512/pyvideotrans

- Detailed Deployment Tutorials:

Software Interface & Core Functions

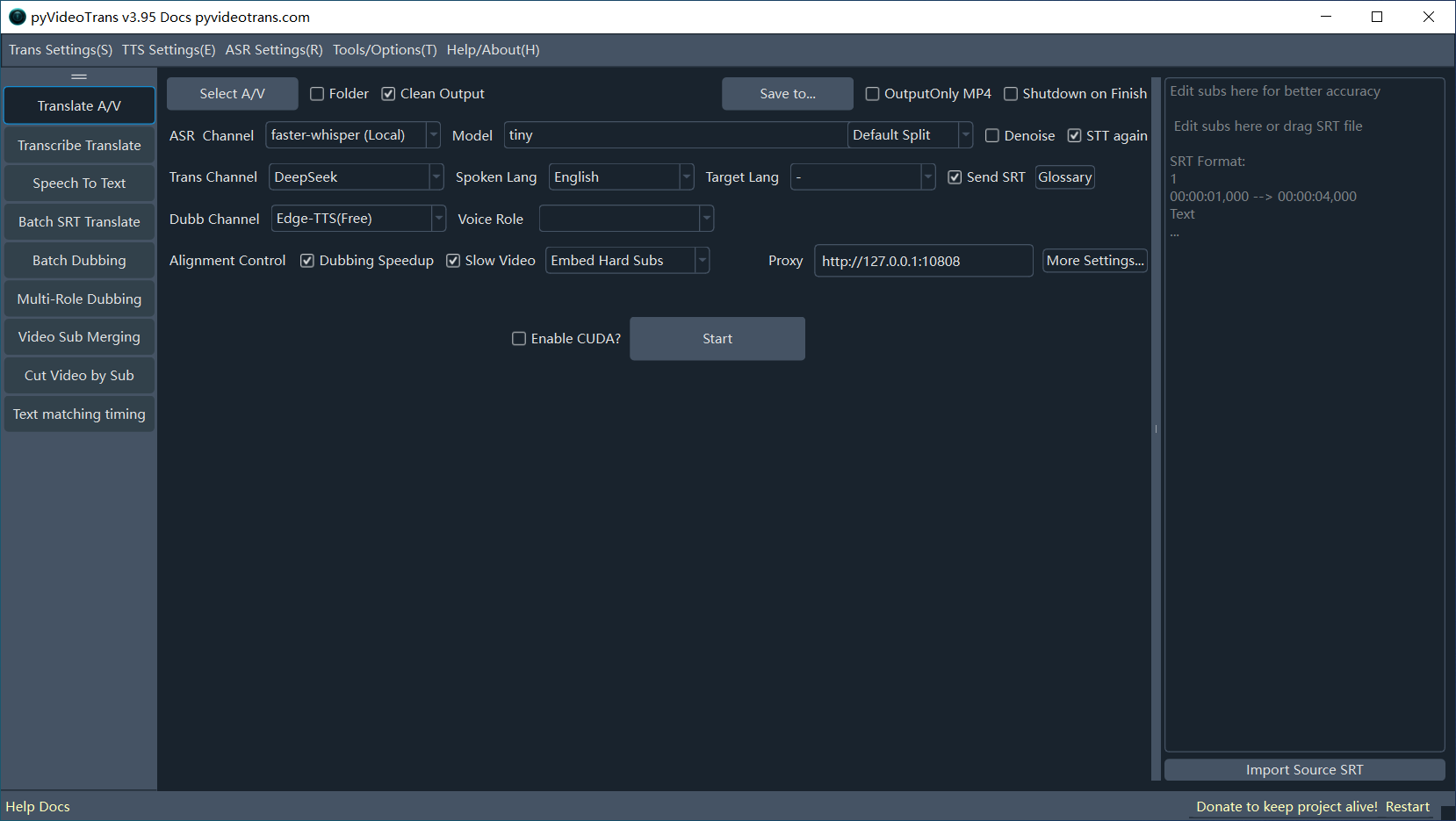

After launching the software, you will see the main interface below. Click Set More Parameters to display detailed configurations.

- Left Function Area: Switch between the software's main functional modules, such as Translate Video & Audio, Transcribe & Translate Subtitles, Audio/Video to Subtitles, Batch Translate SRT Subtitles, Batch Dubbing for Subtitles, Multi-role Dubbing for Subtitles, Audio/Video/Subtitle Merge, Clip Video by Subtitles, Script Matching, etc.

- Top Menu Bar: For global configuration.

  - Translation Settings: Configure API Keys and related parameters for various translation channels (e.g., OpenAI, Azure, DeepSeek).

  - TTS Settings: Configure API Keys and related parameters for various dubbing channels (e.g., OpenAI TTS, Azure TTS).

  - Speech Recognition Settings: Configure API Keys and parameters for speech recognition channels (e.g., OpenAI API, Alibaba ASR).

  - Tools/Options: Contains various advanced options and auxiliary tools, such as subtitle format adjustment, video merging, voice separation, etc.

  - Help/About: View software version information, documentation, and community links.

Main Function: Translate Video and Audio

The software opens by default to the "Translate Video and Audio" workspace, which is its core function. The following will guide you step-by-step through a complete video/audio translation task.

Line 1: Select Video to Translate

Supported video formats: mp4/mov/avi/mkv/webm/mpeg/ogg/mts/ts

Supported audio formats: wav/mp3/m4a/flac/aac
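When preparing a batch of files, it can help to filter them by the supported formats listed above first. A minimal sketch follows; `media_kind` is a hypothetical helper, and it assumes that the format is determined by the file extension alone.

```python
from pathlib import Path

# Extensions copied from the supported-format lists above.
VIDEO_EXTS = {"mp4", "mov", "avi", "mkv", "webm", "mpeg", "ogg", "mts", "ts"}
AUDIO_EXTS = {"wav", "mp3", "m4a", "flac", "aac"}


def media_kind(filename: str) -> str:
    """Classify a file as 'video', 'audio', or 'unsupported' by extension."""
    ext = Path(filename).suffix.lower().lstrip(".")
    if ext in VIDEO_EXTS:
        return "video"
    if ext in AUDIO_EXTS:
        return "audio"
    return "unsupported"


files = ["Talk.MP4", "song.flac", "notes.txt"]
print({name: media_kind(name) for name in files})
```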

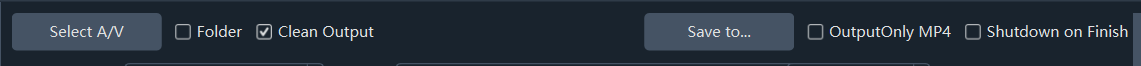

- Select Audio or Video: Click this button to select one or multiple video/audio files for translation (hold Ctrl for multiple selection).

- Folder: Check this option to batch process all videos within an entire folder.

- Clean Generated: Check this if you need to re-process the same video (instead of using cache).

- Output to..: By default, translated files are saved to the _video_out folder in the original video's directory. Click this button to set a separate output directory for translated videos.

- Shutdown After Completion: Automatically shuts down the computer after processing all tasks, suitable for large-scale, long-duration tasks.

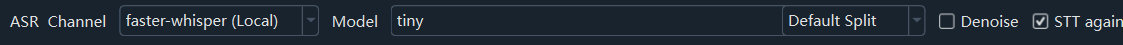

Line 2: Speech Recognition Channel

Speech Recognition: Used to transcribe speech in audio or video into subtitle files. The quality of this step directly determines subsequent results. Supports over ten different recognition methods.

- faster-whisper (local): A local model (downloaded online on first run) offering good speed and quality. If you have no special needs, choose it. It comes in more than ten models of different sizes. The smallest, fastest, and most resource-efficient model is tiny, but its accuracy is very low and it is not recommended. The best-performing models are large-v2 and large-v3, which are the recommended choice. Models whose names end in .en or start with distil- only support English speech.

- openai-whisper (local): Similar to faster-whisper but somewhat slower; accuracy might be slightly higher. The large-v2/large-v3 models are again recommended.

- Alibaba FunASR (local): Alibaba's local recognition model, with good support for Chinese. If your original video contains Chinese speech, you can try it. It also requires an online model download on first run.

- Default Segmentation | LLM Segmentation: You can choose default segmentation or use a Large Language Model (LLM) for intelligent segmentation and punctuation optimization of the recognized text.

- Secondary Recognition: When dubbing is selected and "Embed Single Subtitle" is chosen, selecting this runs speech recognition again on the dubbed audio file after dubbing is complete, generating relatively concise subtitles to embed in the video so that subtitles and dubbing align precisely.

Additionally, various online APIs and local models are supported, such as ByteDance Volcano Subtitle Generation, OpenAI Speech Recognition, Gemini Speech Recognition, Alibaba Qwen3-ASR Speech Recognition, etc.
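The naming rule for English-only models described above is easy to get wrong when switching source languages. The sketch below encodes it as a check; `is_english_only` and `model_supports` are hypothetical helpers, and the two-letter language codes such as "en" and "zh" are an assumption about how languages are identified.

```python
def is_english_only(model_name: str) -> bool:
    """True for models named like 'small.en' or 'distil-large-v3'."""
    name = model_name.lower()
    return name.endswith(".en") or name.startswith("distil-")


def model_supports(model_name: str, language_code: str) -> bool:
    """Check a model against the language spoken in the source media."""
    if is_english_only(model_name):
        return language_code.lower().startswith("en")
    return True  # multilingual models such as large-v2 / large-v3


for model in ("tiny.en", "distil-large-v3", "large-v3"):
    print(model, "usable for Chinese speech:", model_supports(model, "zh"))
```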

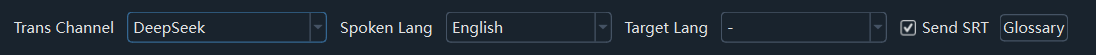

Line 3: Translation Channel

Translation Channel: The translation channel is used to translate the transcribed original language subtitle file into the target language subtitle file. It includes over a dozen built-in translation channels.

- Free Traditional Translation: Google Translate (requires proxy), Microsoft Translator (no proxy needed), DeepLX (requires self-deployment).

- Paid Traditional Translation: Baidu Translate, Tencent Translate, Alibaba Machine Translation, DeepL.

- AI Intelligent Translation: OpenAI ChatGPT, Gemini, DeepSeek, Claude, Zhipu AI, Silicon Flow, 302.AI, etc. You must enter your own API keys (SK) in the corresponding channel settings panel under Menu - Translation Settings.

- Compatible AI/Local Model: Also supports self-deployed local large models. Just choose the "Compatible AI/Local Model" channel and fill in the API address in Menu - Translation Settings - Local Large Model Settings.

- Source Language: Refers to the language spoken by people in the original video. Must be selected correctly. If unsure, choose auto.

- Target Language: The language you want the audio/video to be translated into.

- Translation Glossary: Used for AI translation, sending a glossary to the AI.

- Send Complete Subtitle: Used for AI translation, sending the subtitle with line numbers and timestamps to the AI.
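The difference that "Send Complete Subtitle" makes can be illustrated by the two payload shapes below. This is a sketch under assumptions: the `Cue` type and both functions are hypothetical, the complete form follows the standard SRT block layout, and the exact text the software sends to the AI is not documented here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cue:
    index: int
    start: str  # SRT timestamp, e.g. "00:00:01,000"
    end: str
    text: str


def text_only_payload(cues: List[Cue]) -> str:
    """One line of text per cue; numbers and timestamps are left out."""
    return "\n".join(c.text.replace("\n", " ") for c in cues)


def complete_payload(cues: List[Cue]) -> str:
    """Full SRT blocks: line number, timestamp range, then the text."""
    return "\n\n".join(
        f"{c.index}\n{c.start} --> {c.end}\n{c.text}" for c in cues
    )


cues = [
    Cue(1, "00:00:01,000", "00:00:02,000", "Hello"),
    Cue(2, "00:00:02,500", "00:00:04,000", "World"),
]
print(text_only_payload(cues))
print(complete_payload(cues))
```

With the complete form, each translated line stays tied to its number and timestamp, so a reply can be matched back to the right cue even if the model merges or splits sentences.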

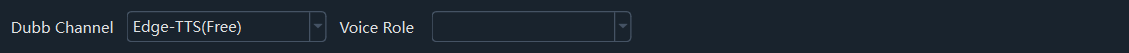

Line 4: Dubbing Channel

Dubbing Channel: The translated subtitle file will be dubbed using the channel specified here. Supports online dubbing APIs such as OpenAI TTS / Alibaba Qwen-TTS / Edge-TTS / Elevenlabs / ByteDance Volcano Speech Synthesis / Azure-TTS / Minimaxi, etc. Also supports locally deployed open-source TTS models like IndexTTS2 / F5-TTS / CosyVoice / ChatterBox / VoxCPM, etc. Among them, Edge-TTS is a free dubbing channel, ready to use out of the box. Channels that require configuration need the relevant information filled in under Menu -- TTS Settings -- Corresponding Channel Panel.

- Voice Role: Each dubbing channel generally has multiple voice actors to choose from. After selecting the Target Language, you can then select the voice role.

- Preview Dubbing: After selecting a voice role, click this to preview the sound effect of the current role.

Line 5: Synchronization, Alignment & Subtitles

Because speaking speeds differ between languages, the dubbed audio duration may not match the original video. Adjustments can be made here. These options mainly handle the case where the dubbed audio is longer than the original, to avoid overlapping speech or the video ending before the audio. Nothing is done when the dubbed audio is shorter than the original.

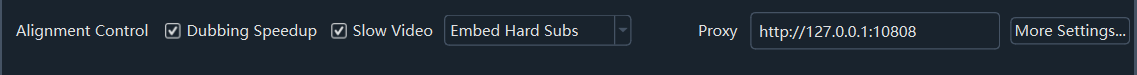

- Speed Up Dubbing: If a dubbed segment is longer than the original audio segment, speed up the dubbing to match the original duration.

- Slow Down Video: Similarly, when a dubbed segment is longer than the video, slow down the playback speed of that video segment to match the dubbing duration. (If selected, processing will be time-consuming, generating many intermediate segments. To minimize quality loss, the overall file size will be several times larger than the original video.)

- Do Not Embed Subtitles: Only replace the audio, do not add any subtitles.

- Embed Hard Subtitles: Permanently "burn" subtitles into the video frames. Cannot be turned off; subtitles will be displayed when played anywhere.

- Embed Soft Subtitles: Embed subtitles as an independent track into the video. Players can choose to turn them on/off. Subtitles cannot be displayed when played on web pages.

- (Dual): Each subtitle consists of two lines: the original language subtitle and the target language subtitle.

- Network Proxy: For users in Mainland China, using foreign services like Google, Gemini, OpenAI, etc., requires a proxy. If you have VPN services and know the proxy port number, you can fill it in here, in a form like http://127.0.0.1:7860.
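The arithmetic behind Speed Up Dubbing and Slow Down Video can be sketched as follows. Both functions are hypothetical, and the `max_factor` cap is an assumption added for illustration; the limits the software actually applies are not stated here.

```python
def dubbing_speed_factor(original_ms: int, dubbed_ms: int,
                         max_factor: float = 2.0) -> float:
    """Playback-rate multiplier that makes the dubbing fit the original slot.

    Returns 1.0 when the dubbing is already short enough, because shorter
    dubbing is left untouched. max_factor is a hypothetical cap.
    """
    if original_ms <= 0:
        raise ValueError("original_ms must be positive")
    if dubbed_ms <= original_ms:
        return 1.0
    return min(dubbed_ms / original_ms, max_factor)


def video_stretch_factor(original_ms: int, dubbed_ms: int) -> float:
    """Factor by which the video segment's duration must grow instead."""
    if original_ms <= 0:
        raise ValueError("original_ms must be positive")
    if dubbed_ms <= original_ms:
        return 1.0
    return dubbed_ms / original_ms


# A 2.0 s original segment whose dubbing came out at 3.0 s.
print(dubbing_speed_factor(2000, 3000))
print(video_stretch_factor(2000, 3000))
```

Speeding up the audio changes how the voice sounds, while stretching the video keeps the voice natural at the cost of extra processing and a larger output file.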

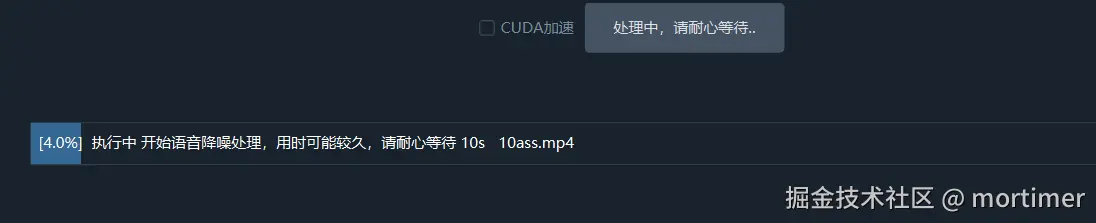

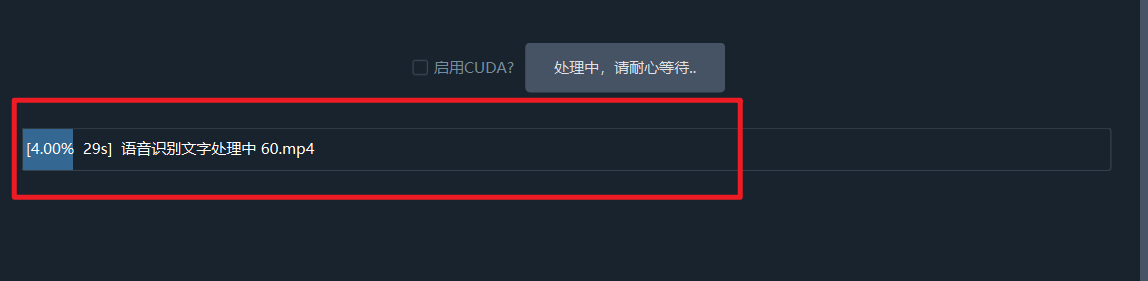

Line 6: Start Execution

- CUDA Acceleration: On Windows and Linux, if you have an NVIDIA graphics card and have correctly installed the CUDA environment, be sure to check this option. It can increase speech recognition speed by several times or even dozens of times.

If you have multiple NVIDIA graphics cards, you can open Menu -- Tools -- Advanced Options -- General Settings and select 'Multi-GPU Mode', which will attempt to use multi-GPU parallel processing.

After all settings are complete, click the [Start Execution] button.

If multiple audio/video files are selected for translation at once, they are processed concurrently, with their stages interleaved, and without pausing in between.

When only one video is selected at a time, after speech transcription is complete, a separate subtitle editing window will pop up. You can modify the subtitles here for more accurate subsequent processing.

1st Modification Opportunity: After the speech recognition stage completes, a subtitle editing window pops up.

2nd Modification Opportunity: After the subtitle translation stage completes, a window for modifying subtitles and voice roles pops up. In this window, you can set different voice roles for each speaker and even specify a unique voice role for each subtitle line.

3rd Modification Opportunity: After dubbing is complete, you can check again or re-dub each subtitle line.

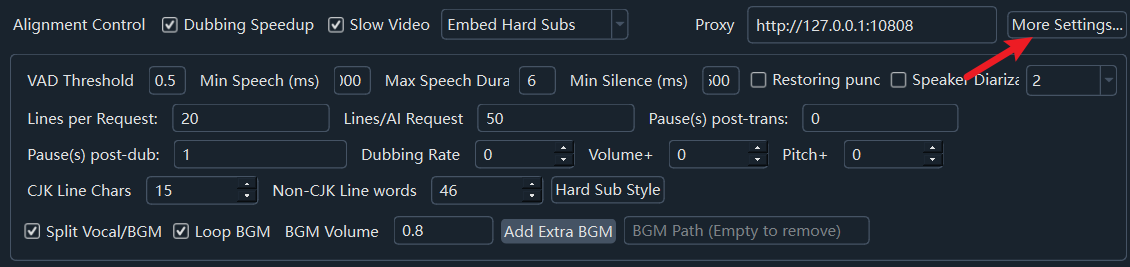

Line 7: Set More Parameters

If you want more fine-grained control, such as speech rate, volume, characters per subtitle line, noise reduction, speaker recognition, etc., click Set More Parameters. The following options then become available:

- Noise Reduction: If selected, will download Alibaba's model from modelscope.cn online before speech recognition to eliminate noise from the audio, improving recognition accuracy.

- Recognize Speaker: If selected, the software attempts to identify and distinguish speakers after speech recognition ends (accuracy is limited). The number that follows is the preset number of speakers to identify; specifying it when known in advance improves accuracy. The default is unlimited. In Advanced Options, you can switch speaker models (built-in, Alibaba cam++, pyannote, etc.).

- Dubbing Speech Rate: Default 0. If set to 50, it means the speech rate increases by 50%. -50 means it decreases by 50%.

- Volume +: Also default 0. Setting to 50 means volume increases by 50%. -50 means it decreases by 50%.

- Pitch +: Default 0. 20 means pitch increases by 20Hz, becoming sharper. Conversely, -20 decreases by 20Hz, becoming deeper.

- Speech Threshold: Represents the minimum probability for an audio segment to be considered speech. VAD calculates a speech probability for each audio segment. Parts exceeding this threshold are considered speech; otherwise, considered silence or noise. Default 0.5. Lower values are more sensitive but may mistake noise for speech.

- Minimum Speech Duration (ms): Limits the minimum duration of a speech segment. If you selected voice cloning, keep this value >=3000.

- Maximum Speech Duration (s): Limits the maximum length of a single speech segment. Forces segmentation when exceeding this duration. Enter a number, unit is seconds. Default 6 seconds. Do not exceed 30 seconds.

- Silence Split Duration (ms): At the end of speech, waits for silence time to reach this value before segmenting the speech segment. Enter a number, unit ms. Default 500ms, meaning segmentation only occurs at silence segments greater than this value.

- Traditional Translation Channel Lines per Batch: Number of subtitle lines sent per batch for traditional translation channels.

- AI Translation Channel Lines per Batch: Number of subtitle lines sent per batch for AI translation channels.

- Send Complete Subtitle: Whether to send complete subtitle format content when using AI translation channels.

- Pause After Translation (s): Number of seconds to pause after each translation request, used to limit request frequency.

- Pause After Dubbing (s): Number of seconds to pause after each dubbing request, used to limit request frequency.

- CJK Single Line Characters: When embedding subtitles in video, the maximum number of characters per line for Chinese, Japanese, and Korean languages.

- Other Languages Single Line Characters: When embedding subtitles in video, the maximum number of characters per line for non-CJK languages.

- Modify Hard Subtitle Style: Click to pop up a dedicated hard subtitle style editor.

- Separate Voice & Background: If selected, will separate background music/accompaniment and speech from the video. When final dubbing merge is completed, the background accompaniment will be embedded back in (this step is relatively slow).

- Loop Background Audio: If the background audio duration is shorter than the final video duration, selecting this will loop the background audio; otherwise, fill with silence.

- Background Volume: The volume setting for the newly embedded background audio. Default 0.8, meaning volume is reduced to 0.8 times the original.

- Add Extra Background Audio: You can also choose a local audio file as a new background accompaniment.

- Restore Punctuation: If selected, will attempt to add punctuation marks after recognition.
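To show how Speech Threshold, Minimum Speech Duration, Maximum Speech Duration, and Silence Split Duration interact, here is a deliberately simplified segmentation sketch. It is not the software's VAD implementation: `segment_speech` is a hypothetical function, it assumes one speech probability per fixed-length frame, and the defaults for `frame_ms` and `min_speech_ms` are chosen only for illustration.

```python
from typing import List, Tuple


def segment_speech(
    probs: List[float],
    frame_ms: int = 100,         # assumed frame length
    threshold: float = 0.5,      # Speech Threshold
    min_speech_ms: int = 0,      # Minimum Speech Duration (ms)
    max_speech_s: float = 6.0,   # Maximum Speech Duration (s)
    min_silence_ms: int = 500,   # Silence Split Duration (ms)
) -> List[Tuple[int, int]]:
    """Return (start_ms, end_ms) speech segments from frame probabilities."""
    max_speech_ms = int(max_speech_s * 1000)
    segments: List[Tuple[int, int]] = []
    start = None          # start of the currently open segment, if any
    last_voiced_end = 0   # end of the most recent speech frame

    def close(end_ms: int) -> None:
        nonlocal start
        if end_ms - start >= min_speech_ms:  # drop segments that are too short
            segments.append((start, end_ms))
        start = None

    for i, p in enumerate(probs):
        t0, t1 = i * frame_ms, (i + 1) * frame_ms
        if p > threshold:                    # frame counts as speech
            if start is None:
                start = t0
            last_voiced_end = t1
            if t1 - start >= max_speech_ms:  # forced split at the maximum
                close(t1)
        elif start is not None and t1 - last_voiced_end >= min_silence_ms:
            close(last_voiced_end)           # enough silence: end the segment
    if start is not None:
        close(last_voiced_end)
    return segments


# 1.0 s of speech, 0.6 s of silence, then 0.5 s of speech.
print(segment_speech([0.9] * 10 + [0.1] * 6 + [0.8] * 5))
```

In this model, lowering the threshold or shortening the silence split produces more and shorter segments, while a larger minimum speech duration discards brief sounds entirely.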

Line 8: Progress Bar

After the task is completed, click the bottom progress bar area to open the output folder. You will see the final MP4 file and materials generated during the process, such as SRT subtitles and dubbed audio files.

As the workflow above shows, the three channels matter most: the Speech Recognition Channel, the Translation Channel, and the Dubbing Channel.

Speech Recognition Channel Introduction

This channel's function is to convert human speech in audio/video into SRT subtitle files. Supports the following speech recognition channels:

- faster-whisper Local Mode

- openai-whisper Local Mode

- Alibaba FunASR Chinese Recognition

- Huggingface_ASR

- Google Speech Recognition

- ByteDance Volcano Subtitle Generation

- ByteDance Speech Recognition Large Model Fast Version

- OpenAI Speech Recognition

- Elevenlabs.io Speech Recognition

- Parakeet-tdt Speech Recognition

- STT Speech Recognition API

- Custom Speech Recognition API

- Gemini Large Model Recognition

- Alibaba Bailian Qwen3-ASR

- deepgram.com Speech Recognition

- faster-whisper-xxl.exe Speech Recognition

- Whisper.cpp Speech Recognition

- Whisperx-api Speech Recognition

- 302.AI Speech Recognition

Translation Channel Introduction

The translation channel is used to translate the original subtitles generated by the Speech Recognition Channel into target language subtitles, e.g., Chinese subtitles translated into English subtitles or vice versa.

Translation results have blank lines or are missing many lines

Cause Analysis: When using traditional translation channels like Baidu Translate, Tencent Translate, etc., or when using AI translation but not selecting "Send Complete Subtitle", the subtitle text is sent line by line to the translation engine, expecting the same number of lines in the returned result. If the translation engine returns a different number of lines than sent, blank lines will appear.

Solution: To avoid both problems, do not use small local models, especially 7b, 14b, or 32b ones. If you must use one, open Menu -- Tools -- Advanced Settings, change the "Simultaneous translation subtitle count" to 1 (trans_thread=1), and deselect "Send Complete Subtitle". However, this method is slower and cannot take context into account, so translation quality is lower.

Alternatively, use a more capable online AI large model, such as the Gemini or DeepSeek online APIs.
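The cause described above can be reproduced with a toy model. In the sketch below, `translate_batch` sends several lines at once and pairs the reply with the input by position, while `translate_line_by_line` corresponds to a batch size of 1. All names are hypothetical, and the software's real alignment logic may differ.

```python
from typing import Callable, List


def translate_batch(lines: List[str], engine: Callable[[str], str]) -> List[str]:
    """Send all lines in one request and pair the reply up by position."""
    returned = engine("\n".join(lines)).split("\n")
    # Too few lines back: the tail is padded with blanks.
    # Too many lines back: the extras are cut off.
    return (returned + [""] * len(lines))[: len(lines)]


def translate_line_by_line(lines: List[str], engine: Callable[[str], str]) -> List[str]:
    """One request per line: slower and context-free, but always aligned."""
    return [engine(line).replace("\n", " ") for line in lines]


def merging_engine(text: str) -> str:
    """Fake engine that 'translates' to upper case and merges the first two lines."""
    return text.upper().replace("\n", " ", 1)


lines = ["a", "b", "c"]
print(translate_batch(lines, merging_engine))         # ['A B', 'C', '']
print(translate_line_by_line(lines, merging_engine))  # ['A', 'B', 'C']
```

The blank third entry in the batch result is the kind of blank line the cause analysis refers to: the engine returned two lines for three inputs, and every later line shifted out of place.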

- Using AI translation, prompt words appear in the results

When using AI translation channels, the translation result sometimes includes the prompt text itself. This is more common with locally deployed small models, such as 14b or 32b ones. The root cause is that the model is too small to follow instructions strictly.

Dubbing Channel Introduction

Used to dub line by line based on subtitle files. Supports the following dubbing channels:

You can set the number of simultaneous dubbing tasks in Menu -- Tools/Options -- Advanced Options -- Dubbing Thread Count. The default is 1. For faster dubbing, increase this value; for example, setting it to 5 starts 5 dubbing tasks simultaneously. Note that a higher value may exceed the dubbing service's API rate limit and cause failures.
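The effect of the Dubbing Thread Count can be pictured with a standard thread pool. This is a sketch using Python's `concurrent.futures`, not the software's own code; `dub_all` and the `synthesize` callable are hypothetical stand-ins for a real TTS request.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def dub_all(texts: List[str], synthesize: Callable[[str], str],
            thread_count: int = 1) -> List[str]:
    """Dub every subtitle line, running up to thread_count requests at once.

    Results come back in the same order as the input lines.
    """
    if thread_count < 1:
        raise ValueError("thread_count must be at least 1")
    with ThreadPoolExecutor(max_workers=thread_count) as pool:
        return list(pool.map(synthesize, texts))


def fake_tts(text: str) -> str:
    """Stand-in for a TTS request; returns the name of an audio file."""
    return f"{text}.wav"


print(dub_all(["line1", "line2", "line3"], fake_tts, thread_count=5))
```

With a real online service, each worker sends its own request, so a high thread count multiplies the request rate and makes rate-limit errors more likely.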

How to perform voice cloning?

You can choose F5-TTS/index-tts/clone-voice/CosyVoice/GPT-SOVITS/Chatterbox, etc., in the dubbing channel, select the clone role, and it will use the original voice as a reference audio for dubbing, obtaining dubbing with the original voice timbre.

Note: The reference audio generally requires a duration of 3-10s and should be free of background noise with clear pronunciation; otherwise, the cloning effect will be poor. When using this function, please set the Minimum Speech Duration (ms) to 3000-4000.
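The duration requirement for reference audio can be checked before dubbing. The sketch below uses Python's standard `wave` module and therefore only handles uncompressed WAV files; both helper names are hypothetical, and the 3-10 s bounds come from the note above.

```python
import wave


def wav_duration_seconds(path: str) -> float:
    """Duration of an uncompressed WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_usable_reference(duration_s: float,
                        min_s: float = 3.0, max_s: float = 10.0) -> bool:
    """True when a clip's length falls inside the recommended 3-10 s range."""
    return min_s <= duration_s <= max_s


for seconds in (2.0, 4.5, 12.0):
    print(seconds, is_usable_reference(seconds))
```

Duration is only one of the requirements; background noise and unclear pronunciation still have to be judged by listening.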

Advanced Options Explanation

Menu - Tools - Advanced Options contains more fine-grained controls for personalized adjustments.

Click to view specific parameters and usage instructions for Advanced Options