Explanation of Advanced Settings Options

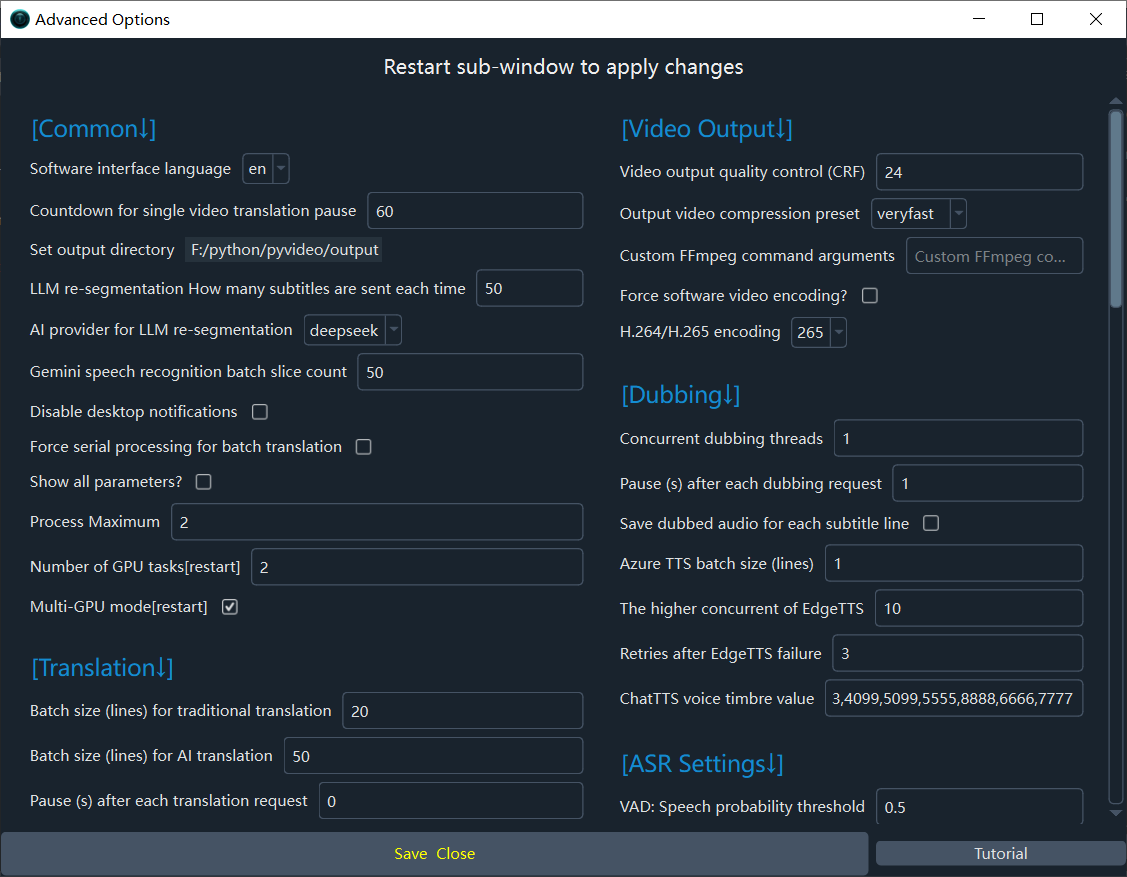

You can fine-tune many parameters under Tools/Options -> Advanced Options in the top menu, as shown in the image above.

【Common】

- Software interface language: Sets the language of the software's interface. Requires a restart to take effect.
- Countdown for single video translation pause: Length of the pause countdown, in seconds, when translating a single video.
- Set output directory: Directory where results (separated video, subtitles, dubbing) are saved. Defaults to the 'output' folder.
- LLM re-segmentation: how many subtitles are sent each time: Number of subtitles sent to the LLM in each request when re-segmenting sentences. Larger values give better sentence segmentation, and sending all subtitles at once is ideal, but this is limited by the model's maximum output tokens and context length (max_token). Input that is too long may exceed the AI's limit and cause the request to fail. Default: 20 subtitles.
- AI provider for LLM re-segmentation: AI provider used for LLM re-segmentation. Supports 'openai' or 'deepseek'.
- Gemini speech recognition batch slice count: Number of audio slices sent per request for Gemini recognition. Larger values improve accuracy but increase the failure rate.
- Disable desktop notifications: Turns off desktop notifications when a task completes or fails.
- Force serial processing for batch translation: Processes batch translations one at a time instead of in parallel.
- Show all parameters?: To avoid clutter, most parameters are hidden on the main interface by default. Select this option to show all parameters by default.
- Process Maximum: Maximum number of processes.
- Number of GPU tasks [restart]: Number of GPU tasks that can run simultaneously. Keep this at 1 unless your GPU has more than 20 GB of video memory. Requires a restart.
- Multi-GPU mode [restart]: If you have multiple graphics cards with identical video memory, enable this option and set Number of GPU tasks to 2 or to the number of graphics cards. Requires a restart.
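To make the re-segmentation batch size concrete, here is a minimal Python sketch of splitting subtitles into consecutive groups of the configured size, one group per LLM request. The helper name `batch_subtitles` is hypothetical and not part of the software; it only illustrates how the setting determines the number of requests.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def batch_subtitles(subtitles: Sequence[T], batch_size: int = 20) -> List[List[T]]:
    """Split subtitles into consecutive groups of at most batch_size items.

    Hypothetical helper: each returned group stands for one LLM request.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [list(subtitles[i:i + batch_size]) for i in range(0, len(subtitles), batch_size)]


subs = [f"line {n}" for n in range(45)]
print([len(b) for b in batch_subtitles(subs)])  # [20, 20, 5]
```

With the default of 20, 45 subtitles take three requests, and the last one carries only 5 lines of context. Raising the value to 45 would send everything in one request, provided it still fits within the model's token limits.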

【Video Output】

- Video output quality control (CRF): Constant Rate Factor (CRF) for video quality. 0 = best quality (lossless with libx264; huge file), 51 = lowest quality (smallest file). Default: 23 (balanced).
- Output video compression preset: Controls the trade-off between encoding speed and compression efficiency (e.g., ultrafast, medium, slow). Faster presets produce larger files at the same quality.
- Custom FFmpeg command arguments: Extra FFmpeg arguments, inserted just before the output file argument.
- Force software video encoding?: Forces software encoding (slower but more compatible). By default, hardware encoding is preferred.
- H.264/H.265 encoding: Video codec: libx264 (H.264, better compatibility) or libx265 (H.265, higher compression).
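The following Python sketch shows how the CRF, preset, codec, and custom-argument settings could map onto a software-encoding FFmpeg command line. The function name `build_ffmpeg_cmd` and the choice to copy the audio stream unchanged (`-c:a copy`) are assumptions for illustration; the software's actual command, including its hardware-encoding path, may differ.

```python
import shlex
from typing import List, Optional, Sequence


def build_ffmpeg_cmd(
    src: str,
    dst: str,
    crf: int = 23,
    preset: str = "medium",
    codec: str = "libx264",
    extra_args: Optional[Sequence[str]] = None,
) -> List[str]:
    """Build an FFmpeg argument list for software H.264/H.265 encoding (hypothetical helper)."""
    if not 0 <= crf <= 51:
        raise ValueError("CRF must be between 0 and 51")
    if codec not in ("libx264", "libx265"):
        raise ValueError("codec must be libx264 or libx265")
    cmd = ["ffmpeg", "-y", "-i", src,
           "-c:v", codec, "-crf", str(crf), "-preset", preset,
           "-c:a", "copy"]  # assumption: audio is copied without re-encoding
    # Custom arguments go just before the output file, as the setting describes.
    cmd.extend(extra_args or [])
    cmd.append(dst)
    return cmd


print(shlex.join(build_ffmpeg_cmd("input.mp4", "output.mp4")))
# ffmpeg -y -i input.mp4 -c:v libx264 -crf 23 -preset medium -c:a copy output.mp4
```

Returning a list rather than a single string lets you pass it straight to `subprocess.run(cmd, check=True)` without shell quoting issues.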

【Translation】

- Batch size (lines) for traditional translation: Number of subtitle lines sent per request for traditional (non-AI) translation.
- Batch size (lines) for AI translation: Number of subtitle lines sent per request for AI translation.
- Pause (s) after each translation request: Delay, in seconds, between translation requests to avoid rate limiting.
- Send full SRT format for AI translation: Sends the content in full SRT format when using AI translation.
- AI temperature for translation subtitles: Temperature of the AI model. Default: 0.2.
- AI translation with full original subtitles: Includes the complete original subtitles as context for the AI, which yields higher translation quality. [Important note]
  1. You must use an advanced model that supports very long contexts.
  2. Token consumption will increase several-fold.
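The interaction between the batch size and the pause setting can be sketched in Python as follows. `translate_in_batches` and the `translate_batch` callback are hypothetical stand-ins for whichever translation service is configured; the `sleep` parameter is injectable only so the pauses can be observed in tests.

```python
import time
from typing import Callable, List, Sequence


def translate_in_batches(
    lines: Sequence[str],
    batch_size: int,
    pause_s: float,
    translate_batch: Callable[[List[str]], List[str]],
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Send lines in batches, pausing between requests to avoid rate limiting."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    results: List[str] = []
    for n, start in enumerate(range(0, len(lines), batch_size)):
        if n > 0:
            sleep(pause_s)  # wait before every request except the first
        results.extend(translate_batch(list(lines[start:start + batch_size])))
    return results
```

For 5 lines with a batch size of 2, this makes three requests and pauses twice. A larger batch size means fewer requests (and fewer pauses) but longer individual requests.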

【Dubbing】

- Concurrent dubbing threads: Number of threads used for dubbing at the same time.
- Pause (s) after each dubbing request: Delay, in seconds, between dubbing requests to avoid rate limiting.
- Save dubbed audio for each subtitle line: Keeps the dubbed audio for each individual subtitle line.
- Azure TTS batch size (lines): Number of lines sent per Azure TTS batch request.
- EdgeTTS concurrency: The more concurrent voice-over requests the EdgeTTS channel runs, the faster dubbing finishes, but requests are more likely to fail due to rate throttling.
- Retries after EdgeTTS failure: Number of retries after an EdgeTTS request fails.
- ChatTTS voice timbre value: Voice timbre value for ChatTTS.
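A minimal Python sketch of how a concurrency limit and a retry count might work together for dubbing. `dub_all` and the `synthesize` callback are hypothetical placeholders for a TTS call; the software's real EdgeTTS handling is not shown here.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

R = TypeVar("R")


def dub_all(
    lines: Sequence[str],
    synthesize: Callable[[str], R],
    threads: int = 2,
    retries: int = 2,
) -> List[R]:
    """Dub every line using up to `threads` workers, retrying failed lines."""
    if threads < 1 or retries < 0:
        raise ValueError("threads must be >= 1 and retries >= 0")

    def dub_one(text: str) -> R:
        # One initial attempt plus `retries` further attempts.
        for attempt in range(retries + 1):
            try:
                return synthesize(text)
            except Exception:
                if attempt == retries:
                    raise  # out of retries: surface the last error
        raise AssertionError("unreachable")

    with ThreadPoolExecutor(max_workers=threads) as pool:
        # map() keeps results in the same order as the input lines.
        return list(pool.map(dub_one, lines))
```

Raising `threads` shortens the total time, but each extra worker adds load on the TTS service, which is why higher concurrency also makes throttling failures (and therefore retries) more likely.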

【Alignment】

- Maximum audio speed-up rate: Maximum audio speed-up rate. Default: 100.
- Maximum video slow-down rate: Maximum video slow-down rate. Default: 10 (cannot exceed 10).
- Number of characters per line for CJK: Maximum number of characters per line for Chinese, Japanese, and Korean subtitles; longer text wraps onto a new line.
- Number of words per line for Other: Maximum number of words per line for subtitles in other languages; longer text wraps onto a new line.
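The two line-length settings count different units: characters for CJK text, which is not separated by spaces, and words for other languages. The Python sketch below illustrates that difference with a simple fixed-width split; `wrap_subtitle` is a hypothetical helper, and the software's actual line-breaking rules (for example, around punctuation) may be more refined.

```python
def wrap_subtitle(text: str, is_cjk: bool, max_per_line: int) -> str:
    """Break a subtitle into lines of at most max_per_line characters (CJK) or words (other)."""
    if max_per_line < 1:
        raise ValueError("max_per_line must be at least 1")
    if is_cjk:
        # CJK: count characters, since words are not separated by spaces.
        chars = text.strip()
        lines = [chars[i:i + max_per_line] for i in range(0, len(chars), max_per_line)]
    else:
        # Other languages: count whitespace-separated words.
        words = text.split()
        lines = [" ".join(words[i:i + max_per_line]) for i in range(0, len(words), max_per_line)]
    return "\n".join(lines)


print(wrap_subtitle("the quick brown fox jumps", is_cjk=False, max_per_line=2))
```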

【ASR Settings】

- VAD: Speech probability threshold: Minimum probability for an audio chunk to be considered speech. Default: 0.5.
- VAD: Max speech duration (s): Maximum duration, in seconds, of a single speech segment before it is split. Default: 8 s.
- VAD: Min silence duration for split (ms): Minimum silence duration, in milliseconds, that marks the end of a segment. Default: 500 ms.
- Merge sub into adjacent sub if less than: If a subtitle is shorter than this value (in milliseconds), the software tries to merge it into an adjacent subtitle. Default: 1000 ms.
- no speech threshold: Threshold on the model's estimated probability that a segment contains no speech; above it, the segment may be treated as silence.
- temperature: Sampling temperature used during recognition.
- repetition penalty: Increasing this value helps reduce repeated text.
- compression ratio threshold: Decreasing this value helps reduce repeated text.
- hotwords: Hint words or phrases (such as names or terms) to help the model recognize them correctly.
- Short sub will be merged if selected: Short subtitles are merged only when this option is selected.
- Batch size for Faster-Whisper model recognition: Batch size for Faster-Whisper recognition. Larger values are faster but require more GPU memory; a value that is too large may exhaust GPU memory.
- Select VAD: Selects the voice activity detection (VAD) model to use.
- Model for speaker separation: The model used for speaker separation. The default built-in model supports both Chinese and English. Pyannote is optional; to use it, you need a token from https://huggingface.co and must accept the Pyannote license agreement. For details, see the tutorial: https://pvt9.com/shuohuaren
- Your token from huggingface.co: Enter your token from huggingface.co; without it, Pyannote speaker separation cannot be used. For details, see the tutorial: https://pvt9.com/shuohuaren
- faster/whisper models: Comma-separated list of model names for faster-whisper and OpenAI modes.
- whisper.cpp models: Comma-separated list of model names for whisper.cpp mode.
- CUDA compute type: CUDA compute type for faster-whisper (e.g., int8, float16, float32, default).
- Recognition accuracy (beam_size): Beam size for transcription (1-5). Higher is more accurate but uses more VRAM.
- Recognition accuracy (best_of): Best-of for transcription (1-5). Higher is more accurate but uses more VRAM.
- Enable context awareness: Conditions recognition on the previous text for better context (uses more GPU memory and may cause repetition).
- Threads for separation: Number of threads used to separate vocals from background audio. More threads are faster but consume more resources.
- Convert Traditional to Simplified Chinese subtitles: Forces recognized Traditional Chinese text to be converted to Simplified Chinese.
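To show how the three VAD settings interact, here is a simplified Python sketch that groups per-frame speech probabilities into segments. It assumes the VAD model has already produced one probability per fixed-length frame (`frame_ms`); the function `segment_speech` is hypothetical and is not the software's VAD code. Real VAD implementations add refinements such as padding around segments.

```python
from typing import List, Optional, Sequence, Tuple


def segment_speech(
    probs: Sequence[float],
    frame_ms: int,
    threshold: float = 0.5,
    max_speech_s: float = 8.0,
    min_silence_ms: int = 500,
) -> List[Tuple[int, int]]:
    """Group per-frame speech probabilities into (start_ms, end_ms) segments."""
    max_frames = max(1, int(max_speech_s * 1000 // frame_ms))
    min_silence_frames = max(1, int(min_silence_ms // frame_ms))
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None  # frame index where the current segment began
    silence_run = 0              # consecutive non-speech frames inside the segment
    for i, p in enumerate(probs):
        is_speech = p >= threshold  # speech probability threshold
        if start is None:
            if not is_speech:
                continue  # still outside speech
            start, silence_run = i, 0
        if is_speech:
            silence_run = 0
        else:
            silence_run += 1
            if silence_run >= min_silence_frames:
                # Pause is long enough: end the segment where the silence began.
                segments.append((start * frame_ms, (i - silence_run + 1) * frame_ms))
                start = None
                continue
        if i - start + 1 >= max_frames:
            # Segment hit the maximum duration: force a split here.
            segments.append((start * frame_ms, (i + 1) * frame_ms))
            start = None
    if start is not None:
        # Audio ended mid-segment; drop any trailing silence frames.
        segments.append((start * frame_ms, (len(probs) - silence_run) * frame_ms))
    return segments
```

Lowering the minimum silence duration splits at shorter pauses and produces more, shorter subtitles; the maximum speech duration caps segment length even when the speaker never pauses.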

【Whisper Prompt】

Each of the following options sets the initial prompt for the Whisper model when recognizing speech in the corresponding language:

- initial prompt for Simplified Chinese
- initial prompt for Traditional Chinese
- initial prompt for English
- initial prompt for French
- initial prompt for German
- initial prompt for Japanese
- initial prompt for Korean
- initial prompt for Russian
- initial prompt for Spanish
- initial prompt for Thai
- initial prompt for Italian
- initial prompt for Portuguese
- initial prompt for Vietnamese
- initial prompt for Arabic
- initial prompt for Turkish
- initial prompt for Hindi
- initial prompt for Hungarian
- initial prompt for Ukrainian
- initial prompt for Indonesian
- initial prompt for Malay
- initial prompt for Kazakh
- initial prompt for Czech
- initial prompt for Polish
- initial prompt for Dutch
- initial prompt for Swedish
- initial prompt for Hebrew
- initial prompt for Bengali
- initial prompt for Persian
- initial prompt for Urdu
- initial prompt for Cantonese
- initial prompt for Filipino