Adjusting VAD Parameters in Speech Recognition

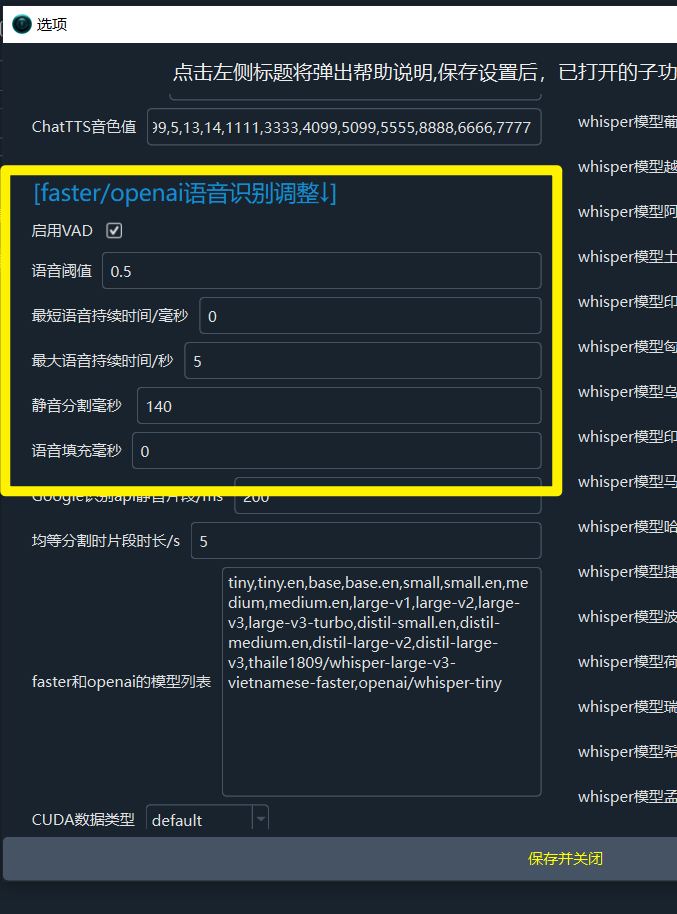

During the speech recognition stage of video translation, the generated subtitles are sometimes far too long (tens of seconds or even minutes) or too short (under 1 second). Adjusting the VAD (Voice Activity Detection) parameters can reduce these problems and make the subtitles match the actual speech more closely.

What is VAD?

VAD is a voice activity detection tool that identifies the speech segments in audio and separates them from silence or noise. Used together with a speech recognition tool such as Whisper, it detects and segments speech fragments around recognition, which improves the recognition results.

After version 3.92, the default VAD model is ten-vad. You can switch to Silero manually via the menu Tools -> Advanced Options.

Parameter Details and Adjustment Suggestions

threshold (Speech Probability Threshold)

Function: The minimum probability for an audio segment to be considered speech. The VAD model (for example Silero VAD) calculates a speech probability for each short audio chunk. Chunks above this threshold are treated as speech; chunks below it are treated as silence or noise.

Adjustment Suggestion: The default value is 0.5, which is suitable for most situations. If there are many false positives (e.g., noise being recognized as speech), try increasing it to 0.6 or 0.7. If many speech segments are being lost, try decreasing it to 0.3 or 0.4. Test gradually based on audio quality.
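To make the threshold concrete, here is a minimal Python sketch. It assumes the VAD model has already produced one speech probability per short audio chunk (the probability values below are made up) and simply labels each chunk as speech or non-speech. The real ten-vad and Silero implementations add smoothing that this ignores, and `label_frames` is a hypothetical helper, not part of either library.

```python
def label_frames(probs, threshold=0.5):
    """Label each chunk True (speech) if its probability exceeds threshold."""
    return [p > threshold for p in probs]


# Made-up per-chunk speech probabilities, for illustration only.
probs = [0.1, 0.45, 0.55, 0.9, 0.65, 0.35, 0.2]

print(label_frames(probs, 0.5))  # default
print(label_frames(probs, 0.7))  # stricter: borderline chunks become non-speech
print(label_frames(probs, 0.3))  # looser: more chunks count as speech
```

With these values, 0.5 marks three chunks as speech, 0.7 keeps only one, and 0.3 marks five. This is the trade-off described above: a higher threshold suppresses noise, a lower one recovers quiet speech.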

min_speech_duration_ms (Minimum Speech Duration, unit: milliseconds)

Function: Speech segments shorter than this value will be merged with preceding or following subtitles to reach this duration.

Adjustment Suggestion: The default value is 1000 milliseconds. If you use voice cloning (the clone voice role), set this value to at least 3000 milliseconds.
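The merging rule can be sketched as follows. This is an illustration under assumptions, not the tool's actual code. Segments are (start_ms, end_ms) pairs, and a short segment is joined to whichever neighbour is closer in time. That is one plausible way to choose between the preceding and following segment. The name `merge_short_segments` is hypothetical.

```python
def merge_short_segments(segments, min_speech_ms=1000):
    """Merge segments shorter than min_speech_ms into a neighbour.

    segments: sorted, non-overlapping (start_ms, end_ms) tuples.
    A short segment joins the neighbour with the smaller gap; the merged
    segment spans that gap. Assumed policy, for illustration only.
    """
    segs = [list(s) for s in segments]
    i = 0
    while i < len(segs) and len(segs) > 1:
        start, end = segs[i]
        if end - start >= min_speech_ms:
            i += 1  # long enough, move on
            continue
        gap_prev = start - segs[i - 1][1] if i > 0 else float("inf")
        gap_next = segs[i + 1][0] - end if i + 1 < len(segs) else float("inf")
        if gap_prev <= gap_next:
            segs[i - 1][1] = end  # extend the previous segment forward
        else:
            segs[i + 1][0] = start  # extend the next segment backward
        del segs[i]  # whatever now sits at index i is checked next
    return [tuple(s) for s in segs]


segments = [(0, 2000), (2300, 2700), (5000, 6500), (6600, 6900)]
print(merge_short_segments(segments))  # [(0, 2700), (5000, 6900)]
```

For voice cloning you would call it with `min_speech_ms=3000`. If only one segment remains, it can still be shorter than the limit, because there is nothing left to merge it with.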

max_speech_duration_s (Maximum Speech Duration, unit: seconds)

Function: Limits the maximum length of a single speech segment. When the duration exceeds this limit, the system will split it at a silence point lasting more than 100 milliseconds. If no such silence exists, it will force a split just before the specified duration to avoid overly long segments.

Adjustment Suggestion: The default value is 5 seconds. If you need to control segment length (e.g., for dialogue segmentation), you can set it to 10 seconds or 30 seconds, adjusting according to your specific needs.
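The split rule can be sketched like this. The sketch assumes the pauses inside a segment are already known as (start_ms, end_ms) pairs. It cuts at the latest pause longer than 100 ms that still keeps the piece within the limit and drops the pause itself. If no such pause exists, it falls back to a hard cut exactly at the limit. The helper name and the choice of the latest qualifying pause are assumptions made for illustration.

```python
def split_long_segment(start_ms, end_ms, silences, max_speech_s=5, min_gap_ms=100):
    """Split one speech segment into pieces no longer than max_speech_s.

    silences: sorted (start_ms, end_ms) pauses inside the segment.
    Hypothetical helper illustrating the rule described above.
    """
    max_ms = max_speech_s * 1000
    pieces = []
    cur = start_ms
    while end_ms - cur > max_ms:
        limit = cur + max_ms
        # Pauses longer than min_gap_ms that begin inside the allowed window.
        usable = [(s, e) for s, e in silences
                  if e - s > min_gap_ms and cur < s <= limit]
        if usable:
            s, e = usable[-1]  # latest qualifying pause
            pieces.append((cur, s))
            cur = e  # the pause itself is dropped
        else:
            pieces.append((cur, limit))  # no pause: forced cut at the limit
            cur = limit
    pieces.append((cur, end_ms))
    return pieces


pauses = [(3000, 3050), (4200, 4500), (9000, 9300)]
print(split_long_segment(0, 12000, pauses))
# [(0, 4200), (4500, 9000), (9300, 12000)]
```

The 50 ms pause at 3000 ms is ignored because it is not longer than 100 ms. Without any pauses, a 11-second segment would be cut hard at 5 s and 10 s.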

min_silence_duration_ms (Silence Split Duration, unit: milliseconds)

Function: After speech ends, the system waits for silence lasting this duration before finalizing the speech segment split.

Adjustment Suggestion: The default value is 600 milliseconds. If you need more complete sentences, increase it to 1000-2000 milliseconds. If you want finer segmentation, decrease it to 200 milliseconds.
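The following sketch shows how min_silence_duration_ms bridges short pauses. It assumes per-chunk speech flags (for example, obtained by thresholding the probabilities) and a fixed chunk length. The 100 ms chunk in the example is chosen only to keep the numbers readable, because real models use shorter chunks. A segment is closed only once the silence after it has lasted at least `min_silence_ms`. The function name is hypothetical.

```python
def frames_to_segments(is_speech, frame_ms=32, min_silence_ms=600):
    """Group per-chunk speech flags into (start_ms, end_ms) segments.

    A segment is closed only after the silence following it has lasted
    at least min_silence_ms; shorter pauses stay inside the segment.
    """
    segments = []
    start = None          # start time of the open segment, if any
    silence_start = None  # when the current run of silence began
    for i, speech in enumerate(is_speech):
        t = i * frame_ms
        if speech:
            if start is None:
                start = t
            silence_start = None  # pause was too short: segment continues
        elif start is not None:
            if silence_start is None:
                silence_start = t
            if t + frame_ms - silence_start >= min_silence_ms:
                segments.append((start, silence_start))
                start = silence_start = None
    if start is not None:  # audio ended while a segment was open
        end = silence_start if silence_start is not None else len(is_speech) * frame_ms
        segments.append((start, end))
    return segments


# '#' = speech chunk, '.' = silence chunk, 100 ms each (illustrative).
flags = [c == "#" for c in "###...##........##"]
print(frames_to_segments(flags, frame_ms=100, min_silence_ms=600))
# [(0, 800), (1600, 1800)]
print(frames_to_segments(flags, frame_ms=100, min_silence_ms=200))
# [(0, 300), (600, 800), (1600, 1800)]
```

With 600 ms, the 300 ms pause is bridged and the first two bursts form one sentence-like segment. With 200 ms, the same pause splits them, giving the finer segmentation described above.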

Adjustment Tips

- Prioritize Audio Quality: While parameter tuning is important, a clean audio background has a greater impact on recognition results. Try to use clear, noise-free audio.

- Test Gradually: Start with the default values and adjust parameters one by one, observing changes in the subtitles to find the optimal settings.

- Adapt to the Scenario: Adjust max_speech_duration_s and min_silence_duration_ms based on the audio type (e.g., dialogue, monologue).

Summary

- threshold: Adjust based on audio characteristics; the default 0.5 is generally suitable.

- min_speech_duration_ms and min_silence_duration_ms: Control speech segment length and split sensitivity.

- max_speech_duration_s: Limits long segments, suitable for segmentation needs.
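As a recap, the four parameters and the guidance above can be collected into a small settings object. This is a hypothetical sketch, not the tool's configuration format. The class name, the `problems()` method, and the final consistency check (maximum duration longer than minimum duration) are illustrative additions. The defaults and the 3000 ms voice-cloning rule come from this guide.

```python
from dataclasses import dataclass


@dataclass
class VadSettings:
    """Hypothetical container for the four VAD parameters (defaults from this guide)."""
    threshold: float = 0.5
    min_speech_duration_ms: int = 1000
    max_speech_duration_s: float = 5
    min_silence_duration_ms: int = 600

    def problems(self, clone_voice=False):
        """Return warnings about settings that conflict with the guidance."""
        issues = []
        if not 0.0 < self.threshold < 1.0:
            issues.append("threshold should be a probability between 0 and 1")
        if clone_voice and self.min_speech_duration_ms < 3000:
            issues.append("voice cloning: min_speech_duration_ms should be >= 3000")
        # Sanity check added for this sketch, not stated in the guide.
        if self.max_speech_duration_s * 1000 <= self.min_speech_duration_ms:
            issues.append("max_speech_duration_s is not longer than min_speech_duration_ms")
        return issues


print(VadSettings().problems())                  # []
print(VadSettings().problems(clone_voice=True))  # one voice-cloning warning
print(VadSettings(min_speech_duration_ms=3000).problems(clone_voice=True))  # []
```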

Properly setting these parameters can significantly improve VAD performance and generate more accurate subtitles.