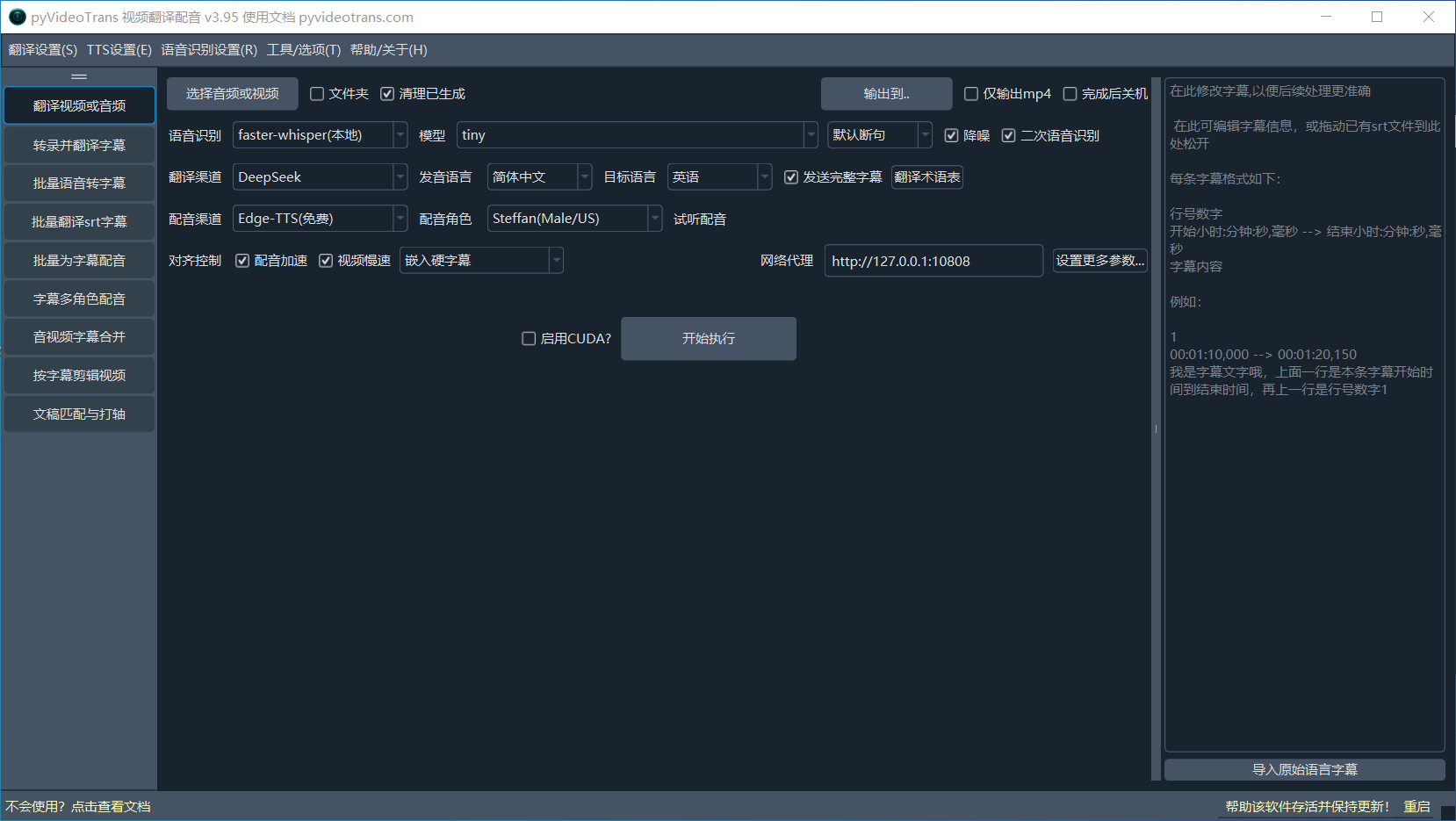

Translate Video and Audio

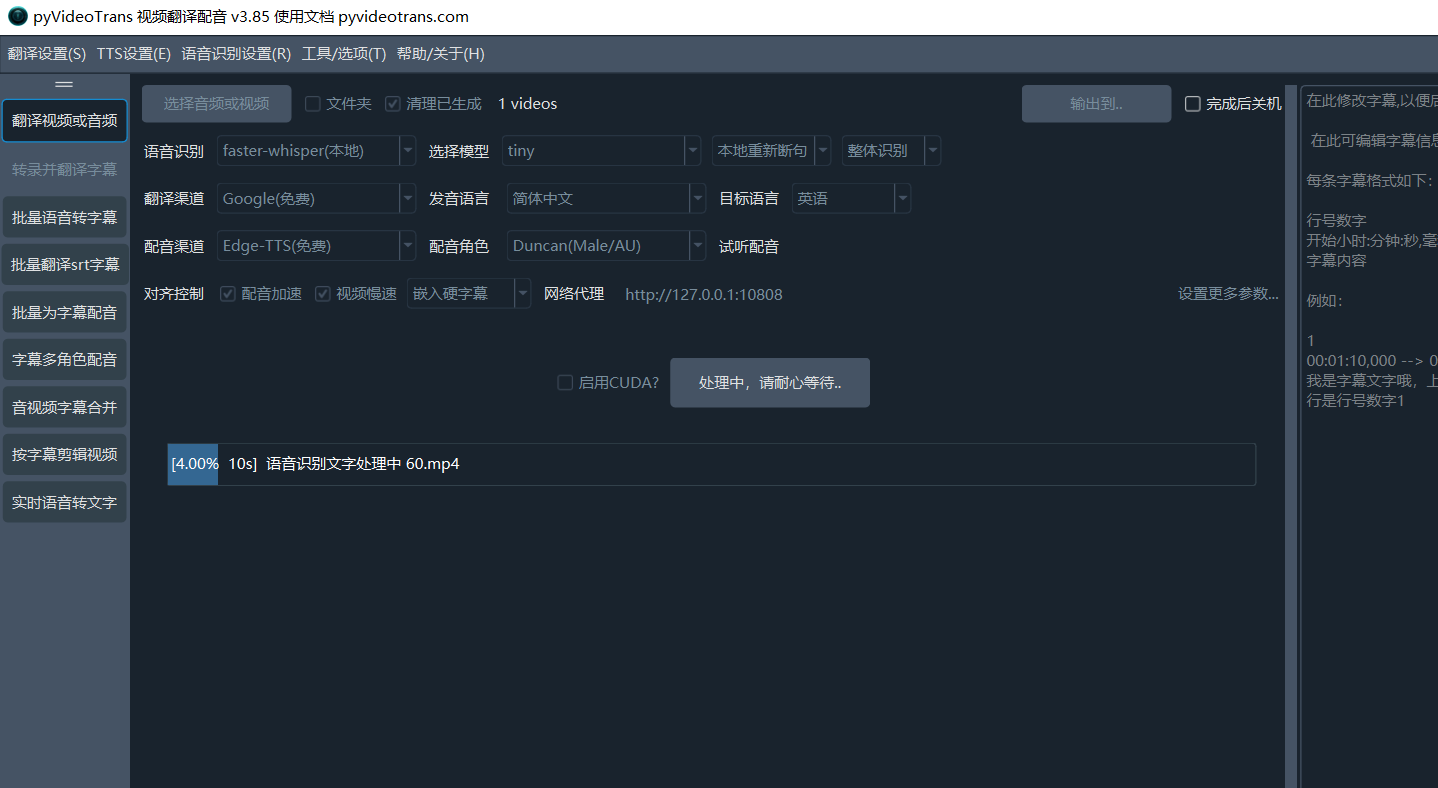

This is the software's core feature and the default interface shown at startup.

The sections below walk you step by step through a complete video/audio translation task.

Line 1: Select the Video to Translate

Supported video formats:

mp4/mov/avi/mkv/webm/mpeg/ogg/mts/ts

Supported audio formats:

wav/mp3/m4a/flac/aac

- Select Audio or Video: Click this button to select one or more video/audio files to translate (hold Ctrl to select multiple files).
- Folder: Check this option to batch-process all videos in a folder.
- Clean Generated: Check this if you need to reprocess the same video from scratch instead of reusing cached files.
- Output to..: By default, translated files are saved to the _video_out folder in the original video's directory. Click this button to choose a different output directory for translated videos.
- Shutdown After Completion: Automatically shuts down the computer after all tasks finish; useful for large, long-running batches.
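The following is a minimal sketch of how the supported-format lists and the default output location could be applied when picking up files, for example with the Folder option. It is not the software's code: the function names are hypothetical, the sketch does not recurse into subfolders (the guide does not say whether the real option does), and it assumes output goes directly into a _video_out folder next to each source file.

```python
from pathlib import Path

# Extensions taken from the supported-format lists above.
VIDEO_EXTS = {"mp4", "mov", "avi", "mkv", "webm", "mpeg", "ogg", "mts", "ts"}
AUDIO_EXTS = {"wav", "mp3", "m4a", "flac", "aac"}


def is_supported(path: Path) -> bool:
    """Return True if the extension (case-insensitive) is a supported video or audio format."""
    ext = path.suffix.lower().lstrip(".")
    return ext in VIDEO_EXTS or ext in AUDIO_EXTS


def collect_folder(folder: Path) -> list:
    """Return supported media files directly inside `folder`, sorted by name (non-recursive)."""
    return sorted(p for p in folder.iterdir() if p.is_file() and is_supported(p))


def default_output_dir(media: Path) -> Path:
    """Assumed default: a `_video_out` folder in the same directory as the source file."""
    return media.parent / "_video_out"
```

For example, `default_output_dir(Path("/videos/talk.mp4"))` gives `/videos/_video_out` under this assumption.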

Line 2: Speech Recognition Channels

Speech Recognition: Transcribes the speech in the audio or video into a subtitle file. The quality of this step directly determines the quality of the final result. More than ten recognition methods are supported.

- faster-whisper(local): A local model (downloaded online on first run). It offers good speed and accuracy and is a sensible choice if you have no special requirements. It comes in more than ten model sizes. The smallest, fastest, and least resource-hungry model is tiny, but its accuracy is very low and it is not recommended. The best-performing models, large-v2 and large-v3, are recommended. Models whose names end in .en or start with distil- support English speech only.
- openai-whisper(local): Largely similar to the above, but somewhat slower; accuracy may be slightly higher. The large-v2/large-v3 models are again recommended.
- Ali FunASR(local): Alibaba's local recognition model, which performs well on Chinese. Worth trying if the original video contains Chinese speech. It also downloads its model online on first run.
- Default Segmentation | LLM Segmentation: Use the default segmentation, or let a large language model (LLM) segment the recognized text intelligently and improve its punctuation.
- Secondary Recognition: Available when dubbing is enabled and a single (not dual) embedded subtitle is selected. After dubbing finishes, the dubbed audio is transcribed again to produce shorter subtitles for embedding, so that subtitles and dubbing line up precisely.

Various online APIs and other models are also supported, such as ByteDance Volcano Subtitle Generation, OpenAI Speech Recognition, Gemini Speech Recognition, and Alibaba Qwen3-ASR Speech Recognition.
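The model-naming rule above (names ending in .en or starting with distil- are English-only; large-v2/large-v3 are recommended) can be written as a small check. This is an illustrative sketch, not the software's logic; the function names and the use of two-letter language codes such as "en" and "zh" are assumptions.

```python
def model_supports_language(model_name: str, language: str) -> bool:
    """English-only models are those ending in '.en' or starting with 'distil-'."""
    english_only = model_name.endswith(".en") or model_name.startswith("distil-")
    return language == "en" or not english_only


# Recommended models, best first, per the guidance above.
RECOMMENDED = ("large-v3", "large-v2")


def pick_model(available, language):
    """Return the first recommended model that is available and supports `language`, else None."""
    for name in RECOMMENDED:
        if name in available and model_supports_language(name, language):
            return name
    return None
```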

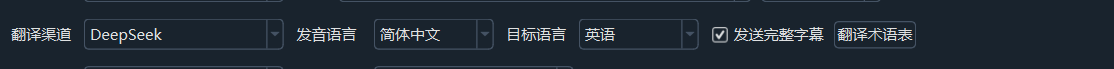

Line 3: Translation Channels

Translation Channels: These translate the transcribed source-language subtitle file into a target-language subtitle file. More than ten built-in translation channels are available.

- Free Traditional Translation: Google Translate (requires proxy), Microsoft Translator (no proxy needed), DeepLX (requires self-deployment)

- Paid Traditional Translation: Baidu Translate, Tencent Translate, Alibaba Machine Translation, DeepL

- AI Intelligent Translation: OpenAI ChatGPT, Gemini, DeepSeek, Claude, Zhipu AI, Silicon Flow, 302.AI, etc. You must enter your own secret key (SK) in Menu - Translation Settings - the corresponding channel's settings panel.

- Compatible AI/Local Models: Self-deployed local large models are also supported. Select the Compatible AI/Local Models channel and enter the API address in Menu - Translation Settings - Local Large Model Settings.

- Source Language: The language spoken in the original video. It must be set correctly; if you are unsure, choose auto.

- Target Language: The language you want the audio/video translated into.

- Translation Glossary: Used with AI translation; a glossary of terms is sent to the AI along with the subtitles.

- Send Complete Subtitles: Used with AI translation; line numbers and timestamps are sent to the AI along with the subtitle text.
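To make the Translation Glossary and Send Complete Subtitles options concrete, here is a hypothetical sketch of how one batch of subtitles might be packaged as text for an AI channel, with and without line numbers and timestamps. The `Cue` class, the `build_payload` function, and the "Glossary:" layout are invented for illustration; the prompt format the software actually sends is not described in this guide.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    index: int   # SRT line number
    start: str   # e.g. "00:00:01,000"
    end: str     # e.g. "00:00:03,500"
    text: str


def build_payload(cues, send_complete: bool, glossary=None) -> str:
    """Build the text for one batch (hypothetical format, not the software's prompt)."""
    parts = []
    if glossary:
        # One "source = target" pair per line, placed before the subtitles.
        terms = "\n".join(f"{src} = {dst}" for src, dst in glossary.items())
        parts.append("Glossary:\n" + terms)
    if send_complete:
        # Full SRT blocks: line number, timestamp line, text.
        blocks = [f"{c.index}\n{c.start} --> {c.end}\n{c.text}" for c in cues]
        parts.append("\n\n".join(blocks))
    else:
        # Text only, one subtitle per line.
        parts.append("\n".join(c.text for c in cues))
    return "\n\n".join(parts)
```

Sending complete subtitles costs more tokens per batch, but it lets the model see where each line sits in time.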

Line 4: Dubbing Channels

Dubbing Channels: The translated subtitle file is dubbed using the channel selected here. Supported online dubbing APIs include OpenAI TTS / Alibaba Qwen-TTS / Edge-TTS / Elevenlabs / ByteDance Volcano Speech Synthesis / Azure-TTS / Minimaxi, etc. Locally deployed open-source TTS models are also supported, such as IndexTTS2 / F5-TTS / CosyVoice / ChatterBox / VoxCPM. Edge-TTS is free and works out of the box. For channels that require configuration, enter the relevant details in Menu -- TTS Settings -- the corresponding channel panel.

- Voice Role: Each dubbing channel usually offers several voice roles. Select the Target Language first, then choose a voice role.

- Preview Dubbing: After selecting a voice role, click this to hear a sample of that role.

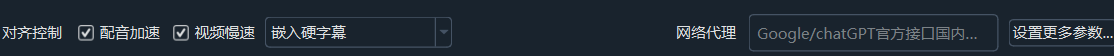

Line 5: Synchronization, Alignment, and Subtitles

Because speech rates differ between languages, the dubbed translation may not match the duration of the original video. The options here handle that mismatch. They mainly address cases where the dubbed audio is longer than the original, to avoid overlapping audio or the video ending before the audio does. Nothing is done when the dubbed audio is shorter.

- Speed Up Dubbing: If a dubbed segment is longer than the corresponding original audio segment, the dubbing is sped up to match the original duration.
- Slow Down Video: Likewise, if a dubbed segment is longer than the corresponding video segment, playback of that video segment is slowed down to match the dubbing duration. (This makes processing considerably slower and generates many intermediate segments. To minimize quality loss, the output file will be several times larger than the original video.)
- No Embedded Subtitles: Only the audio is replaced; no subtitles are added.
- Embed Hard Subtitles: Subtitles are permanently "burned" into the video frames. They cannot be turned off and are displayed in any player.
- Embed Soft Subtitles: Subtitles are packaged into the video as a separate track that players can turn on or off. They are not displayed when the video is played in a web browser.
- (Dual): In the dual variants, each subtitle entry has two lines: the original-language text and the target-language text.
- Network Proxy: Users in mainland China need a proxy to reach foreign services such as Google, Gemini, and OpenAI. If you have a VPN service and know its proxy port, enter it here in a form such as http://127.0.0.1:7860.
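The per-segment arithmetic behind Speed Up Dubbing and Slow Down Video is a simple ratio of durations. The sketch below is illustrative only: `plan_segment` is a hypothetical name, and the policy used when both options are enabled (speed up the audio up to a hypothetical `max_audio_speed` cap, then stretch the video for the rest) is an assumption, since this guide does not say how the software combines them.

```python
def plan_segment(orig_ms, dubbed_ms, speed_up_dubbing, slow_down_video, max_audio_speed=1.5):
    """Return (audio_speed, video_stretch) for one subtitle segment.

    audio_speed > 1 plays the dubbed clip faster; video_stretch > 1 makes the
    video segment last longer. (1.0, 1.0) means nothing is changed.
    """
    if orig_ms <= 0 or dubbed_ms <= orig_ms:
        # Dub fits or is shorter: no processing, as described above.
        return 1.0, 1.0
    ratio = dubbed_ms / orig_ms
    if speed_up_dubbing and slow_down_video:
        # Hypothetical policy: speed up audio up to the cap, stretch video for the remainder.
        audio = min(ratio, max_audio_speed)
        return audio, ratio / audio
    if speed_up_dubbing:
        return ratio, 1.0
    if slow_down_video:
        return 1.0, ratio
    return 1.0, 1.0
```

For a 2000 ms original segment dubbed as 3000 ms, Speed Up Dubbing alone plays the dub at 1.5x, while Slow Down Video alone stretches the video segment to 1.5 times its length.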

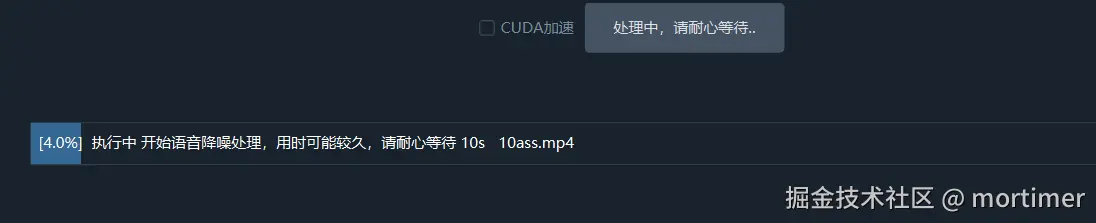

Line 6: Start Execution

- CUDA Acceleration: On Windows and Linux, if you have an NVIDIA graphics card and have correctly installed the CUDA environment, be sure to check this option. It can increase speech recognition speed by several times or even dozens of times.

If you have multiple NVIDIA graphics cards, open Menu -- Tools -- Advanced Options -- General Settings and check Multi-GPU Mode. The software will attempt to use multiple GPUs for parallel processing.
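Before enabling CUDA Acceleration or Multi-GPU Mode, it can help to confirm that your CUDA setup actually sees the cards. The snippet below checks this from PyTorch's point of view using `torch.cuda.is_available`, `torch.cuda.device_count`, and `torch.cuda.get_device_name`; it assumes PyTorch is installed in the Python environment you run it in, and the software's own detection may use a different library, so treat this as a rough diagnostic only.

```python
def cuda_summary():
    """Return (available, device_count, device_names) as seen by PyTorch.

    Returns (False, 0, []) if PyTorch is not installed or reports no CUDA support.
    """
    try:
        import torch
    except ImportError:
        return False, 0, []
    if not torch.cuda.is_available():
        return False, 0, []
    count = torch.cuda.device_count()
    names = [torch.cuda.get_device_name(i) for i in range(count)]
    return True, count, names


if __name__ == "__main__":
    ok, n, names = cuda_summary()
    print("CUDA available:", ok, "| GPUs:", n, names)
```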

After all settings are complete, click the [Start Execution] button.

If multiple audio/video files are selected at once, they are processed concurrently with their stages interleaved, without pausing between files.
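One common way to interleave stages across files is a pipeline with one worker per stage, so that while one file is being dubbed, the next is already being translated and a third is being recognized. The sketch below shows that general pattern with threads and queues; the stage names are hypothetical placeholders and this is not a description of the software's actual scheduler.

```python
import queue
import threading

# Hypothetical stage names; each stage handles one file at a time.
STAGES = ["recognize", "translate", "dub"]


def run_pipeline(files, log):
    """Pass every file through STAGES; different files can be in different stages at once."""
    queues = [queue.Queue() for _ in range(len(STAGES) + 1)]
    lock = threading.Lock()

    def worker(i):
        while True:
            item = queues[i].get()
            if item is None:               # sentinel: forward it and stop
                queues[i + 1].put(None)
                return
            with lock:
                log.append((item, STAGES[i]))  # stand-in for the real work of this stage
            queues[i + 1].put(item)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(len(STAGES))]
    for t in threads:
        t.start()
    for f in files:
        queues[0].put(f)
    queues[0].put(None)
    for t in threads:
        t.join()

    # Collect finished files from the last queue; the sentinel arrives last.
    done = []
    while True:
        item = queues[-1].get()
        if item is None:
            break
        done.append(item)
    return done
```

Each file still goes through the stages in order; only the stages of different files overlap.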

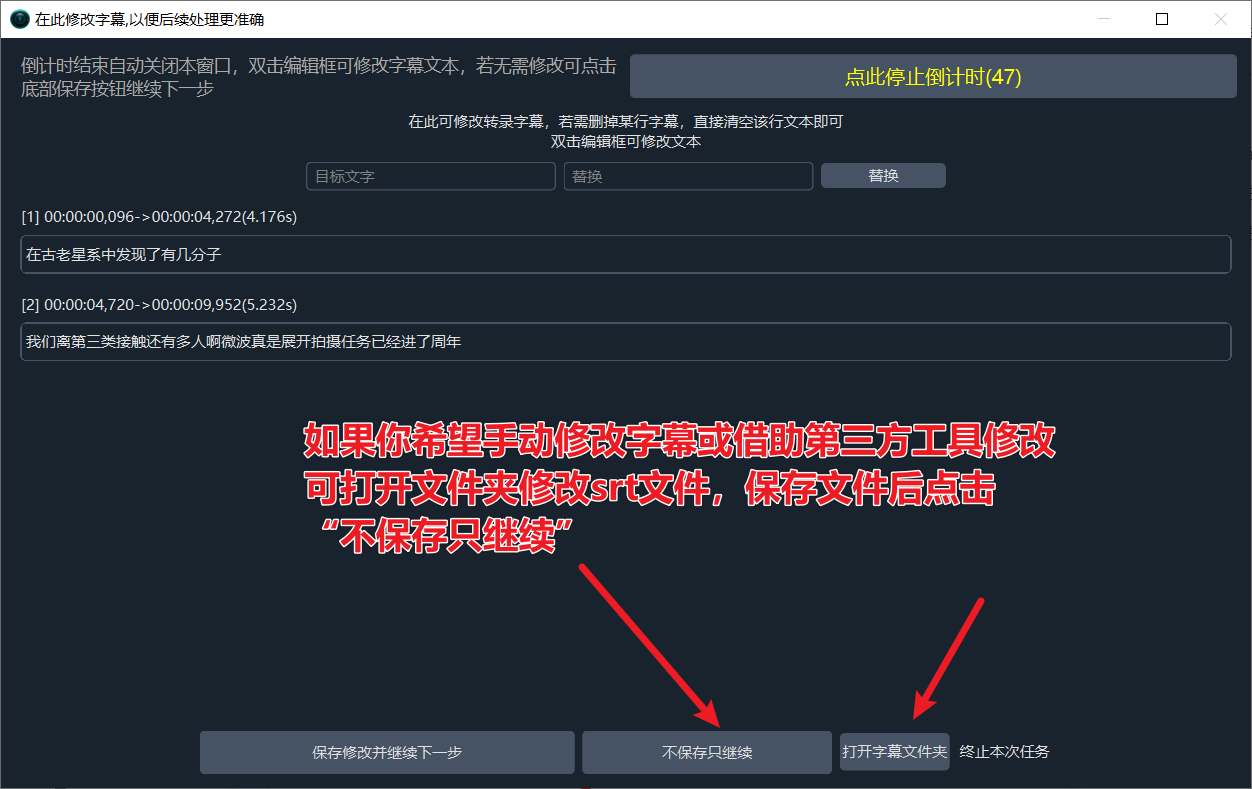

When only one video is selected, a separate subtitle editing window pops up once speech transcription finishes. You can edit the subtitles there to make the later steps more accurate.

First Modification Opportunity: After the speech recognition stage is complete, the subtitle editing window pops up.

In the window that pops up after subtitle translation is complete, you can assign a different voice role to each speaker, or even a specific voice role to each individual subtitle line.

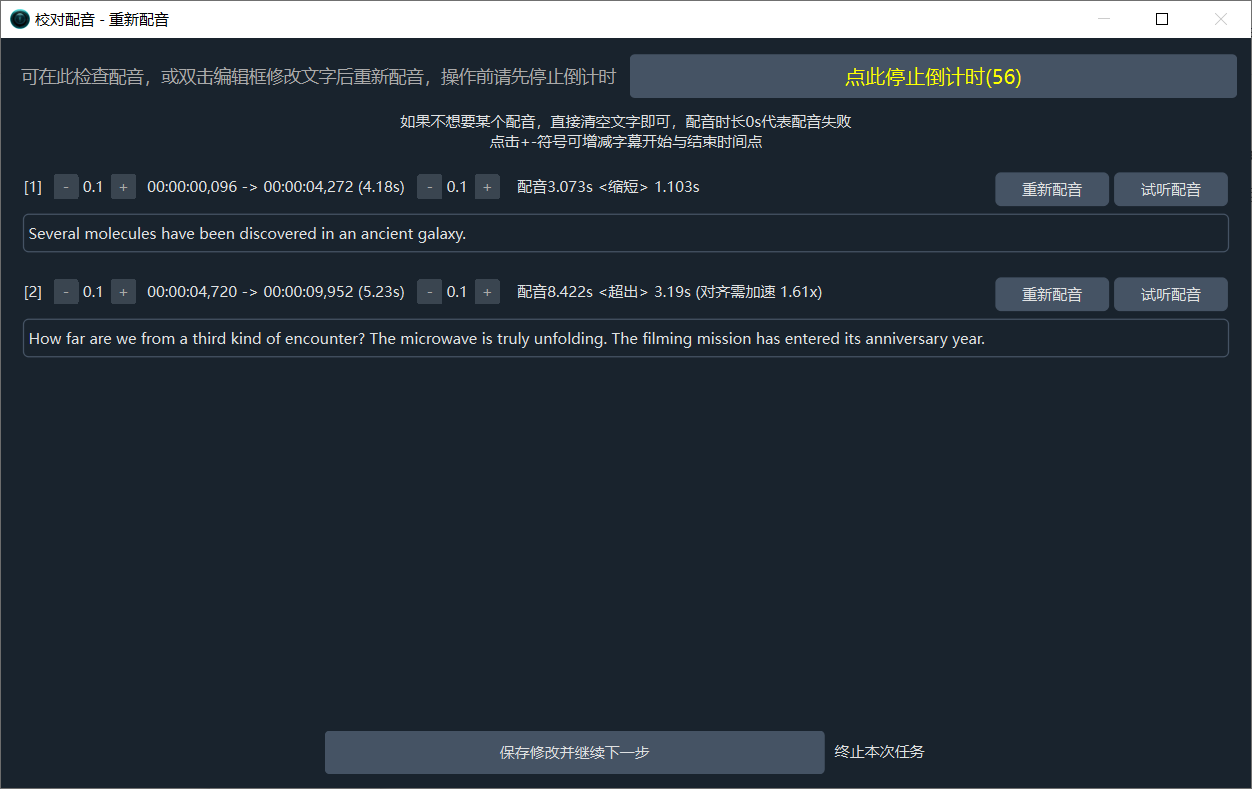

Second Modification Opportunity: After the subtitle translation stage is complete, the subtitle editing and voice role modification window pops up.

Third Modification Opportunity: After dubbing is complete, you can check again or re-dub each subtitle line.
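The per-speaker and per-line voice assignment described above behaves like a two-level lookup: a role set on an individual line wins, otherwise the speaker's role applies, otherwise the role chosen on the main screen. The sketch below assumes exactly that precedence; the function name, speaker labels, and voice names are hypothetical.

```python
def resolve_voice(line_index, speaker, line_overrides, speaker_voices, default_voice):
    """Pick the voice for one subtitle line.

    Precedence (assumed): per-line override > per-speaker role > default role.
    """
    if line_index in line_overrides:
        return line_overrides[line_index]
    return speaker_voices.get(speaker, default_voice)
```

For example, with `speaker_voices = {"spk0": "VoiceA"}` and `line_overrides = {3: "VoiceC"}`, line 3 is spoken by VoiceC even if it belongs to spk0.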

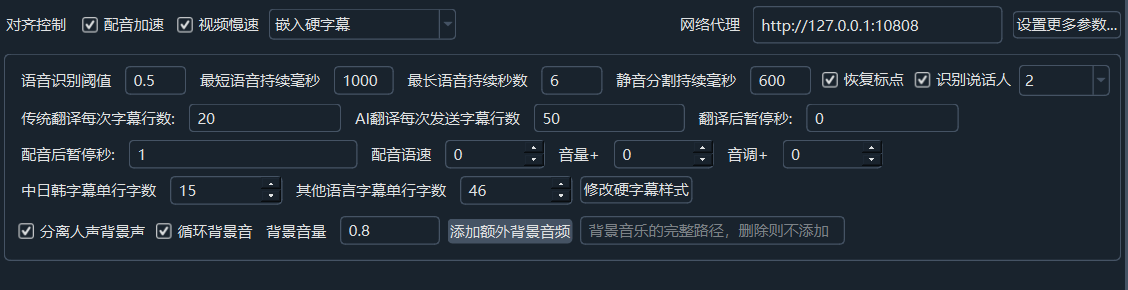

Line 7: Set More Parameters

For finer control over settings such as speech rate, volume, characters per subtitle line, noise reduction, and speaker recognition, click Set More Parameters... to open the advanced parameter panel, which contains the following options:

Noise Reduction: If selected, an Alibaba noise-reduction model is downloaded from modelscope.cn before speech recognition and used to remove noise from the audio, improving recognition accuracy.

Speaker Recognition: If selected, the software attempts to identify and distinguish speakers after speech recognition is complete (accuracy is limited). The number next to this option sets how many speakers to expect; if you know it in advance, entering it can improve accuracy. The default is unlimited. In Advanced Options, you can switch the speaker model (built-in, Alibaba cam++, pyannote, etc.).

Dubbing Speed: Default is 0. Entering 50 increases the speech rate by 50%; -50 decreases it by 50%.

Volume +: Also defaults to 0. Entering 50 increases the volume by 50%; -50 decreases it by 50%.

Pitch +: Default is 0. Entering 20 raises the pitch by 20 Hz, making the voice sharper; -20 lowers it by 20 Hz, making it deeper.

Speech Threshold: The minimum probability for an audio segment to be treated as speech. VAD (voice activity detection) computes a speech probability for each audio segment; segments above this threshold are treated as speech, and the rest as silence or noise. Default is 0.5. Lower values are more sensitive but may mistake noise for speech.
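The Dubbing Speed, Volume +, and Pitch + values are plain signed numbers. Edge-TTS, for example, expresses the same adjustments as signed strings such as "+50%" and "+20Hz". The sketch below only shows that formatting, assuming an Edge-TTS-style channel; the function names are hypothetical, and how the software passes these values to each dubbing channel is not documented here.

```python
def signed(value: int, unit: str) -> str:
    """Format an integer setting with an explicit sign, e.g. 50 -> '+50%', -20 -> '-20Hz'."""
    return f"{value:+d}{unit}"


def tts_params(speed: int = 0, volume: int = 0, pitch: int = 0) -> dict:
    """Map the panel's numeric settings to Edge-TTS-style rate/volume/pitch strings."""
    return {
        "rate": signed(speed, "%"),
        "volume": signed(volume, "%"),
        "pitch": signed(pitch, "Hz"),
    }
```

With the edge-tts Python package, strings in this form are what the `rate`, `volume`, and `pitch` keyword arguments of `edge_tts.Communicate` accept (the defaults there are "+0%", "+0%", and "+0Hz").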

Minimum Speech Duration (ms): The minimum length of a speech segment, in milliseconds. If you use voice cloning, keep this value at 3000 or above.

Maximum Speech Duration (s): The maximum length of a single speech segment, in seconds; longer segments are forcibly split. Default is 6 seconds. Do not set it above 30 seconds.

Silence Split Duration (ms): When speech stops, a segment is only closed once the silence has lasted at least this long, in milliseconds. Default is 500 ms, meaning speech is split only at silences longer than this value.
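To see how Speech Threshold, Minimum Speech Duration, Maximum Speech Duration, and Silence Split Duration interact, here is a simplified, frame-based sketch. It assumes some VAD model has already produced one speech probability per fixed-length frame; the function name and the `frame_ms` and `min_speech_ms` defaults are hypothetical, while the other defaults match the values stated above. It is not the software's code.

```python
def segment_speech(probs, frame_ms=30, threshold=0.5, min_speech_ms=250,
                   max_speech_s=6.0, min_silence_ms=500):
    """Turn per-frame speech probabilities into a list of (start_ms, end_ms) segments."""
    segments = []
    start = None        # start time (ms) of the open segment, or None
    silence_run = 0     # consecutive non-speech frames inside the open segment
    max_ms = max_speech_s * 1000

    def close(end_ms):
        # Keep the segment only if it is at least min_speech_ms long.
        if end_ms - start >= min_speech_ms:
            segments.append((start, end_ms))

    for i, p in enumerate(probs):
        t = i * frame_ms
        if p > threshold:                 # frame counts as speech
            if start is None:
                start = t
            silence_run = 0
        elif start is not None:           # silence inside an open segment
            silence_run += 1
            if silence_run * frame_ms >= min_silence_ms:
                # Silence lasted long enough: end the segment where the silence began.
                close(t - (silence_run - 1) * frame_ms)
                start, silence_run = None, 0
                continue
        if start is not None and t + frame_ms - start >= max_ms:
            # Segment reached the maximum length: force a split here.
            close(t + frame_ms)
            start, silence_run = None, 0

    if start is not None:
        # Audio ended with an open segment: end it after the last speech frame.
        close(len(probs) * frame_ms - silence_run * frame_ms)
    return segments
```

Raising the threshold or the silence duration produces fewer, longer segments; the maximum duration caps how long any single segment can grow.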

Traditional Translation Channel Lines per Batch: Number of subtitle lines sent per batch for traditional translation channels.

AI Translation Channel Lines per Batch: Number of subtitle lines sent per batch for AI translation channels.

Send Complete Subtitles: Whether to send complete subtitle format content when using AI translation channels.

Pause After Translation (s): Pause time in seconds after each translation, used to limit request frequency.

Pause After Dubbing (s): Pause time in seconds after each dubbing, used to limit request frequency.
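The lines-per-batch and pause settings combine in a straightforward way: subtitles are cut into consecutive batches, and the program waits between requests to stay under a channel's rate limit. The sketch below illustrates this under assumptions: `translate_batch` is a hypothetical callable standing in for a real channel, and the sketch pauses between batches rather than after the final one.

```python
import time


def batches(lines, size):
    """Split subtitle lines into consecutive batches of at most `size` lines."""
    if size < 1:
        raise ValueError("batch size must be >= 1")
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def translate_all(lines, size, pause_s, translate_batch):
    """Translate batch by batch, sleeping `pause_s` seconds between requests."""
    out = []
    for n, batch in enumerate(batches(lines, size)):
        if n > 0 and pause_s > 0:
            time.sleep(pause_s)  # throttle request frequency
        out.extend(translate_batch(batch))
    return out
```

Larger batches mean fewer requests (and fewer pauses) but more text per request, which matters for AI channels with context or token limits.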

CJK Characters per Line: When embedding subtitles in video, the maximum number of characters per line for Chinese, Japanese, and Korean languages.

Other Languages Characters per Line: When embedding subtitles in video, the maximum number of characters per line for non-CJK languages.
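The two per-line limits exist because CJK text has no spaces between words, so it must be cut by character count, while other languages can break at spaces. The sketch below illustrates that distinction; the rough CJK detection via Unicode character names, the function names, and the default limits are assumptions for illustration, not the software's values.

```python
import textwrap
import unicodedata


def is_cjk(text: str) -> bool:
    """Rough check: True if any character is a CJK ideograph, kana, or Hangul."""
    for ch in text:
        name = unicodedata.name(ch, "")
        if name.startswith(("CJK UNIFIED", "HIRAGANA", "KATAKANA", "HANGUL")):
            return True
    return False


def wrap_subtitle(text, cjk_max=20, other_max=42):
    """Split one subtitle into display lines using the per-script character limit."""
    if is_cjk(text):
        # No spaces between words: cut every cjk_max characters.
        return [text[i:i + cjk_max] for i in range(0, len(text), cjk_max)]
    # Other languages: break at spaces so words stay whole.
    return textwrap.wrap(text, width=other_max)
```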

Modify Hard Subtitle Style: Clicking will pop up a dedicated hard subtitle style editor.

Separate Voice and Background: If selected, the background music/accompaniment is separated from the speech in the video and embedded back in when the final dubbed audio is merged (this step is relatively slow).

Loop Background Audio: If the background audio duration is shorter than the final video duration, selecting this will loop the background audio; otherwise, it will be filled with silence.

Background Volume: The volume of the background audio after it is re-embedded. Default is 0.8, meaning the background is reduced to 0.8 times its original volume.
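Loop Background Audio and Background Volume can be pictured at the sample level: the background is repeated or padded with silence to the required length, scaled by the volume factor, and added to the dubbed track. This sketch assumes both tracks are lists of float samples in [-1.0, 1.0] at the same sample rate and that the target length is the dubbed track's length; the function names are hypothetical, and a real implementation would work on audio files with an audio library or FFmpeg.

```python
def loop_to_length(samples, length, loop):
    """Repeat (loop=True) or pad with silence (loop=False) so the result has `length` samples."""
    if len(samples) >= length:
        return samples[:length]
    if loop and samples:
        reps = -(-length // len(samples))  # ceiling division
        return (samples * reps)[:length]
    return samples + [0.0] * (length - len(samples))


def mix(dub, background, bg_volume=0.8, loop=True):
    """Fit the background to the dub length, scale it by bg_volume, and add sample by sample."""
    bg = loop_to_length(background, len(dub), loop)
    mixed = [d + bg_volume * b for d, b in zip(dub, bg)]
    # Clip to the valid [-1.0, 1.0] range for float audio.
    return [max(-1.0, min(1.0, s)) for s in mixed]
```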

Add Extra Background Audio: You can also select a local audio file as a new background accompaniment.

Restore Punctuation: If selected, will attempt to add punctuation marks after recognition.

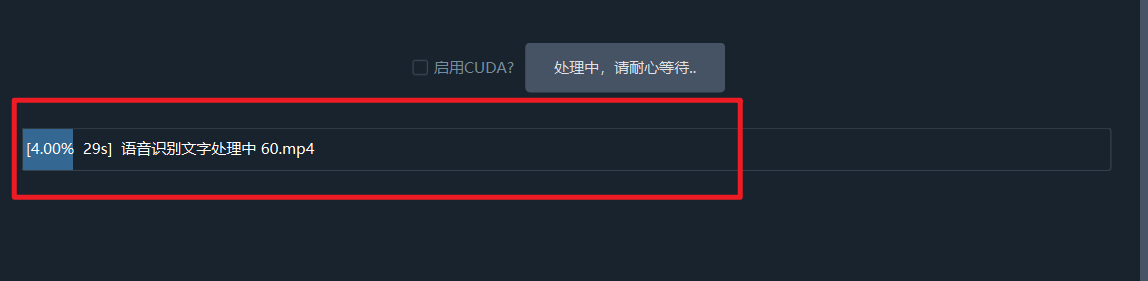

Line 8: Progress Bar

After the task is completed, click on the bottom progress bar area to open the output folder. You will see the final MP4 file, as well as materials generated during the process such as SRT subtitle files, dubbed audio files, etc.