🧠 WhisperX API: Usage in pyVideoTrans

WhisperX is a very powerful speech recognition model that also supports speaker diarization. However, the official release only provides a command-line tool, which is not beginner-friendly, and it offers no API.

So I created an enhanced version: whisperx-api! Based on the original model, it adds:

- ✅ Local Web Interface: Open it in your browser, upload a file, and transcribe with one click.

- ✅ OpenAI-Compatible API: Can stand in for OpenAI's Whisper API and integrate directly into your projects.

- ✅ Speaker Diarization: Automatically identifies and labels different speakers.

- ✅ One-Click Startup: Uses the uv tool to set up the environment automatically.

Using it in the pyVideoTrans project

Required Pre-installed Tools

We need the following 2 tools:

- uv: An ultra-fast Python package manager that sets up the environment with a single command.

- FFmpeg: A powerful audio/video processing tool for format conversion.

⚠️ Note: For detailed installation guides for uv and ffmpeg, please refer to my dedicated tutorial: https://pyvideotrans.com/blog/uv-ffmpeg
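If you already have a Python 3 interpreter, a quick way to confirm both tools are on your PATH is the standard library's `shutil.which`. The helper below is a hypothetical check, not part of whisperx-api; it only reports whether the `uv` and `ffmpeg` executables can be found, not whether they work correctly.

```python
import shutil

# Hypothetical helper: the names match the two prerequisites listed above.
REQUIRED_TOOLS = ("uv", "ffmpeg")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that shutil.which() cannot find on PATH."""
    return [name for name in tools if shutil.which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("uv and ffmpeg are both available.")
```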

VPN / Proxy Tool

WhisperX needs to download models from overseas servers. Please make sure your VPN/proxy tool is enabled and stable. We recommend enabling system proxy or global mode in your VPN; otherwise the models may fail to download.
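To see which proxy Python itself will pick up, you can ask the standard library. `urllib.request.getproxies()` reads the `*_proxy` environment variables and, on Windows, falls back to the system proxy settings, which is likely one reason enabling the VPN's system proxy mode helps. Whether whisperx-api's model downloader honors exactly these settings is an assumption; many Python HTTP clients consult the same sources.

```python
import urllib.request


def detected_proxies():
    """Return the proxy settings Python's standard library picks up.

    getproxies() reads *_proxy environment variables; on Windows it falls
    back to the system proxy configured in the registry when none are set.
    """
    return urllib.request.getproxies()


if __name__ == "__main__":
    proxies = detected_proxies()
    if proxies.get("https"):
        print("HTTPS proxy seen by Python:", proxies["https"])
    else:
        print("No HTTPS proxy detected; model downloads may fail.")
```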

🚀 Three Steps to Get Started, One-Click API Service Launch!

✅ Step 1: Download the Project Code

Visit the project homepage: https://github.com/jianchang512/whisperx-api

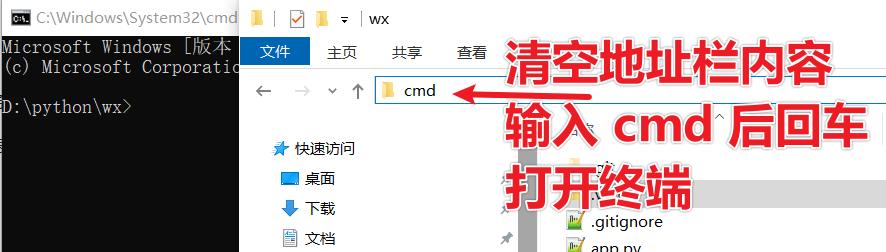

Click the green "Code" button → "Download ZIP" to download the archive and extract it. Then navigate into the folder containing the app.py and index.html files.

Click the folder's address bar, clear its contents, type cmd, and press Enter. A black terminal window opens in that folder.

✅ Step 2: Obtain the Access Token for Downloading the "Speaker Recognition" Model (Skip if you don't need speaker diarization)

The speaker diarization model requires agreeing to their terms before download, so you need to first "sign the agreement" on the Hugging Face website and obtain an access token. This step requires a VPN, otherwise the website cannot be accessed.

① Register and Log in to Hugging Face

Visit https://huggingface.co/ to create a free account, then log in.

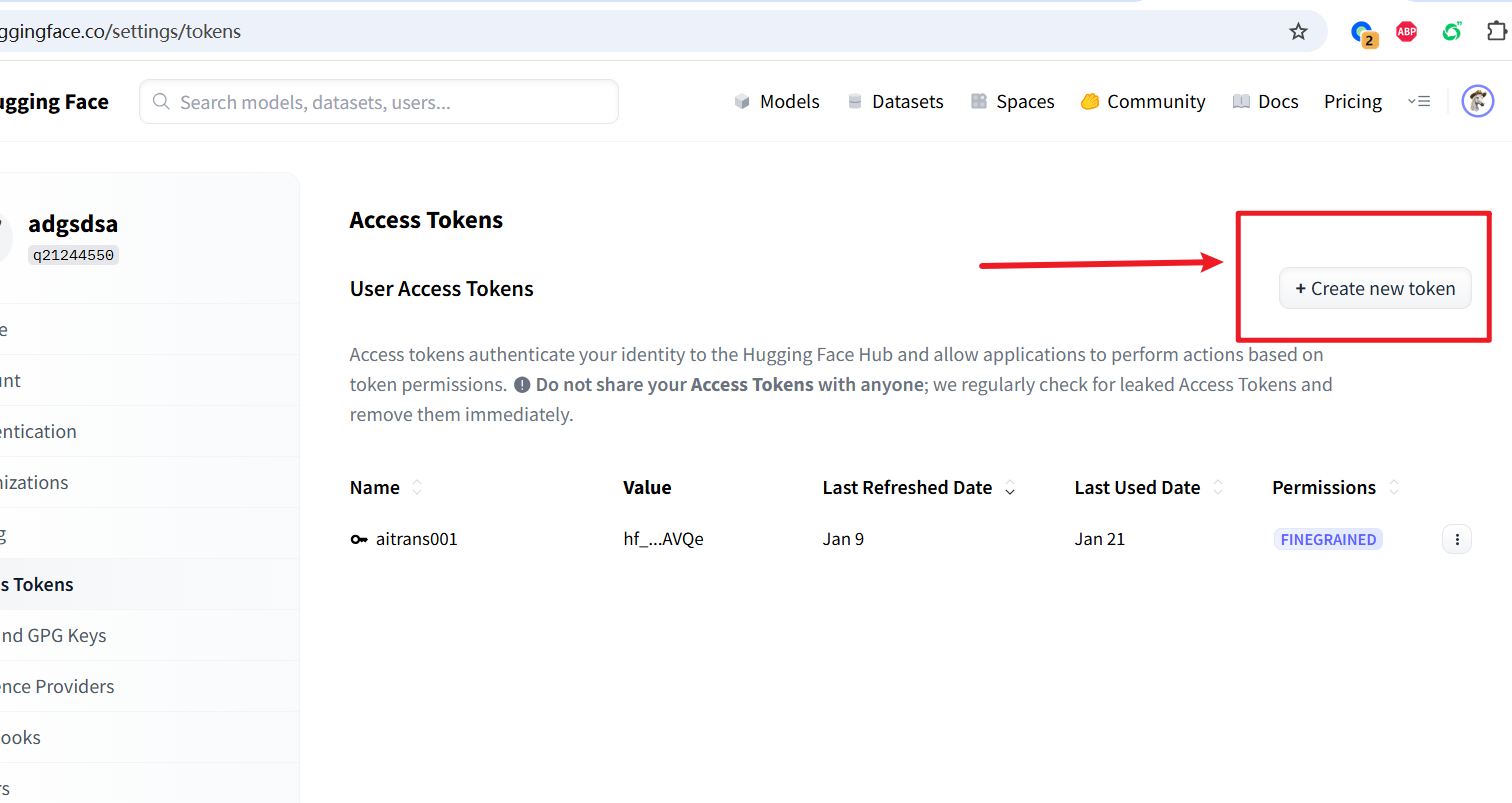

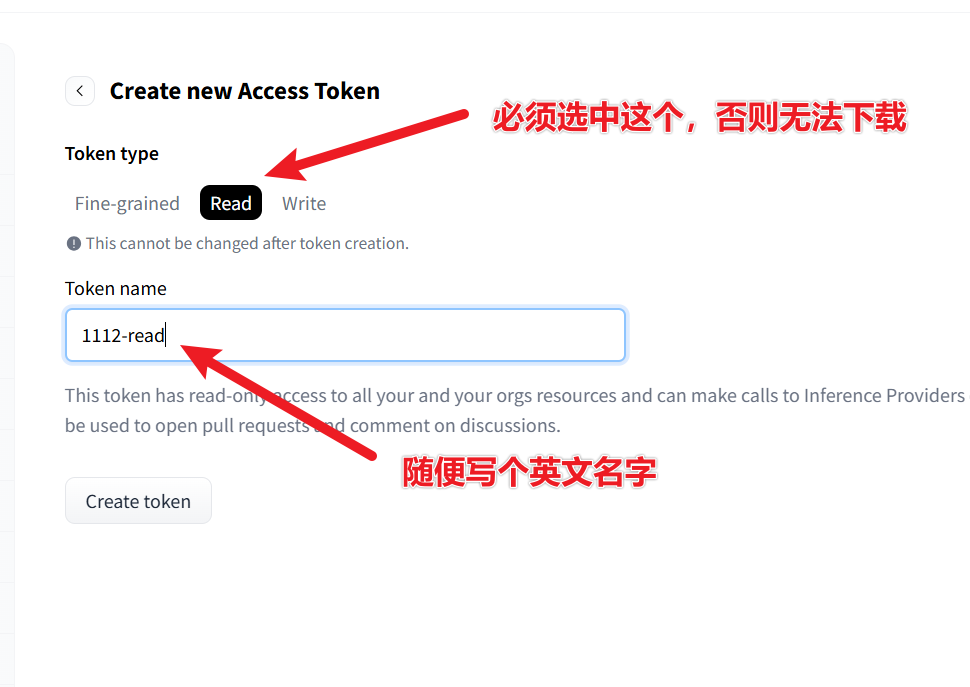

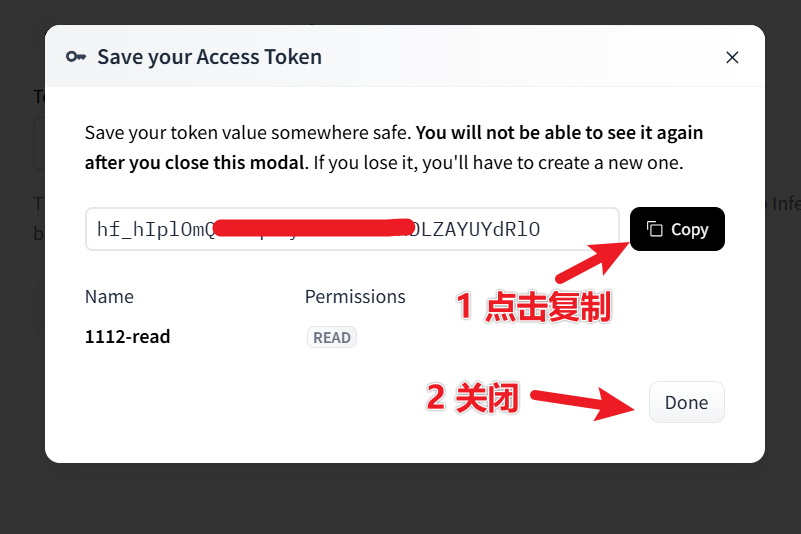

② Create an Access Token

Visit https://huggingface.co/settings/tokens and click "New token" → select the Read permission → create the token and copy the string, which starts with hf_.

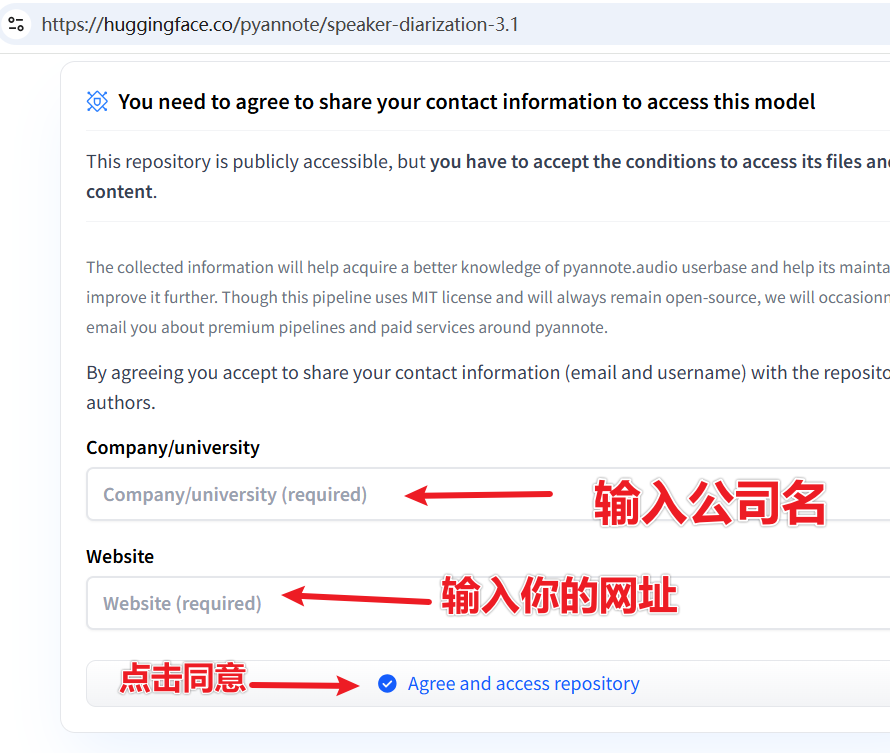

③ Agree to the Model Usage Terms (Must check the box!)

Visit the following two model pages one by one and agree to the terms:

- https://huggingface.co/pyannote/speaker-diarization-3.1

- https://huggingface.co/pyannote/segmentation-3.0

On each page, fill in the two displayed text boxes, then click the submit button.

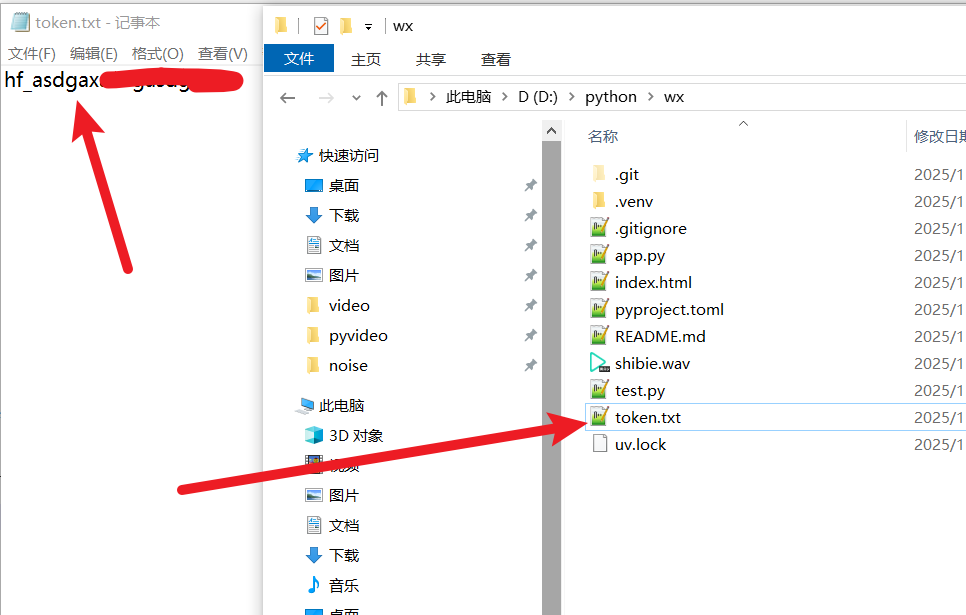

④ Save the Token

Return to your whisperx-api project folder. Create a new file named token.txt. Paste the copied hf_ token into it and save.
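Before launching, you can sanity-check token.txt with a few lines of Python. This is a hypothetical helper, not how whisperx-api itself reads the file: it only verifies that the file holds a single string starting with hf_, and it cannot tell whether the token is valid or whether you accepted the model terms.

```python
from pathlib import Path


def read_hf_token(path="token.txt"):
    """Read a Hugging Face token from a text file and sanity-check its format."""
    # "utf-8-sig" also strips a byte-order mark if an editor added one.
    token = Path(path).read_text(encoding="utf-8-sig").strip()
    if not token.startswith("hf_"):
        raise ValueError(f"{path} does not contain a token starting with 'hf_'")
    if any(ch.isspace() for ch in token):
        raise ValueError(f"{path} should contain only the token, on one line")
    return token


if __name__ == "__main__":
    print("Token format looks OK:", read_hf_token()[:6] + "...")
```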

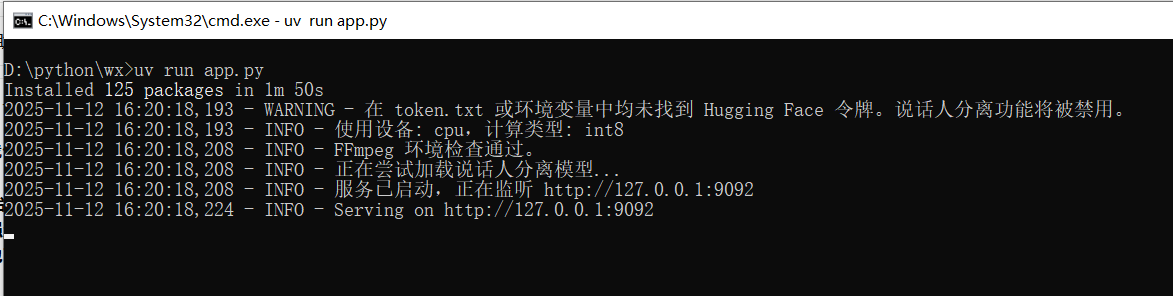

✅ Step 3: One-Click Launch!

Make sure your cmd terminal is still in the folder containing app.py, then run the startup command below. This is also the only command you'll need to launch the service in the future.

uv run app.py

The first run takes a while because it installs the required modules and dependencies. Please be patient.

When you see output similar to the image below, it means the launch was successful 👇

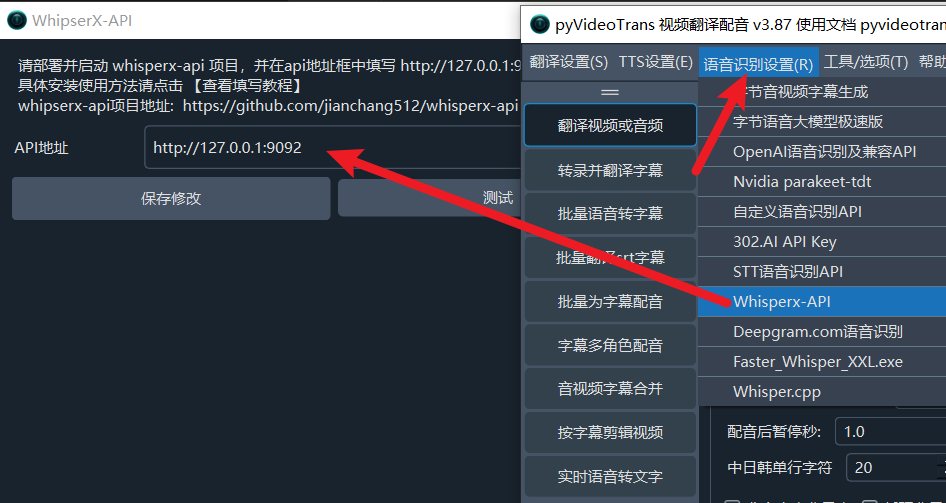

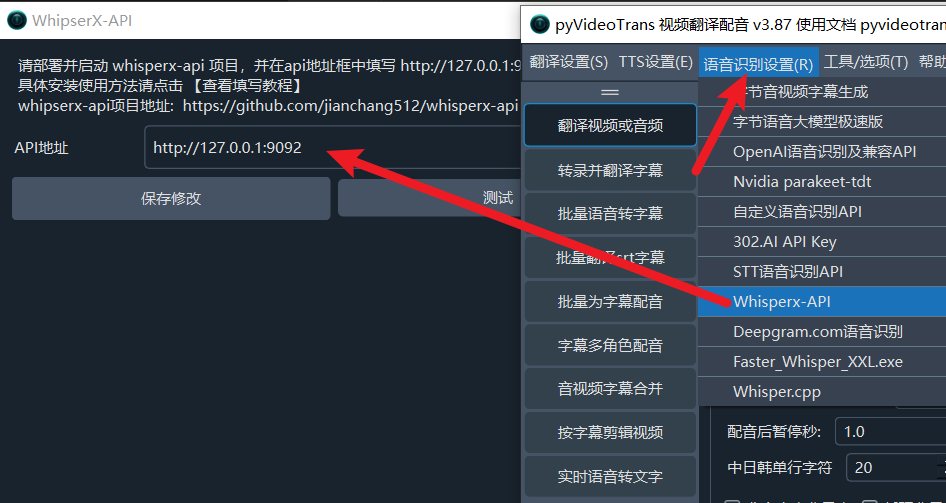

Your browser will automatically open this address: http://127.0.0.1:9092. Enter this address into the pyVideoTrans -- Menu -- Speech Recognition Settings -- WhisperxAPI window's API Address text box.

The browser also shows a clean web interface. If you don't need it, you can close the page, but keep the cmd terminal open for as long as you want to use the API.
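To confirm the service is listening before you configure pyVideoTrans, a small reachability check like the one below can help. It is a sketch, not part of whisperx-api: it treats any HTTP response (even an error status) as a sign the server is up, and it bypasses proxies because the API runs on your own machine.

```python
import urllib.error
import urllib.request

# Address from the startup output described above.
API_BASE = "http://127.0.0.1:9092"


def is_server_up(url=API_BASE, timeout=3.0):
    """Return True if an HTTP server answers at `url`, False otherwise.

    Any HTTP response counts as "up", even an error status such as 404;
    only connection failures and timeouts count as "down".
    """
    # Bypass any configured proxy: the API runs on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError as err:
        err.close()
        return True  # the server answered, just not with a 2xx status
    except OSError:
        return False  # URLError (e.g. connection refused) and timeouts


if __name__ == "__main__":
    print("whisperx-api reachable:", is_server_up())
```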

💡 Usage Guide

Now you can use it in two ways 👇

🌐 Method 1: Web Operation Interface

① Upload File: Click or drag an audio/video file into the dashed box.

② Set Options:

- Language: Select the corresponding language if known; otherwise choose "Auto Detect".

- Model: Larger models are more accurate but slower. Recommended: large-v3-turbo.

- Prompt: Enter names, terms, etc., to improve recognition accuracy, e.g., OpenAI, WhisperX, PyTorch.

③ Start Transcription: Click "Submit Transcription" and wait for processing to complete.

④ View and Download: The results are displayed below. You can edit them directly, then click "Download SRT File" to save.

⚙️ Method 2: API Call

Enter the address http://127.0.0.1:9092 into the pyVideoTrans -- Menu -- Speech Recognition Settings -- WhisperxAPI window's API Address text box.
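For calling the API from your own code, the project is described as OpenAI-compatible, so a client would presumably send the same kind of multipart request as OpenAI's transcription endpoint. The sketch below assumes the route /v1/audio/transcriptions under http://127.0.0.1:9092 and OpenAI's form field names (file, model, language, prompt, response_format); none of these are confirmed by this tutorial, so check the project README first. It uses only the standard library and builds the request without sending it.

```python
import urllib.request
import uuid

# Assumptions: the base URL comes from this tutorial; the route and the form
# field names follow OpenAI's transcription API and are NOT confirmed for
# whisperx-api.
API_BASE = "http://127.0.0.1:9092"
ENDPOINT = "/v1/audio/transcriptions"


def _form_field(boundary, name, value):
    """Encode one plain-text multipart/form-data field."""
    return (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
        f"{value}\r\n"
    ).encode("utf-8")


def build_transcription_request(audio_bytes, filename, model="large-v3-turbo",
                                language=None, prompt=None,
                                response_format="srt", base_url=API_BASE):
    """Build (but do not send) a multipart POST carrying one audio file."""
    boundary = uuid.uuid4().hex
    fields = {"model": model, "response_format": response_format}
    if language:  # leave unset to let the server auto-detect the language
        fields["language"] = language
    if prompt:  # names or terms that should be recognized correctly
        fields["prompt"] = prompt

    body = b"".join(_form_field(boundary, k, v) for k, v in fields.items())
    file_header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    body += file_header + audio_bytes + b"\r\n"
    body += f"--{boundary}--\r\n".encode("utf-8")  # closing boundary

    return urllib.request.Request(
        base_url + ENDPOINT,
        data=body,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

With the server running, `urllib.request.urlopen(req)` would send the request and return the transcript in the response body. If a system-wide proxy is configured, sending through an opener built with `urllib.request.ProxyHandler({})` keeps the local request from being routed to the proxy.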

❓ Frequently Asked Questions (FAQ)

Q: Getting "FFmpeg not found" error on startup? A: FFmpeg is either not installed or not added to the system PATH environment variable. Please re-check the installation steps in the "Required Pre-installed Tools" section.

Q: Clicking the transcription button does nothing? A: The first run downloads the models, so please wait patiently. If an error appears, check the terminal logs; it's often caused by the VPN being disabled or unstable.

Q: Why are there no [Speaker1], [Speaker2] labels? A:

- They won't appear if there's only one speaker in the audio.

- Or your Hugging Face token is configured incorrectly, or you haven't agreed to the model terms. Please re-check Step 2.
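To check quickly whether diarization produced labels, you can count the distinct speaker tags in a downloaded SRT file. This sketch assumes the tags look like [Speaker1], as in the question above; the exact label format in whisperx-api's output may differ.

```python
import re

# Assumption: speaker tags look like "[Speaker1]", as in the question above.
SPEAKER_TAG = re.compile(r"\[Speaker(\d+)\]")


def speakers_in_srt(srt_text):
    """Return the sorted speaker numbers that appear in an SRT transcript."""
    return sorted({int(n) for n in SPEAKER_TAG.findall(srt_text)})


if __name__ == "__main__":
    sample = (
        "1\n00:00:00,000 --> 00:00:02,000\n[Speaker1] Hello.\n\n"
        "2\n00:00:02,500 --> 00:00:04,000\n[Speaker2] Hi there.\n"
    )
    print(speakers_in_srt(sample))  # [1, 2]
```

If a recording with several voices yields an empty list or a single speaker, that points back to the token and model-terms checks in Step 2.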

Q: Processing is too slow? A: Processing can be slow in CPU mode. With an NVIDIA GPU, it can be tens of times faster.
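To see whether the PyTorch in your environment can use an NVIDIA GPU at all, a check like the one below may help. Save it in the project folder (e.g. as check_gpu.py, a hypothetical name) and run it with uv run check_gpu.py, assuming the project's dependencies, including PyTorch, are installed in that environment. How whisperx-api itself selects a device is not covered in this tutorial, and a CPU-only PyTorch build reports cpu even on a machine with an NVIDIA card.

```python
def detect_device():
    """Report whether PyTorch in the current environment can use an NVIDIA GPU.

    Returns "cuda" if torch is installed and sees a CUDA device, else "cpu".
    """
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed in this environment
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("PyTorch device:", detect_device())
```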